JAM: A Tiny Flow-based Song Generator with Fine-grained Controllability and Aesthetic Alignment (2507.20880v1)

Abstract: Diffusion and flow-matching models have recently revolutionized automatic text-to-audio generation. These models are increasingly capable of generating high-quality, faithful audio that captures speech and acoustic events. However, there is still much room for improvement in creative audio generation, which primarily involves music and songs. Recent open lyrics-to-song models, such as DiffRhythm, ACE-Step, and LeVo, have set an acceptable standard in automatic song generation for recreational use. However, these models lack the fine-grained word-level controllability often desired by musicians in their workflows. To the best of our knowledge, our flow-matching-based JAM is the first effort toward endowing song generation with word-level timing and duration control, allowing fine-grained vocal control. To better align generated songs with human preferences, we implement aesthetic alignment through Direct Preference Optimization, which iteratively refines the model using a synthetic dataset, eliminating the need for manual data annotations. Furthermore, we aim to standardize the evaluation of such lyrics-to-song models through our public evaluation dataset JAME. We show that JAM outperforms the existing models in terms of music-specific attributes.

Summary

- The paper introduces a compact flow-matching model that enables word- and phoneme-level control for improved lyrical intelligibility and precise vocal timing.

- It employs token-level duration control and temporally-aware phoneme alignment to maintain natural prosody and strict timing boundaries.

- Experimental results demonstrate state-of-the-art performance in audio fidelity and aesthetic alignment, confirmed by both objective metrics and expert evaluations.


Introduction and Motivation

JAM introduces a compact, flow-matching-based architecture for lyrics-to-song generation, targeting the limitations of prior models in controllability, efficiency, and alignment with human musical preferences. Unlike previous large-scale diffusion and autoregressive models, JAM is designed to provide fine-grained, word- and phoneme-level control over vocal timing and duration, while maintaining high audio fidelity and stylistic flexibility. The model is further enhanced through iterative aesthetic alignment using Direct Preference Optimization (DPO), leveraging synthetic preference data to optimize for human-like musicality and enjoyment.

Model Architecture and Training Pipeline

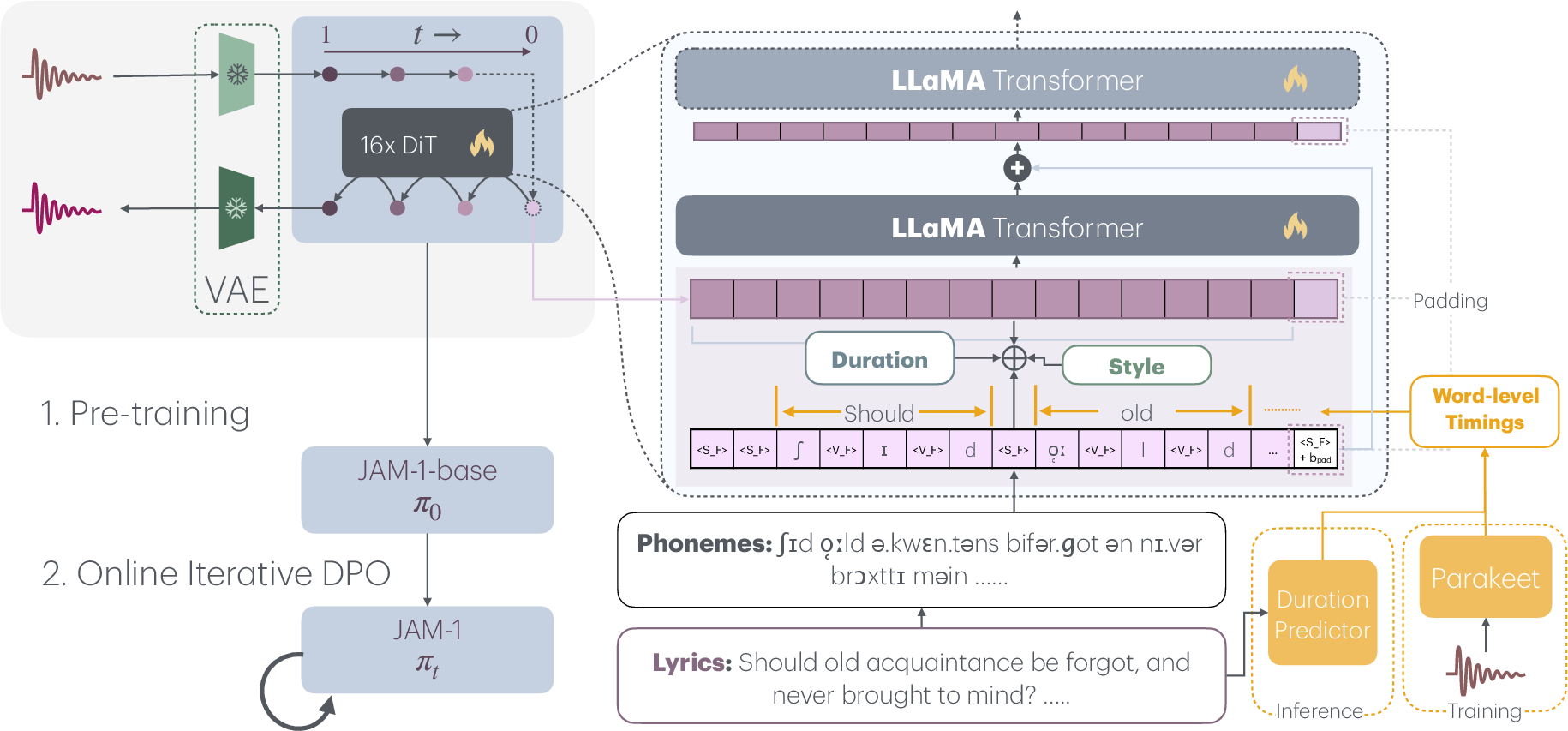

JAM employs a 530M-parameter conditional flow-matching model whose Diffusion Transformer (DiT) backbone consists of 16 LLaMA-style Transformer layers. The model is conditioned along three axes: lyrics (with explicit word- and phoneme-level timing), target duration, and a style prompt (audio or text). Audio is compressed into latents by the Stable Audio Open VAE encoder and decoded back to a waveform by a DiffRhythm-initialized decoder, operating at 44.1 kHz on songs of up to 3 minutes and 50 seconds.
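To give a rough sense of sequence length, the sketch below converts a song duration into a number of latent frames. It assumes the Stable Audio Open VAE emits one latent frame per 2048 audio samples (about 21.5 frames per second at 44.1 kHz); that ratio is not stated in this summary, and `latent_frames` is a hypothetical helper.

```python
import math

SAMPLE_RATE = 44_100  # Hz, as stated above
# Assumption: one latent frame per 2048 audio samples (~21.5 frames/s),
# the compression ratio commonly reported for the Stable Audio Open VAE.
HOP_SAMPLES = 2048


def latent_frames(duration_s: float) -> int:
    """Number of latent frames needed to cover `duration_s` seconds of audio."""
    return math.ceil(duration_s * SAMPLE_RATE / HOP_SAMPLES)


print(latent_frames(90))            # pre-training crop length: 1938 frames
print(latent_frames(3 * 60 + 50))   # maximum song length (230 s): 4953 frames
```

Under this assumption, a full-length song is a sequence of roughly five thousand latent frames, which the DiT processes in one pass.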

Figure 1: A depiction of the JAM architecture and training pipeline, highlighting the integration of lyric, style, and duration conditioning.

The training pipeline consists of three stages:

- Pre-training on 90-second clips with random crops.

- Supervised fine-tuning (SFT) on full-length songs.

- Iterative DPO-based preference alignment using SongEval-generated synthetic preference labels.

The flow-matching approach directly regresses a time-dependent vector field, enabling efficient and stable training compared to score-based diffusion models. During inference, an ODE solver integrates the learned vector field to generate the latent representation, which is then decoded to audio.
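A minimal sketch of the regression target and the ODE integration described above, assuming the common linear (rectified-flow) path x_t = (1 - t)·x0 + t·x1 between Gaussian noise x0 and a clean latent x1, and a plain fixed-step Euler solver. JAM's exact path, time sampling, and solver are not specified here; all function names are illustrative.

```python
import numpy as np


def cfm_training_pair(x1, rng):
    """Build one (x_t, t, target velocity) example for conditional flow matching."""
    x0 = rng.standard_normal(x1.shape)   # Gaussian noise sample
    t = rng.uniform()                    # time drawn from [0, 1)
    xt = (1.0 - t) * x0 + t * x1         # point on the linear path
    target_v = x1 - x0                   # constant velocity along that path
    return xt, t, target_v


def cfm_loss(pred_v, target_v):
    """Mean-squared error between predicted and target velocities."""
    return float(np.mean((pred_v - target_v) ** 2))


def euler_sample(velocity_fn, x0, num_steps=32):
    """Integrate dx/dt = v(x, t) from t = 0 (noise) to t = 1 (latent) with Euler steps."""
    x = np.array(x0, dtype=float)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        x = x + dt * velocity_fn(x, i * dt)
    return x


# Toy check: if the "data" is a single point mu, the exact field is (mu - x) / (1 - t).
mu = np.array([0.5, -1.0, 2.0])


def oracle_velocity(x, t):
    return (mu - x) / (1.0 - t)


rng = np.random.default_rng(0)
xt, t, target_v = cfm_training_pair(mu, rng)
print(cfm_loss(oracle_velocity(xt, t), target_v))   # ~0 up to rounding
sample = euler_sample(oracle_velocity, rng.standard_normal(3))
print(np.allclose(sample, mu))                      # True
```

With a single target point the oracle field reproduces the regression target exactly, so the loss vanishes and Euler integration lands on mu. A trained DiT would replace the oracle with a learned, condition-dependent velocity prediction.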

Conditioning and Fine-grained Temporal Control

JAM's conditioning pipeline fuses lyric, style, and duration embeddings at the latent level. Lyric conditioning uses word- and phoneme-level timing: the timings are used to build upsampled phoneme sequences, which are embedded by a convolutional network. Style conditioning uses MuQ-MuLan embeddings, and duration conditioning combines a global duration embedding with token-level duration control (TDC). TDC introduces a learnable bias for padding tokens, letting the model sharply distinguish valid content from silence and thus respect precise temporal boundaries.
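The padding-bias idea behind TDC can be sketched as follows. This is a hypothetical illustration: it assumes conditioning is laid out as one embedding per latent frame and that the learnable bias is simply added to every frame past the target duration. The real layer shapes and the point where the bias enters the network are not given in this summary.

```python
import numpy as np


def apply_token_duration_control(frame_emb, valid_frames, pad_bias):
    """Hypothetical TDC sketch: tag frames past the target duration with a bias.

    frame_emb:    (T, D) per-frame conditioning embeddings.
    valid_frames: number of frames inside the target duration.
    pad_bias:     (D,) bias vector; learnable in a real model, fixed here.
    """
    num_frames = frame_emb.shape[0]
    is_pad = np.arange(num_frames) >= valid_frames   # True for frames past the target end
    out = frame_emb.copy()
    out[is_pad] += pad_bias                          # shift only the padding frames
    return out, is_pad


emb = np.zeros((8, 4))
out, is_pad = apply_token_duration_control(emb, valid_frames=5, pad_bias=np.full(4, 0.5))
print(is_pad.astype(int))   # [0 0 0 0 0 1 1 1]
```

Because one shared vector marks every padded frame, the network sees an identical, easily recognized signature wherever output should be silent, which is one plausible reading of how TDC sharpens the content/silence boundary.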

A key innovation is the temporally-aware word-level phoneme alignment algorithm, which distributes phoneme tokens within each word's temporal span, supporting both natural prosody and explicit user control. The model supports both continuous and quantized (beat-aligned) timestamp inputs, facilitating practical user interaction and downstream integration.
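A sketch of one way to spread phonemes across each word's span and to snap timestamps to a beat grid. `align_phonemes` places a word's phonemes at evenly spaced frames inside the word and fills the remaining frames with a pad token; it is an illustrative "sparse" layout, not necessarily the paper's exact rule. The frame rate, the `<pad>` token, and both helper names are assumptions.

```python
PAD = "<pad>"


def align_phonemes(words, num_frames, frame_rate):
    """Spread each word's phonemes evenly across that word's frame span.

    words: list of (start_s, end_s, phonemes) tuples with times in seconds.
    Returns a list of num_frames tokens; frames without a phoneme hold PAD.
    If a word has more phonemes than frames, later phonemes overwrite earlier ones.
    """
    frames = [PAD] * num_frames
    for start_s, end_s, phonemes in words:
        first = int(round(start_s * frame_rate))
        last = min(int(round(end_s * frame_rate)), num_frames)
        span = last - first
        if span <= 0 or not phonemes:
            continue  # word lies outside the clip or has no phonemes
        step = span / len(phonemes)
        for k, ph in enumerate(phonemes):
            frames[first + int(k * step)] = ph
    return frames


def quantize_to_beats(t_s, bpm, subdivision=1):
    """Snap a timestamp in seconds to the nearest beat (or beat subdivision)."""
    grid = 60.0 / (bpm * subdivision)
    return round(t_s / grid) * grid


# Toy example at 10 frames/s (real latent frame rates are higher).
words = [(0.0, 0.4, ["HH", "AH", "L", "OW"]), (0.5, 1.0, ["W", "ER"])]
print(align_phonemes(words, num_frames=10, frame_rate=10))
# ['HH', 'AH', 'L', 'OW', '<pad>', 'W', '<pad>', 'ER', '<pad>', '<pad>']
print(quantize_to_beats(1.26, bpm=120))   # 1.5
```

Quantizing word boundaries with `quantize_to_beats` before calling `align_phonemes` mirrors the beat-aligned input mode: users only need to pick beats, not exact timestamps.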

Aesthetic Alignment via Direct Preference Optimization

To address the gap between technical intelligibility and human musical preference, JAM applies DPO using SongEval as the reward signal. This process iteratively refines the model by generating candidate outputs, scoring them, and optimizing the model to prefer higher-scoring samples. The DPO loss is adapted for flow-matching objectives, and a ground-truth reconstruction term is optionally included to regularize against overfitting to synthetic preferences.
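The loss below is a hedged sketch in the style of Diffusion-DPO (Wallace et al., 2023), rewritten in terms of flow-matching velocity errors: the policy is rewarded for lowering its error on the preferred sample, relative to a frozen reference model, more than on the rejected one. The constant factors, `beta`, `gt_weight`, and best-versus-worst pair selection are assumptions, not values or rules taken from the paper.

```python
import numpy as np


def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    return -np.logaddexp(0.0, -z)


def flow_dpo_loss(err_w_policy, err_w_ref, err_l_policy, err_l_ref,
                  beta=1.0, gt_err=None, gt_weight=0.0):
    """Diffusion-DPO-style preference loss on flow-matching errors (sketch).

    err_*: squared velocity errors ||v_target - v_pred||^2 for the preferred (w)
    and rejected (l) samples under the trainable policy and a frozen reference.
    """
    # Negative margin = policy fits the sample better than the reference does.
    margin_w = np.asarray(err_w_policy) - np.asarray(err_w_ref)
    margin_l = np.asarray(err_l_policy) - np.asarray(err_l_ref)
    loss = -log_sigmoid(-beta * (margin_w - margin_l))
    if gt_err is not None:
        # Optional ground-truth flow-matching term that regularizes against
        # overfitting to synthetic preferences.
        loss = loss + gt_weight * np.asarray(gt_err)
    return float(np.mean(loss))


def build_preference_pair(candidates, scores):
    """Return (chosen, rejected): best- and worst-scored candidates, e.g. by SongEval."""
    order = np.argsort(scores)
    return candidates[order[-1]], candidates[order[0]]


print(build_preference_pair(["take_a", "take_b", "take_c"], np.array([6.1, 7.4, 5.0])))
# ('take_b', 'take_c')
print(flow_dpo_loss(1.0, 1.0, 1.0, 1.0))   # log 2 when policy equals reference
```

Each iteration of the loop described above would generate candidates, score them, form pairs like this, and take gradient steps on the loss before refreshing the candidates with the updated model.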

This alignment strategy yields measurable improvements in both objective and subjective metrics, including content enjoyment, musicality, and vocal naturalness, as well as reductions in word and phoneme error rates.

Experimental Results

Objective Evaluation

JAM is evaluated on JAME, a newly curated, genre-diverse, contamination-free benchmark. Compared to strong baselines (LeVo, YuE, DiffRhythm, ACE-Step), JAM achieves:

- Lowest WER (0.151) and PER (0.101), indicating superior lyric intelligibility and alignment.

- Highest MuQ-MuLan similarity (0.759) and genre classification accuracy (0.704), reflecting strong style adherence.

- Best content enjoyment (CE = 7.423) and lowest FAD (0.204), demonstrating high subjective appeal and audio fidelity.

- Consistent state-of-the-art or second-best performance across all other SongEval and aesthetic metrics.
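For reference, WER and PER are both normalized edit distances: the number of substitutions, deletions, and insertions needed to turn the transcribed sequence into the reference, divided by the reference length. The sketch below assumes the generated vocals have already been transcribed into words (for WER) or phonemes (for PER); which ASR system and phonemizer the paper uses is not stated here.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance (substitutions, insertions, deletions) between token lists."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(prev[j] + 1,             # deletion
                          curr[j - 1] + 1,         # insertion
                          prev[j - 1] + (r != h))  # substitution or match
        prev = curr
    return prev[-1]


def error_rate(ref, hyp):
    """Edit distance normalized by reference length (WER on words, PER on phonemes)."""
    return edit_distance(ref, hyp) / max(len(ref), 1)


ref = "the sun will rise again".split()
hyp = "the son will rise".split()
print(error_rate(ref, hyp))   # 0.4 (one substitution + one deletion over 5 words)
```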

Subjective Evaluation

Human annotators with musical expertise rated JAM highest in enjoyment, musicality, and song-structure clarity, and on par with the best models in audio quality and vocal naturalness. The authors attribute these gains to the model's fine-grained timing control and aesthetic alignment.

Ablation and Analysis

- Token-level duration control is critical for suppressing unwanted audio beyond the target duration, achieving sub-1% RMS amplitude leakage compared to >18% for models without TDC.

- Phoneme assignment strategy impacts long-term musicality: the "Average Sparse" method yields better FAD and SongEval scores than "Pad Right," despite slightly higher PER.

- Iterative DPO consistently improves musicality and aesthetic metrics, though excessive DPO can increase FAD, indicating a trade-off between alignment and perceptual realism.

- Duration prediction experiments show that naive timestamp prediction (e.g., timestamps predicted with GPT-4o) degrades musicality and intelligibility, while beat-aligned quantization offers a practical compromise with minimal performance loss.
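One plausible way to compute a leakage figure like the TDC ablation numbers above is the ratio of RMS amplitude after the target duration to RMS amplitude before it. The paper's exact measurement protocol is not given in this summary, so `tail_leakage` is a hypothetical metric.

```python
import numpy as np


def rms(x):
    """Root-mean-square amplitude; 0.0 for an empty array."""
    return float(np.sqrt(np.mean(np.square(x)))) if x.size else 0.0


def tail_leakage(audio, sample_rate, target_s):
    """RMS after target_s divided by RMS before it (0.0 if the body is silent)."""
    cut = int(round(target_s * sample_rate))
    body_rms = rms(audio[:cut])
    return rms(audio[cut:]) / body_rms if body_rms > 0 else 0.0


sr = 100
t = np.arange(2 * sr) / sr
audio = np.sin(2 * np.pi * 5 * t)   # 2 s of a 5 Hz tone
audio[sr:] *= 0.005                 # faint residue after a 1 s target duration
print(f"{tail_leakage(audio, sr, target_s=1.0):.1%}")   # 0.5%
```

On this measure, a model with TDC would sit below 1%, while an 18% ratio means clearly audible content continues past the requested end.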

Practical Implications and Limitations

JAM's architecture enables efficient, controllable, and high-fidelity lyrics-to-song generation suitable for both professional and research applications. The model's compact size (530M parameters) allows for faster inference and lower resource requirements compared to billion-parameter baselines. The explicit support for word- and phoneme-level timing control is particularly valuable for composers and music technologists seeking precise prosodic and rhythmic manipulation.

However, the requirement for accurate word-level duration annotations limits usability for non-expert users. Experiments with duration predictors highlight the need for robust, musically-aware timestamp generation, ideally integrated into the model's training loop. The current system also lacks phoneme-level duration control, which could further enhance expressiveness and pronunciation accuracy.

Future Directions

- End-to-end duration prediction: Jointly training a duration predictor with the song generator to enable robust, user-friendly inference without manual timing annotations.

- Phoneme-level control: Incorporating phoneme-level alignment and duration modeling for finer expressive granularity.

- Broader language and style support: Extending the model to handle non-English lyrics and a wider range of musical genres and vocal styles.

- Real-time and interactive applications: Leveraging the model's efficiency for live composition, editing, and music production workflows.

Conclusion

JAM demonstrates that a compact, flow-matching-based model with explicit fine-grained temporal control and iterative aesthetic alignment can achieve state-of-the-art performance in lyrics-to-song generation. The model's design and evaluation set new standards for controllability, efficiency, and musicality in AI-driven music generation. Future work on integrated duration prediction and phoneme-level control will further enhance the model's robustness and applicability in real-world creative contexts.
