AgroBench: Vision-Language Model Benchmark in Agriculture (2507.20519v1)

Abstract: Precise automated understanding of agricultural tasks such as disease identification is essential for sustainable crop production. Recent advances in vision-language models (VLMs) are expected to further expand the range of agricultural tasks by facilitating human-model interaction through easy, text-based communication. Here, we introduce AgroBench (Agronomist AI Benchmark), a benchmark for evaluating VLM models across seven agricultural topics, covering key areas in agricultural engineering and relevant to real-world farming. Unlike recent agricultural VLM benchmarks, AgroBench is annotated by expert agronomists. Our AgroBench covers a state-of-the-art range of categories, including 203 crop categories and 682 disease categories, to thoroughly evaluate VLM capabilities. In our evaluation on AgroBench, we reveal that VLMs have room for improvement in fine-grained identification tasks. Notably, in weed identification, most open-source VLMs perform close to random. With our wide range of topics and expert-annotated categories, we analyze the types of errors made by VLMs and suggest potential pathways for future VLM development. Our dataset and code are available at https://dahlian00.github.io/AgroBenchPage/ .

Summary

- The paper introduces AgroBench, a comprehensive benchmark featuring seven agricultural tasks with expert annotations to evaluate VLM performance.

- It compares closed-source and open-source models, revealing significant gaps in fine-grained tasks such as weed identification.

- The study highlights the need for domain-specific pretraining and enhanced visual attention in VLMs for automated crop monitoring and management.

AgroBench: A Comprehensive Vision-Language Model Benchmark for Agriculture

Motivation and Context

Robust, generalizable vision-language models (VLMs) are critical for automating complex agricultural tasks such as disease identification, pest management, and crop monitoring. Existing agricultural datasets are limited in scope: they often focus on narrow tasks or rely on synthetic annotations. AgroBench addresses these limitations with a large-scale, expert-annotated benchmark spanning seven key agricultural tasks, with a breadth of categories unmatched in prior work. The benchmark is designed to rigorously evaluate both open-source and closed-source VLMs in realistic, expert-driven scenarios.

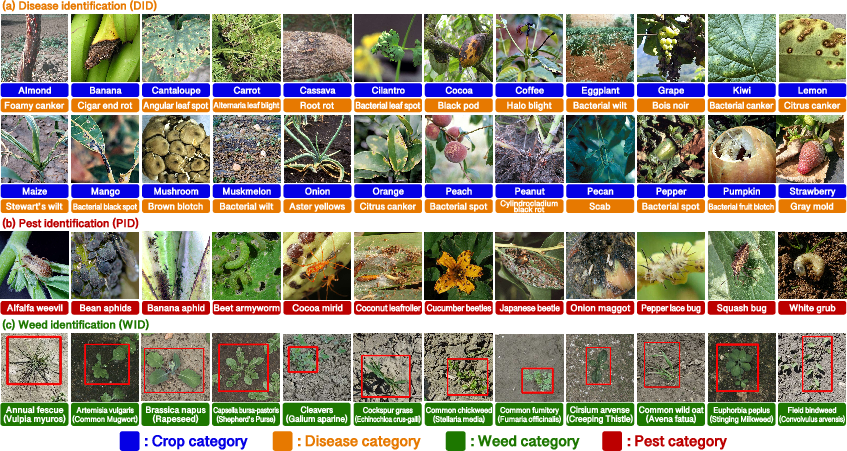

Figure 1: Examples of labeled images for DID, PID, and WID tasks. AgroBench includes 682 crop-disease pairs, 134 pest categories, and 108 weed categories, prioritizing real farm settings.

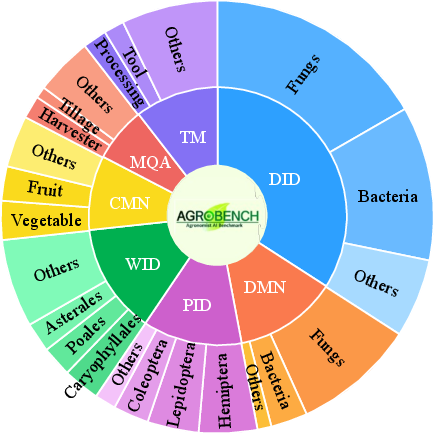

Benchmark Design and Task Coverage

AgroBench comprises seven tasks, each reflecting a critical aspect of agricultural engineering and real-world farming:

- Disease Identification (DID): Fine-grained classification of crop diseases, with 1,502 QA pairs across 682 crop-disease combinations.

- Pest Identification (PID): Recognition of 134 pest species, including multiple growth stages.

- Weed Identification (WID): Detection and classification of 108 weed species, with bounding box localization.

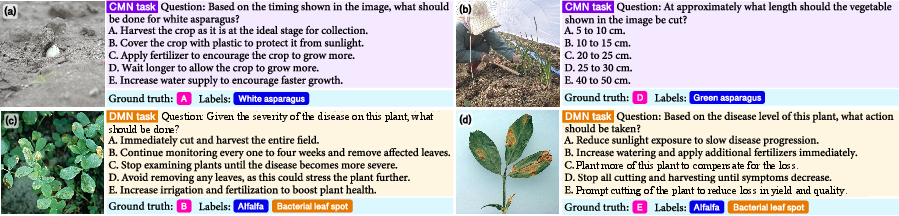

- Crop Management (CMN): Decision-making for optimal crop practices based on visual cues.

- Disease Management (DMN): Recommendation of intervention strategies for diseased crops, considering severity and context.

- Machine Usage QA (MQA): Selection and operation of agricultural machinery for specific tasks.

- Traditional Management (TM): Identification and explanation of sustainable, traditional farming methods.

Each task is formulated as a multiple-choice question, with distractors carefully selected to challenge both visual and domain reasoning. All annotations are performed by agronomist experts, ensuring high-quality, domain-accurate supervision.
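The multiple-choice formulation can be illustrated with a minimal sketch. The `MCQItem` fields, the lettered layout, and the closing instruction are assumptions made for illustration; the summary does not specify AgroBench's actual data schema or prompt wording.

```python
from dataclasses import dataclass
from string import ascii_uppercase


@dataclass(frozen=True)
class MCQItem:
    """One hypothetical benchmark item; field names are illustrative only."""
    task: str                 # task code such as "DID" or "WID"
    image_path: str           # image the question refers to
    question: str
    options: tuple[str, ...]  # correct answer plus distractors
    answer_index: int         # position of the correct option


def format_prompt(item: MCQItem) -> str:
    """Render the question and its lettered options as a text prompt."""
    lines = [item.question]
    for letter, option in zip(ascii_uppercase, item.options):
        lines.append(f"{letter}. {option}")
    lines.append("Answer with the letter of the correct option.")
    return "\n".join(lines)


def answer_letter(item: MCQItem) -> str:
    """Letter corresponding to the gold option."""
    return ascii_uppercase[item.answer_index]
```

Keeping the gold answer as an index rather than a letter lets the options be shuffled per item without touching the label.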

Figure 2: Seven benchmark tasks in AgroBench, illustrating the diversity of topics and category coverage. Task accuracy is averaged to mitigate QA count imbalance.

Figure 3: Examples of QA pairs for CMN and DMN tasks, demonstrating nuanced differences in management recommendations based on crop type and disease severity.
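The Figure 2 caption notes that task accuracy is averaged to mitigate QA count imbalance. A minimal sketch of that idea follows, contrasting a per-task (macro) average with a pooled (micro) average. The record format `(task, is_correct)` and the function names are assumptions, not the authors' evaluation code.

```python
from collections import defaultdict


def per_task_accuracy(records):
    """records: iterable of (task, is_correct) pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for task, is_correct in records:
        total[task] += 1
        correct[task] += int(is_correct)
    return {task: correct[task] / total[task] for task in total}


def macro_accuracy(records):
    """Average of per-task accuracies: every task counts equally."""
    accuracies = per_task_accuracy(records)
    return sum(accuracies.values()) / len(accuracies)


def micro_accuracy(records):
    """Pooled accuracy: tasks with more QA pairs dominate the score."""
    records = list(records)
    return sum(int(ok) for _, ok in records) / len(records)
```

With a task that has many QA pairs and another that has few, the two averages can diverge noticeably, which is why the per-task average is the fairer summary when counts are imbalanced.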

Dataset Construction and Annotation Protocol

AgroBench is constructed from a curated pool of ~50,000 images, with a strong emphasis on real-world farm conditions. Images are sourced from public datasets and expert-licensed collections, with laboratory images used only when field data is unavailable. The final dataset contains 4,342 QA pairs, each requiring visual input for correct resolution.

Annotation is performed exclusively by domain experts, with strict guidelines prohibiting the use of LLM-generated knowledge for QA creation. This process ensures that the benchmark reflects authentic agricultural expertise and avoids the propagation of synthetic or internet-derived errors.

Evaluation of Vision-Language Models

AgroBench is used to evaluate a suite of state-of-the-art VLMs, including both closed-source (GPT-4o, Gemini 1.5-Pro/Flash) and open-source (QwenVLM, LLaVA-Next, CogVLM, EMU2Chat) models. Human performance is also measured as a reference, using a cohort of agriculture graduates.

Main Results

- Closed-source VLMs (e.g., GPT-4o, Gemini 1.5-Pro) consistently outperform open-source models across all tasks, with GPT-4o achieving the highest overall accuracy.

- Open-source VLMs (notably QwenVLM-72B) approach closed-source performance in some tasks but lag significantly in fine-grained identification, especially weed identification.

- Weed Identification (WID) is the most challenging task; most open-source models perform at or near random, and even the best closed-source model (Gemini 1.5-Pro) achieves only 55.17% accuracy.

- Disease Management (DMN) and Crop Management (CMN) tasks see higher model performance, indicating that VLMs are more adept at contextual reasoning than at fine-grained visual discrimination.
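To make "at or near random" concrete, the sketch below computes the chance accuracy for a multiple-choice question and a simple z-score against it, using the normal approximation to the binomial. The number of options per question and the item counts are hypothetical inputs; the summary does not state them.

```python
import math


def chance_accuracy(num_options: int) -> float:
    """Expected accuracy of uniform random guessing."""
    return 1.0 / num_options


def z_score_vs_chance(num_correct: int, num_items: int, num_options: int) -> float:
    """How many standard errors the observed accuracy lies above chance.

    Uses the normal approximation to the binomial, which is reasonable
    only when num_items is fairly large.
    """
    p0 = chance_accuracy(num_options)
    standard_error = math.sqrt(p0 * (1.0 - p0) / num_items)
    return (num_correct / num_items - p0) / standard_error
```

A z-score near zero means the model is statistically indistinguishable from guessing on that task; a large positive value means it is clearly above chance even if the absolute accuracy looks low.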

Ablation Studies and Analysis

Visual vs. Textual Input

Ablation experiments with text-only input confirm that visual information is essential for most tasks. However, models can sometimes infer plausible answers for management tasks based on common-sense or statistical priors, even when visual cues are absent.
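A text-only ablation of this kind can be sketched as running the same items twice, once with the image and once without, and comparing accuracies. The `predict` callable and the `(prompt, image_path, gold_letter)` item format are placeholders for whatever model interface is in use; they are not part of AgroBench.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical interface: (prompt, image_path or None) -> predicted letter.
Predictor = Callable[[str, Optional[str]], str]
Item = Tuple[str, str, str]  # (prompt, image_path, gold_letter)


def accuracy(predict: Predictor, items: Sequence[Item], use_image: bool) -> float:
    """Accuracy over items, optionally withholding the image."""
    hits = 0
    for prompt, image_path, gold in items:
        prediction = predict(prompt, image_path if use_image else None)
        hits += int(prediction.strip().upper() == gold)
    return hits / len(items)


def visual_gain(predict: Predictor, items: Sequence[Item]) -> float:
    """Accuracy with images minus accuracy with text only."""
    return accuracy(predict, items, True) - accuracy(predict, items, False)
```

A gain close to zero on a task would suggest its questions are answerable from priors alone, which matches the observation above about management tasks.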

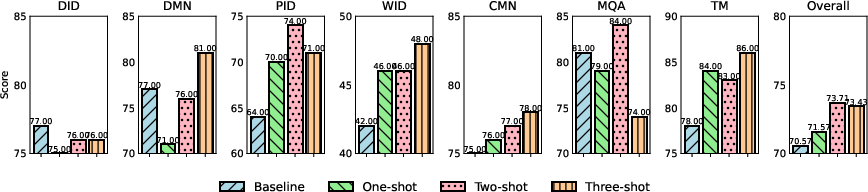

Chain of Thought (CoT) Reasoning

Incorporating CoT exemplars yields marginal improvements in accuracy, particularly for tasks requiring stepwise reasoning (PID, WID, CMN, TM). However, performance saturates quickly, and the benefit is not uniform across all tasks.
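As a rough illustration of prompting with CoT exemplars, the sketch below prepends worked examples to a question and then extracts the final answer letter from the model's free-form output. The `Reasoning:` / `Answer:` layout and the parsing rule are assumptions; the prompts actually used in the paper are not given in this summary.

```python
import re
from typing import Optional, Sequence, Tuple

Exemplar = Tuple[str, str, str]  # (question_block, reasoning, answer_letter)

# Matches "Answer: B" or "Answer: (b)", but not "Answer: The ...".
_ANSWER_RE = re.compile(r"answer:\s*\(?([A-Z])(?![A-Z])", re.IGNORECASE)


def build_cot_prompt(question_block: str, exemplars: Sequence[Exemplar]) -> str:
    """Prepend worked exemplars, then leave the reasoning slot open."""
    parts = [
        f"{ex_question}\nReasoning: {ex_reasoning}\nAnswer: {ex_answer}"
        for ex_question, ex_reasoning, ex_answer in exemplars
    ]
    parts.append(f"{question_block}\nReasoning:")
    return "\n\n".join(parts)


def extract_answer(model_output: str) -> Optional[str]:
    """Return the last answer letter stated in the output, if any."""
    matches = _ANSWER_RE.findall(model_output)
    return matches[-1].upper() if matches else None
```

Taking the last match rather than the first guards against outputs that restate an exemplar's answer before giving their own.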

Figure 4: Results of seven benchmark tasks with Chain of Thought (CoT). CoT provides modest gains, especially in reasoning-intensive tasks.

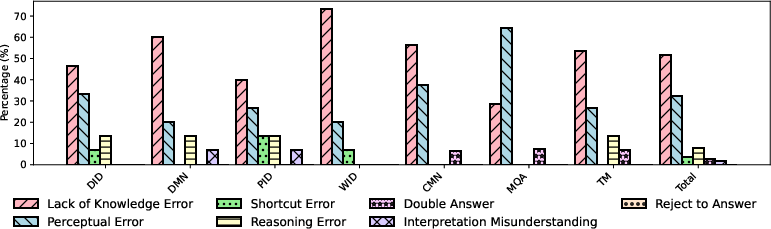

Error Taxonomy

A detailed manual error analysis on GPT-4o outputs reveals the following distribution:

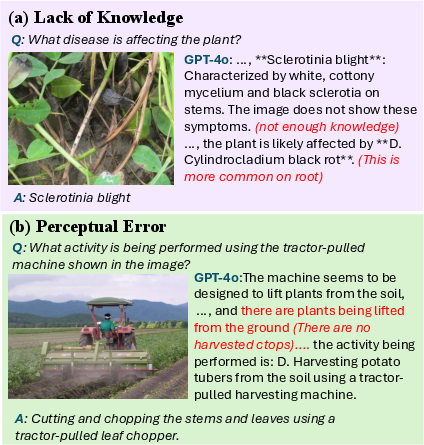

- Lack of Knowledge (51.92%): The dominant error mode, where the model lacks fine-grained agricultural knowledge (e.g., symptomatology, pest morphology, management protocols).

- Perceptual Error (32.69%): Failures in visual attention or recognition, such as missing small objects or misinterpreting scene context.

- Reasoning Error (7.6%): Incorrect logical comparison or stepwise deduction, less prevalent than in general-domain benchmarks.

- Other Errors (7.79%): Includes shortcut reasoning, double answers, misinterpretation, and refusal to answer.
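A distribution like the one above comes from tallying one manually assigned label per analyzed error. The sketch below shows that tally; the label strings are hypothetical and no counts from the paper are assumed.

```python
from collections import Counter
from typing import Dict, Iterable


def error_distribution(labels: Iterable[str]) -> Dict[str, float]:
    """Percentage of errors per category, most frequent first.

    Percentages are rounded to two decimals, so they may not sum to
    exactly 100.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        label: round(100.0 * count / total, 2)
        for label, count in counts.most_common()
    }
```

For example, `error_distribution(["knowledge", "knowledge", "knowledge", "perception"])` gives 75.0 for `"knowledge"` and 25.0 for `"perception"`.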

Figure 5: Error analysis on seven benchmark tasks with GPT-4o, categorizing the main failure modes.

Figure 6: Error examples of GPT-4o, illustrating Lack of Knowledge and Perceptual Error cases.

Implications and Future Directions

AgroBench exposes significant gaps in current VLMs' ability to perform fine-grained agricultural tasks, particularly in weed and disease identification. The dominance of knowledge-based errors suggests that web-scale pretraining is insufficient for specialized domains with long-tail categories and subtle visual distinctions. Perceptual errors further indicate the need for improved visual encoders and attention mechanisms tailored to agricultural imagery.

Practically, AgroBench provides a rigorous testbed for developing and benchmarking VLMs intended for deployment in precision agriculture, automated crop monitoring, and decision support systems. The expert-annotated, multi-task structure enables targeted model improvement and error analysis.

Theoretically, the benchmark highlights the limitations of current VLM architectures in transferring general visual-linguistic knowledge to specialized, high-stakes domains. Future research should focus on:

- Domain-adaptive pretraining and fine-tuning with expert-curated data.

- Integration of structured agricultural knowledge bases.

- Enhanced visual grounding and attention for small or occluded objects.

- Task-specific prompting and reasoning strategies.

Conclusion

AgroBench establishes a new standard for evaluating vision-language models in agriculture, with unprecedented category coverage and expert-driven QA design. Current VLMs, while strong in contextual reasoning, exhibit substantial deficiencies in fine-grained identification and domain-specific knowledge. AgroBench will serve as a critical resource for advancing VLM research and deployment in sustainable, automated agriculture.
