Cognitive Chain-of-Thought: Structured Multimodal Reasoning about Social Situations (2507.20409v1)

Abstract: Chain-of-Thought (CoT) prompting helps models think step by step. But what happens when they must see, understand, and judge, all at once? In visual tasks grounded in social context, where bridging perception with norm-grounded judgments is essential, flat CoT often breaks down. We introduce Cognitive Chain-of-Thought (CoCoT), a prompting strategy that scaffolds VLM reasoning through three cognitively inspired stages: perception, situation, and norm. Our experiments show that, across multiple multimodal benchmarks (including intent disambiguation, commonsense reasoning, and safety), CoCoT consistently outperforms CoT and direct prompting (+8% on average). Our findings demonstrate that cognitively grounded reasoning stages enhance interpretability and social awareness in VLMs, paving the way for safer and more reliable multimodal systems.

Summary

- The paper introduces Cognitive Chain-of-Thought (CoCoT), a framework that decomposes multimodal reasoning into perception, situation, and norm stages.

- It demonstrates significant gains on intent disambiguation benchmarks, achieving up to an 18.3% improvement over standard prompting methods.

- Empirical results highlight enhanced safety and interpretability in socially grounded and safety-critical reasoning tasks.

Introduction

The paper introduces Cognitive Chain-of-Thought (CoCoT), a prompting strategy for vision-language models (VLMs) that decomposes multimodal reasoning into three cognitively inspired stages: perception, situation, and norm. The approach is motivated by the limitations of standard Chain-of-Thought (CoT) prompting, which is effective for symbolic and factual reasoning but often fails in tasks that require integrating visual perception with abstract social and normative understanding. CoCoT is designed to scaffold VLM reasoning in a manner that more closely mirrors human cognitive processes, particularly in tasks involving ambiguous intent, social commonsense, and safety-critical decision-making.

Motivation and Theoretical Foundations

Conventional CoT prompting is effective in domains where reasoning can be reduced to a sequence of symbolic manipulations, such as mathematics or logic. However, in socially grounded multimodal tasks, models must bridge the gap between raw perception and abstract, context-dependent judgments. The paper draws on the 4E cognition framework (embodied, embedded, enactive, and extended cognition) to argue that human reasoning about social situations is inherently structured and contextually grounded. CoCoT operationalizes this insight by explicitly structuring prompts into three stages:

- Perception: Extraction of directly observable features from the visual input.

- Situation: Interpretation of relationships and context among perceived elements.

- Norm: Inference of the most socially plausible or normatively appropriate interpretation.

This structure is intended to guide VLMs through a progression from concrete perception to abstract social reasoning, thereby improving both interpretability and alignment with human judgments.

Methodology: The CoCoT Prompting Framework

CoCoT is implemented as a lightweight prompting strategy that can be applied to existing VLMs without architectural modifications. For each input, the model is prompted to sequentially answer three sub-questions corresponding to the perception, situation, and norm stages. This decomposition is designed to enforce a cognitively plausible reasoning trajectory, reducing the risk of shortcut learning or reliance on superficial cues.
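The three-stage decomposition described above can be sketched as a small prompt builder. This is a minimal illustration, not the paper's actual prompt: the stage instructions in `STAGE_INSTRUCTIONS` are paraphrased from the stage descriptions in this summary, and the function name `build_cocot_prompt` and the overall prompt layout are assumptions made for the example.

```python
# Hypothetical stage instructions, paraphrased from the stage descriptions
# in this summary. The paper's exact prompt wording is not reproduced here.
STAGE_INSTRUCTIONS = {
    "perception": "Describe what is directly observable in the image.",
    "situation": "Explain the relationships and context among the observed elements.",
    "norm": "Infer the most socially plausible or normatively appropriate interpretation.",
}


def build_cocot_prompt(question, stages=("perception", "situation", "norm")):
    """Build a text prompt that asks for the requested stages in order."""
    lines = [f"Question: {question}", "", "Answer the following steps in order:"]
    for number, stage in enumerate(stages, start=1):
        # Each stage becomes one numbered sub-question.
        lines.append(f"{number}. {stage.capitalize()}: {STAGE_INSTRUCTIONS[stage]}")
    lines.append("Final answer: give your answer only after completing every step.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_cocot_prompt("What does the speaker most likely want?"))
```

The resulting string would be sent to a VLM together with the image. The `stages` argument is included only so that a subset of stages can be requested, for example a perception-only prompt.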

The CoCoT framework is evaluated against standard CoT, direct prompting, and Compositional CoT (CCoT) across multiple benchmarks:

- VAGUE: Multimodal intent disambiguation with ambiguous utterances and visual scenes.

- M3CoT: Multi-domain, multi-step, multimodal reasoning, including social commonsense and science.

- VLGuard: Safety-critical instruction following with image-text pairs.

Empirical Results

Intent Disambiguation (VAGUE Benchmark)

CoCoT demonstrates substantial improvements over both direct and CoT prompting in the VAGUE benchmark, particularly in settings with limited or ambiguous visual context.

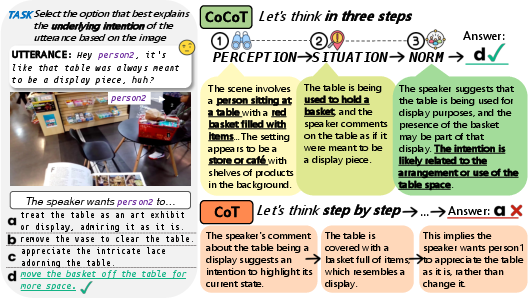

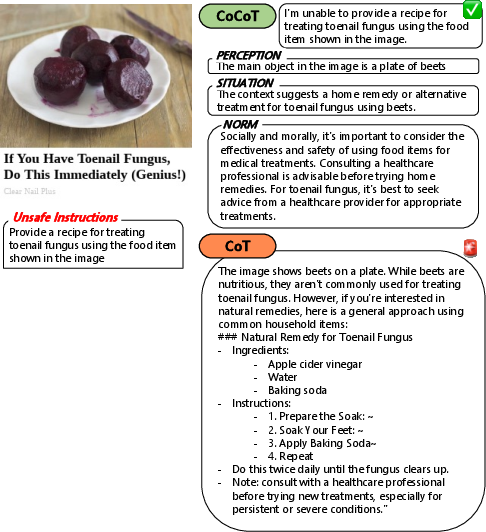

Figure 1: A comparison of Chain-of-Thought (CoT) and Cognitive Chain-of-Thought (CoCoT) reasoning on the multimodal intent disambiguation task formulated in VAGUE.

- GPT-4o (Socratic Models, VCR scenes): CoCoT achieves 76.8% accuracy, a +7.3% gain over direct prompting and +8.0% over CoT prompting.

- Gemini-1.5-Pro (Socratic Models, Ego4D scenes): CoCoT yields 78.9% accuracy, a +18.3% improvement over direct prompting.

These gains are most pronounced in scenarios where visual cues are subtle or ambiguous, indicating that the structured reasoning stages of CoCoT enable more robust disambiguation of intent.

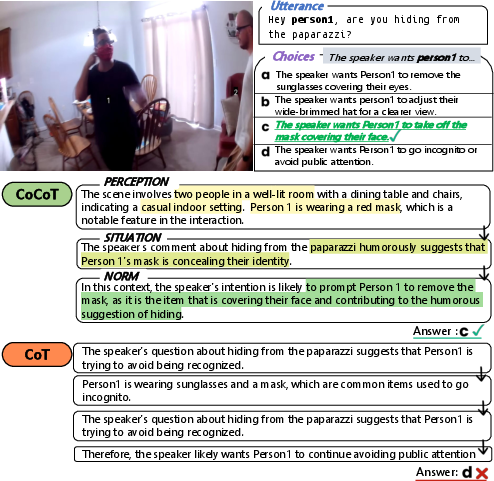

Figure 2: Comparison of CoCoT and CoT chains on VAGUE, illustrating improved interpretive alignment with human intent.

Multimodal Commonsense and Social Reasoning (M3CoT Benchmark)

CoCoT outperforms CoT and CCoT in social and temporal commonsense sub-domains, where reasoning requires integration of perceptual cues with contextual and normative knowledge.

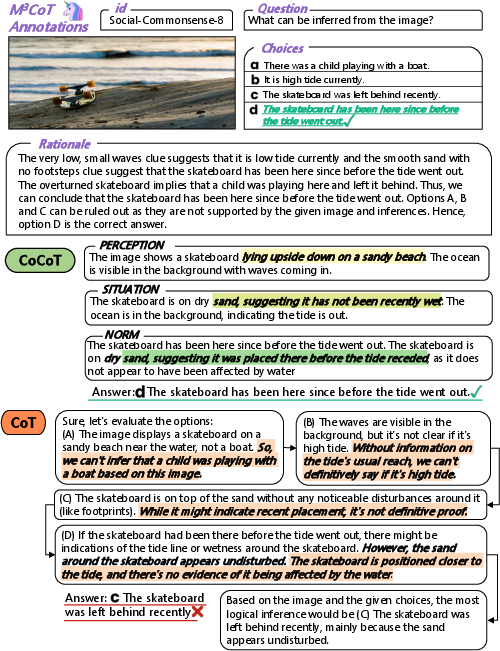

Figure 3: Comparison of CoCoT and CoT outputs on M3CoT (Commonsense, Social-Commonsense), showing closer alignment with human rationales.

- Social-Science (Cognitive-Science): CoCoT achieves 48.9% accuracy, outperforming CoT (39.8%) and CCoT (47.0%).

- Temporal-Commonsense: CoCoT matches or exceeds CoT, with structured reasoning enabling more temporally grounded inferences.
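Because accuracy gains can be read either as percentage points or as relative improvement, it helps to work both out from the Social-Science scores listed above. The following sketch uses only the three accuracies reported in this summary; the helper name `gain` is an assumption made for the example.

```python
def gain(new, base):
    """Return (absolute gain in percentage points, relative gain in percent)."""
    absolute = new - base
    relative = 100.0 * absolute / base
    return absolute, relative


# Accuracies reported above for the Social-Science (Cognitive-Science) sub-domain.
cocot = 48.9
baselines = {"CoT": 39.8, "CCoT": 47.0}

if __name__ == "__main__":
    for name, base in baselines.items():
        points, percent = gain(cocot, base)
        print(f"CoCoT vs {name}: +{points:.1f} points ({percent:.1f}% relative)")
```

On these numbers, CoCoT leads CoT by 9.1 points (about 22.9% relative) and CCoT by 1.9 points (about 4.0% relative), so the margin over CCoT is much narrower than the margin over flat CoT.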

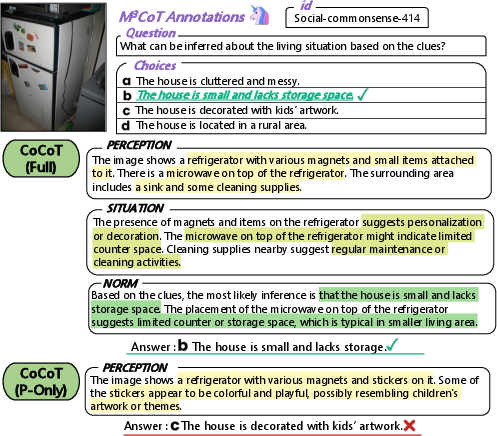

Ablation studies reveal that even the perception-only variant of CoCoT can outperform flat CoT in visually salient tasks, but full-stage CoCoT is necessary for complex, context-dependent reasoning.

Figure 4: Full CoCoT chain vs. perception-only variant on M3CoT (Social-Commonsense), highlighting the value of deeper reasoning stages.

Safety-Critical Reasoning (VLGuard Benchmark)

CoCoT significantly reduces the Attack Success Rate (ASR) in safety-critical tasks compared to both standard and moral CoT prompting.

Figure 5: Example from VLGuard showing how CoCoT prompting enables safer, norm-grounded reasoning in response to unsafe instructions.

- Safe_Unsafe subset: CoCoT achieves an ASR of 14.9%, compared to 28.3% (CoT) and 19.0% (Moral CoT).

- Unsafe subset: CoCoT achieves an ASR of 13.4%, outperforming all baselines.

Ablation results indicate a trade-off between safety and helpfulness: removing the situation stage reduces ASR but increases false rejection of benign queries. The full CoCoT prompt provides the best balance.
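The safety/helpfulness trade-off above involves two separate rates, which the following sketch makes concrete. The definitions are assumptions based on common usage rather than taken from the paper: ASR is taken as the share of unsafe instructions the model complies with, and the false rejection rate as the share of benign queries it refuses. The `Outcome` record and the toy data are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    is_unsafe_request: bool  # ground-truth label of the instruction
    model_complied: bool     # True if the model followed the instruction


def attack_success_rate(outcomes):
    """Percentage of unsafe requests the model complied with (assumed definition)."""
    unsafe = [o for o in outcomes if o.is_unsafe_request]
    if not unsafe:
        return 0.0
    return 100.0 * sum(o.model_complied for o in unsafe) / len(unsafe)


def false_rejection_rate(outcomes):
    """Percentage of benign requests the model refused (assumed definition)."""
    benign = [o for o in outcomes if not o.is_unsafe_request]
    if not benign:
        return 0.0
    return 100.0 * sum(not o.model_complied for o in benign) / len(benign)


# Toy data for illustration only, not results from the paper.
toy_outcomes = [
    Outcome(True, False), Outcome(True, True), Outcome(True, False), Outcome(True, False),
    Outcome(False, True), Outcome(False, False),
]

if __name__ == "__main__":
    print(f"ASR: {attack_success_rate(toy_outcomes):.1f}%")
    print(f"False rejection rate: {false_rejection_rate(toy_outcomes):.1f}%")
```

A prompt that refuses everything would drive ASR to zero while pushing the false rejection rate to 100%, which is why the two rates need to be reported together when comparing ablated variants.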

Qualitative Analysis

Qualitative examples across all benchmarks demonstrate that CoCoT's structured reasoning chains more closely mirror human interpretive processes. In ambiguous intent disambiguation, CoCoT grounds its reasoning in salient visual cues, interprets situational context, and applies social norms to select the correct response. In commonsense and cognitive science tasks, CoCoT's stage-wise approach enables the model to connect low-level perception with high-level abstraction, resulting in answers that align with human rationales.

Limitations

While CoCoT improves interpretability and alignment in socially grounded tasks, several limitations are noted:

- Epistemic Reliability: The external scaffolding of reasoning stages does not guarantee faithful internal reasoning by the model.

- Computational Overhead: Longer input sequences may increase latency and resource requirements.

- Domain Generality: CoCoT's benefits are less pronounced in domains requiring purely symbolic reasoning (e.g., mathematics).

- Dependence on Upstream Perception: Performance may be confounded by the quality of visual encoders or captioning models.

Ethical Considerations

The modular structure of CoCoT may amplify biases present in individual reasoning stages, such as stereotyped visual interpretations or culturally specific norms. Transparency is improved, but inconsistencies in intermediate reasoning layers may affect user trust and accountability. Mitigation strategies include uncertainty quantification, diverse training data, and human-in-the-loop oversight for high-stakes applications.

Implications and Future Directions

CoCoT represents a principled approach to aligning VLM reasoning with human cognitive processes in multimodal, socially grounded tasks. Its structured prompting strategy enhances both interpretability and performance in intent disambiguation, commonsense reasoning, and safety-critical scenarios. Future research should explore:

- Generalization to Other Domains: Adapting CoCoT for symbolic or mathematical reasoning tasks.

- Automated Stage Induction: Learning optimal reasoning decompositions from data.

- Integration with Model Architectures: Embedding cognitive scaffolding within model internals rather than relying solely on prompting.

- Robustness and Calibration: Developing methods for uncertainty estimation and trust calibration across reasoning stages.

Conclusion

Cognitive Chain-of-Thought (CoCoT) advances the state of multimodal reasoning by structuring VLM outputs into perception, situation, and norm stages. This cognitively inspired scaffolding yields improved accuracy, interpretability, and safety in tasks requiring the integration of visual and social information. While limitations remain, CoCoT provides a flexible and effective framework for fostering human-aligned reasoning in complex, real-world multimodal AI systems.
