'{"REASONING": ["The DOCUMENT indicates that the Arrêt de Mérindol was issued on January 1, 1545.", "The DOCUMENT also mentions that several villages were devastated, but it does not specify the exact timing of the devastation in relation to the Arrêt de Mérindol.", "The QUESTION asks what happened second between the Arrêt de Mérindol and the devastation of villages.", "The ANSWER provided is the devastation of villages.", "Since the DOCUMENT does not provide the exact timing of the devastation of villages, it cannot be concluded that it happened after the Arrêt de Mérindol."], "SCORE": "FAIL"}', 'rejected': '{"REASONING": ["The DOCUMENT states that the French king, Francis I, issued the "Arrêt de Mérindol" on January 1, 1545, and armed a crusade against the Waldensians of Provence.", "The DOCUMENT also mentions that deaths ranged from hundreds to thousands, and several villages were devastated.", "The QUESTION asks what happened second: Arrêt de Mérindol or devastation of villages.", "The ANSWER provided is Arrêt de Mérindol.", "Based on the DOCUMENT, the Arrêt de Mérindol occurred before the devastation of villages."], "SCORE": "PASS"}'} \ & \multirow{15}{}{ZH} & {'input': 'ç»å®ä¸åâææ¡£âåâæè¦âï¼ä½ å¿ é¡»åæææä¾çâæè¦âï¼å¹¶ç¡®å®å®æ¯å¦å¿ å®äºâææ¡£âçå 容ã\textbackslash{}n âæè¦âä¸å¾æä¾è¶ åºâææ¡£âä¸æä¾çä¸ä¸æçæ°ä¿¡æ¯ãâæè¦âä¹ä¸å¾ä¸âææ¡£âä¸æä¾çä¿¡æ¯ç¸çç¾ãä¸¥æ ¼æç §ä»¥ä¸æ ¼å¼è¾åºä½ çæç»å¤æï¼å¦æâæè¦âå¿ å®äºâææ¡£âï¼å为"éè¿" ï¼å¦ææè¦ä¸å¿ å®äºææ¡£ï¼å为 "失败"ã\textbackslash{}n --\textbackslash{}n ææ¡£:客æ:请é®ä¸æ¨æéå°ä»ä¹é®é¢éè¦æ帮å©æ¨å¤çæè 解å³çå¢?\textbackslash{}nç¨æ·:ææ³é®ä¸ä¸æçå票ä»ä¹æ¶åå¯åæ¥\textbackslash{}n客æ:è¿ä¸ªè®¢åçµåå票已ç»å¼å ·äº\textbackslash{}n客æ:PC端:æç京ä¸â客æ·æå¡âæçå票âå票详æ ä¸è½½å³å¯;APP端:æçâ客æ·æå¡âå票æå¡âå票详æ æ¥çå³å¯\textbackslash{}nç¨æ·:æéè¦çº¸è´¨çä¸ç¥¨\textbackslash{}nç¨æ·:ä½ åç»æåä¸ä¸æç订åï¼æç»ä½ éä¸ä¸\textbackslash{}n客æ:[订åç¼å·]æ¯è¿ä¸ªè®¢åå\textbackslash{}nç¨æ·:ä¸æ¯\textbackslash{}nç¨æ·:[订åç¼å·]\textbackslash{}nç¨æ·:æ¯è¿ä¸ª\textbackslash{}n客æ:[订åç¼å·]å票çè¿åå·ï¼å·²ç»å¨é 
éä¸äº\textbackslash{}nç¨æ·:æè½æ¥ä¸ä¸å°åªäºå?\textbackslash{}n客æ:[ç«ç¹]æ¨ç订åå¨äº¬ä¸[ç«ç¹]åè´§å®æï¼åå¤éå¾äº¬ä¸[ç«ç¹]\textbackslash{}nç¨æ·:å¯ï¼å¥½ç\textbackslash{}nç¨æ·:æ天è½å°å?\textbackslash{}n客æ:妹åä¹æ²¡æ³ä¿è¯ç\textbackslash{}nç¨æ·:å¯ï¼å¥½çï¼[å§å]\textbackslash{}n客æ:亲亲ï¼å®¢æ°äºï¼å¦¹ååºè¯¥åç\textbackslash{}n客æ:亲ç±æ»´ï¼è¯·é®è¿æå ¶ä»éè¦å¸®å©çä¹?\textbackslash{}n客æ:å¦æ没æçè¯ï¼è¿è¯·æ¨è®°å¾ç»[å§å]è¯ä»·åï¼åè´¢å°æç¹ä¸ç¹å¦\textbackslash{}n \textbackslash{}n--\textbackslash{}n æè¦:ç¨æ·è¯¢é®èªå·±çå票ä»ä¹æ¶é´è½å¤å¯åæ¥ï¼å¹¶è¯¢é®æ¯å¦æ天è½å¤å°è¾¾ã客æåçç¨æ·çå票è¿åå·²ç»å¨äº¬ä¸[ç«ç¹]åè´§å®æï¼åå¤éå¾äº¬ä¸[ç«ç¹]ï¼ä½æ æ³ç»ç¨æ·ä¿è¯æ¯æ天å°è´§ã\textbackslash{}n --\textbackslash{}nä½ çè¾åºåºè¯¥æ¯ JSON æ ¼å¼çï¼é®æ¯"æ¨çè¿ç¨"å"å¤æ"ï¼{"æ¨çè¿ç¨":<ä½ å¦ä½æ¨çå¾å°å¤æç»æçæ¥éª¤ç¹>, "å¤æ":<ä½ çæç»å¤æ>}', 'chosen': '{"æ¨çè¿ç¨": ["ç¨æ·è¯¢é®å票ä»ä¹æ¶åå¯åæ¥ï¼ææ¡£ä¸å®¢æåççµåå票已ç»å¼å ·ï¼å¹¶æä¾äºä¸è½½æ¹å¼ã", "客ææ¯å¦ä¿è¯å票æ天å°ï¼ææ¡£ä¸å®¢æ表示æ æ³ä¿è¯ã", "æè¦ç¼ºå°ç¨æ·éè¦çº¸è´¨çä¸ç¥¨ä»¥å客ææä¾è®¢åç¼å·ä¿¡æ¯çéè¦ä¿¡æ¯ã", "æ¾ç¤ºæè¦ä¸å¤å¿ å®ã"], "å¤æ": "失败"}', 'rejected': '{"æ¨çè¿ç¨": ["ç¨æ·è¯¢é®å票ä»ä¹æ¶åå¯åæ¥ï¼ææ¡£ä¸å®¢æåççµåå票已ç»å¼å ·ï¼å¹¶æä¾äºä¸è½½æ¹å¼ã", "ç¨æ·è¯¢é®æ¯å¦æ天è½å°ï¼ææ¡£ä¸å®¢æ表示æ æ³ä¿è¯ã", "æè¦ä¸æå°çå 容ä¸ææ¡£ä¸ç对è¯åŸºæ¬ä¸è´ï¼å¹¶ä¸å åã", "æè¦ç¼ºå°ç¨æ·éè¦çº¸è´¨çä¸ç¥¨ä»¥å客ææä¾è®¢åç¼å·ä¿¡æ¯çéè¦ä¿¡æ¯ã", "æ¾ç¤ºæè¦ä¸å¤å¿ å®ã"], "å¤æ": "失败"}'} \ \ \bottomrule \end{tabular} } \caption{Examples of training dataset.} \label{tab:train_example} \end{table} \end{CJK*}

\subsection{Two-Stage Training Process}
In the SFT stage, we use a learning rate of 1e-5 and a batch size of 4, and train for 3 epochs. In the DPO stage, we set $\beta = 0.1$, use a learning rate of 5e-7 and a batch size of 4, and train for 3 epochs. For the LoRA configuration, we set r=16, LoRA\_alpha=32, and LoRA\_dropout=0.05, and fine-tune only the query, key, value, and output projection matrices (Q, K, V, and O).
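The hyperparameters above can be collected in one place, as in the following minimal sketch. The dictionary layout, the key names, and the `target_modules` entries are illustrative assumptions (the module names follow a common naming scheme for attention projections and may differ between model implementations); only the numeric values come from the text. The helper `lora_scaling` is hypothetical and simply computes the standard LoRA scaling factor $\alpha / r$.

```python
# Hyperparameters as reported in the text. The dictionary layout and key
# names are illustrative assumptions, not the authors' configuration format.
SFT_CONFIG = {"learning_rate": 1e-5, "batch_size": 4, "num_epochs": 3}
DPO_CONFIG = {"beta": 0.1, "learning_rate": 5e-7, "batch_size": 4, "num_epochs": 3}
LORA_CONFIG = {
    "r": 16,
    "lora_alpha": 32,
    "lora_dropout": 0.05,
    # Assumed module names for the Q, K, V, and O projections; check the
    # actual parameter names of the model being fine-tuned.
    "target_modules": ["q_proj", "k_proj", "v_proj", "o_proj"],
}


def lora_scaling(config: dict) -> float:
    """Standard LoRA scaling factor alpha / r applied to the low-rank update."""
    return config["lora_alpha"] / config["r"]


if __name__ == "__main__":
    print(lora_scaling(LORA_CONFIG))
```

With the reported values, the low-rank update is scaled by $32 / 16 = 2$.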

\subsection{Inference Details}
For RAG hallucination detection inference, we use the same prompt templates as in training. We set temperature=0.01 and top\_p=0.1. Because the model's output is in JSON format, we parse it with regular expressions. Computation is performed on a single NVIDIA A100-80G GPU.
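One possible way to parse the JSON-formatted output with regular expressions is sketched below. The field name `SCORE` and the labels `PASS` and `FAIL` follow the English training examples; the function name `parse_verdict`, the exact pattern, and the choice to try strict JSON decoding before falling back to the pattern are assumptions made for illustration, not a description of the authors' parser.

```python
import json
import re

# Assumed pattern for the verdict field, based on the English training examples.
SCORE_PATTERN = re.compile(r'"SCORE"\s*:\s*"(PASS|FAIL)"')


def parse_verdict(output: str):
    """Return "PASS", "FAIL", or None if no verdict can be recovered."""
    # First try strict JSON decoding, which succeeds on well-formed outputs.
    try:
        parsed = json.loads(output)
        score = parsed.get("SCORE") if isinstance(parsed, dict) else None
        if score in ("PASS", "FAIL"):
            return score
    except json.JSONDecodeError:
        pass
    # Fall back to a regular expression, which still works when the reasoning
    # text contains unescaped quotes that make the output invalid JSON.
    match = SCORE_PATTERN.search(output)
    return match.group(1) if match else None


if __name__ == "__main__":
    print(parse_verdict('{"REASONING": ["step"], "SCORE": "FAIL"}'))
```

The fallback matters because generated reasoning steps may quote phrases from the document without escaping them, in which case a strict JSON decoder rejects the whole output even though the verdict itself is intact.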

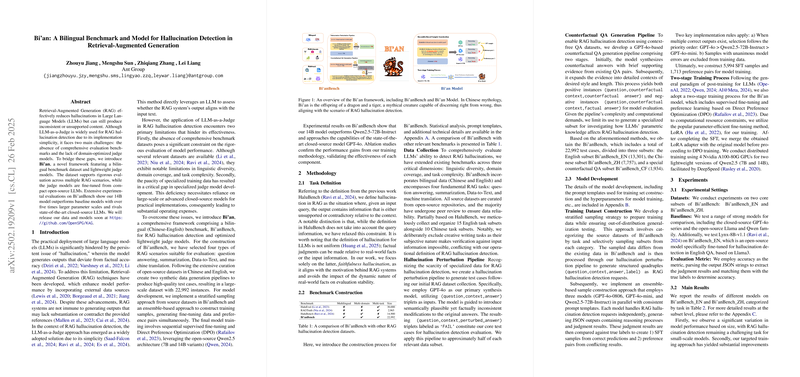

\section{Experiment Results}
\label{app:exp}
Table \ref{tab:exp_en} and Table \ref{tab:exp_zh} present the detailed experimental results for Bi'anBench\_EN and Bi'anBench\_ZH, respectively.

\begin{table*}[]
\scalebox{0.75}{
\begin{tabular}{cccccccccc}
Model & \multicolumn{4}{c}{Bi'anBench\_EN} & & & & & \\ \cline{2-5}
 & QA & Summarization & Data-to-Text & Machine Translation & Avg. & & & & \\ \hline
GPT-4o-0806 & 86.6 & 75.5 & 85.6 & 86.4 & 84.8 & & & & \\
HaluEval\_qa & 83.8 & - & - & - & - & & & & \\
RAGTruth\_qa & 86.6 & - & - & - & - & & & & \\
FinanceBench & 86.3 & - & - & - & - & & & & \\
DROP & 86.5 & - & - & - & - & & & & \\
CovidQA & 86.6 & - & - & - & - & & & & \\
PubMedQA & 89.0 & - & - & - & - & & & & \\
ASQA & 86.3 & - & - & - & - & & & & \\
IfQA & 88.5 & - & - & - & - & & & & \\
FIB & - & 75.5 & - & - & - & & & & \\
HaluEval\_sum & - & 75.5 & - & - & - & & & & \\
WebNLG & - & - & 85.6 & - & - & & & & \\
RAGTruth\_d2t & - & - & 85.5 & - & - & & & & \\
PDC & - & - & - & 86.5 & - & & & & \\
WMT21 & - & - & - & 86.4 & - & & & & \\ \hline
GPT-4o-mini & 82.9 & 58.9 & 82.3 & 79.6 & 78.9 & & & & \\
HaluEval\_qa & 78.2 & - & - & - & - & & & & \\
RAGTruth\_qa & 84.2 & - & - & - & - & & & & \\
FinanceBench & 76.5 & - & - & - & - & & & & \\
DROP & 85.5 & - & - & - & - & & & & \\
CovidQA & 82.1 & - & - & - & - & & & & \\
PubMedQA & 84.3 & - & - & - & - & & & & \\
ASQA & 83.0 & - & - & - & - & & & & \\
IfQA & 84.4 & - & - & - & - & & & & \\
FIB & - & 59.6 & - & - & - & & & & \\
HaluEval\_sum & - & 58.3 & - & - & - & & & & \\
WebNLG & - & - & 82.3 & - & - & & & & \\
RAGTruth\_d2t & - & - & 82.3 & - & - & & & & \\
PDC & - & - & - & 80.0 & - & & & & \\
WMT21 & - & - & - & 79.2 & - & & & & \\ \hline
Llama3.1-8B-Instruct & 72.3 & 60.2 & 62.6 & 68.3 & 68.6 & & & & \\
HaluEval\_qa & 71.6 & - & - & - & - & & & & \\
RAGTruth\_qa & 73.3 & - & - & - & - & & & & \\
FinanceBench & 70.0 & - & - & - & - & & & & \\
DROP & 74.1 & - & - & - & - & & & & \\
CovidQA & 72.8 & - & - & - & - & & & & \\
PubMedQA & 72.7 & - & - & - & - & & & & \\
ASQA & 72.3 & - & - & - & - & & & & \\
IfQA & 72.3 & - & - & - & - & & & & \\
FIB & - & 60.7 & - & - & - & & & & \\
HaluEval\_sum & - & 59.7 & - & - & - & & & & \\
WebNLG & - & - & 62.6 & - & - & & & & \\
RAGTruth\_d2t & - & - & 62.6 & - & - & & & & \\
PDC & - & - & - & 67.7 & - & & & & \\
WMT21 & - & - & - & 68.9 & - & & & & \\ \hline
Llama3.1-70B-Instruct & 83.2 & 75.2 & 80.9 & 73.3 & 80.3 & & & & \\
HaluEval\_qa & 81.9 & - & - & - & - & & & & \\
RAGTruth\_qa & 85.0 & - & - & - & - & & & & \\
FinanceBench & 81.1 & - & - & - & - & & & & \\
DROP & 83.7 & - & - & - & - & & & & \\
CovidQA & 82.4 & - & - & - & - & & & & \\
PubMedQA & 83.9 & - & - & - & - & & & & \\
ASQA & 83.3 & - & - & - & - & & & & \\
IfQA & 83.6 & - & - & - & - & & & & \\
FIB & - & 75.2 & - & - & - & & & & \\
HaluEval\_sum & - & 75.2 & - & - & - & & & & \\
WebNLG & - & - & 80.9 & - & - & & & & \\
RAGTruth\_d2t & - & - & 80.9 & - & - & & & & \\
PDC & - & - & - & 73.4 & - & & & & \\
WMT21 & - & - & - & 73.2 & - & & & & \\ \hline
Qwen2-7B-Instruct & 64.2 & 56.8 & 66.4 & 74.8 & 64.9 & & & & \\
HaluEval\_qa & 63.5 & - & - & - & - & & & & \\
RAGTruth\_qa & 64.9 & - & - & - & - & & & & \\
FinanceBench & 61.2 & - & - & - & - & & & & \\
DROP & 66.3 & - & - & - & - & & & & \\
CovidQA & 62.6 & - & - & - & - & & & & \\
PubMedQA & 65.5 & - & - & - & - & & & & \\
ASQA & 64.0 & - & - & - & - & & & & \\
IfQA & 64.8 & - & - & - & - & & & & \\
FIB & - & 56.7 & - & - & - & & & & \\
HaluEval\_sum & - & 56.9 & - & - & - & & & & \\
WebNLG & - & - & 66.4 & - & - & & & & \\
RAGTruth\_d2t & - & - & 66.4 & - & - & & & & \\
PDC & - & - & - & 74.9 & - & & & & \\
WMT21 & - & - & - & 74.7 & - & & & & \\ \hline
Qwen2-72B-Instruct & 82.7 & 73.6 & 77.0 & 82.1 & 80.5 & & & & \\
HaluEval\_qa & 81.7 & - & - & - & - & & & & \\
RAGTruth\_qa & 82.9 & - & - & - & - & & & & \\
FinanceBench & 80.7 & - & - & - & - & & & & \\
DROP & 84.1 & - & - & - & - & & & & \\
CovidQA & 82.2 & - & - & - & - & & & & \\
PubMedQA & 83.3 & - & - & - & - & & & & \\
ASQA & 82.5 & - & - & - & - & & & & \\
IfQA & 83.2 & - & - & - & - & & & & \\
FIB & - & 73.7 & - & - & - & & & & \\
HaluEval\_sum & - & 73.4 & - & - & - & & & & \\
WebNLG & - & - & 77.0 & - & - & & & & \\
RAGTruth\_d2t & - & - & 77.1 & - & - & & & & \\
PDC & - & - & - & 82.6 & - & & & & \\
WMT21 & - & - & - & 81.5 & - & & & & \\ \hline
Qwen2.5-7B-Instruct & 71.6 & 66.1 & 72.8 & 80.9 & 72.3 & & & & \\
HaluEval\_qa & 71.1 & - & - & - & - & & & & \\
RAGTruth\_qa & 72.2 & - & - & - & - & & & & \\
FinanceBench & 68.7 & - & - & - & - & & & & \\
DROP & 73.0 & - & - & - & - & & & & \\
CovidQA & 70.1 & - & - & - & - & & & & \\
PubMedQA & 72.5 & - & - & - & - & & & & \\
ASQA & 71.7 & - & - & - & - & & & & \\
IfQA & 72.0 & - & - & - & - & & & & \\
FIB & - & 66.7 & - & - & - & & & & \\
HaluEval\_sum & - & 65.4 & - & - & - & & & & \\
WebNLG & - & - & 72.8 & - & - & & & & \\
RAGTruth\_d2t & - & - & 72.8 & - & - & & & & \\
PDC & - & - & - & 80.6 & - & & & & \\
WMT21 & - & - & - & 81.2 & - & & & & \\ \hline
Qwen2.5-14B-Instruct & 79.8 & 73.1 & 79.6 & 87.2 & 79.8 & & & & \\
HaluEval\_qa & 79.1 & - & - & - & - & & & & \\
RAGTruth\_qa & 80.4 & - & - & - & - & & & & \\
FinanceBench & 76.7 & - & - & - & - & & & & \\
DROP & 81.3 & - & - & - & - & & & & \\
CovidQA & 78.8 & - & - & - & - & & & & \\
PubMedQA & 80.4 & - & - & - & - & & & & \\
ASQA & 79.6 & - & - & - & - & & & & \\
IfQA & 79.5 & - & - & - & - & & & & \\
FIB & - & 73.6 & - & - & - & & & & \\
HaluEval\_sum & - & 72.5 & - & - & - & & & & \\
WebNLG & - & - & 79.6 & - & - & & & & \\
RAGTruth\_d2t & - & - & 79.6 & - & - & & & & \\
PDC & - & - & - & 86.8 & - & & & & \\
WMT21 & - & - & - & 87.6 & - & & & & \\ \hline
Qwen2.5-72B-Instruct & {\ul 85.7} & {\ul 74.7} & 78.7 & 86.6 & 83.3 & & & & \\
HaluEval\_qa & {\ul 84.9} & - & - & - & - & & & & \\
RAGTruth\_qa & {\ul 86.2} & - & - & - & - & & & & \\
FinanceBench & 83.1 & - & - & - & - & & & & \\
DROP & {\ul 86.4} & - & - & - & - & & & & \\
CovidQA & 84.7 & - & - & - & - & & & & \\
PubMedQA & {\ul 86.0} & - & - & - & - & & & & \\
ASQA & {\ul 8