The paper "Is That Your Final Answer? Test-Time Scaling Improves Selective Question Answering" studies how test-time scaling affects selective question answering in large language models (LLMs). The authors criticize the prevalent assumption that a model should always produce an answer regardless of its confidence, an assumption that ignores practical settings where incorrect answers incur costs.

Key Contributions:

- Test-Time Scaling and Confidence Calibration:

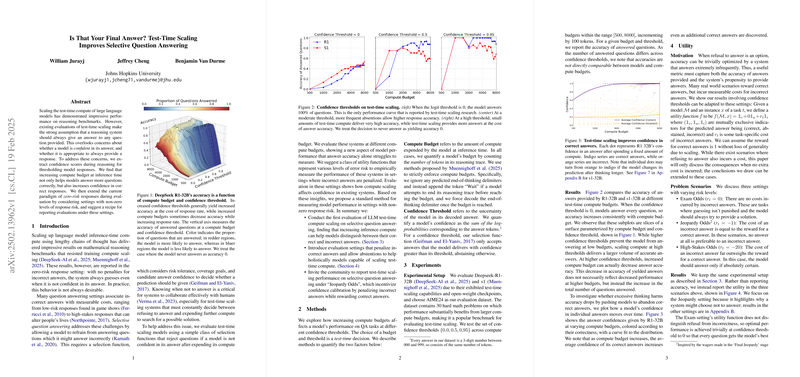

- The paper investigates how increasing the compute budget during inference improves not only the accuracy of models in answering questions but also increases their confidence levels in correct responses. This is crucial in situations where incorrect answers are penalized.

- Selective Question Answering Framework: The authors propose a method to integrate confidence scores during inference, allowing models to abstain from answering when uncertain. This selective approach weighs the risk associated with a response to decide when the model should refuse to answer.

- Utility Functions and Evaluation Settings: A class of utility functions measures system performance under three risk settings (Exam Odds, Jeopardy Odds, and High-Stakes Odds), which differ in how costly an incorrect answer is. This allows a more nuanced evaluation of a model's capabilities than accuracy alone.
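The three risk settings can be sketched as one utility function parameterized by the penalty for a wrong answer. This is a minimal illustration, not the authors' code: the reward of 1 for a correct answer, the score of 0 for abstaining, and the specific penalty values in `ODDS` are assumptions, since this summary names the settings but does not state their values.

```python
from typing import Iterable, Optional

# Assumed penalty for an incorrect answer in each setting (illustrative values).
ODDS = {
    "exam": 0.0,          # wrong answers cost nothing
    "jeopardy": -1.0,     # a wrong answer costs as much as a right one earns
    "high_stakes": -20.0  # a wrong answer far outweighs a right one
}


def utility(is_correct: Optional[bool], wrong_penalty: float) -> float:
    """Score one question; `None` means the model abstained."""
    if is_correct is None:
        return 0.0  # assumption: abstaining is neither rewarded nor penalized
    return 1.0 if is_correct else wrong_penalty


def total_utility(outcomes: Iterable[Optional[bool]], wrong_penalty: float) -> float:
    """Sum the per-question utilities over a set of outcomes."""
    return sum(utility(outcome, wrong_penalty) for outcome in outcomes)


if __name__ == "__main__":
    outcomes = [True, False, None, True]  # two correct, one wrong, one abstention
    for name, penalty in ODDS.items():
        print(name, total_utility(outcomes, penalty))
```

Under these assumed values, the same set of outcomes scores very differently across settings, which is why accuracy alone cannot distinguish a model that guesses from one that abstains.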

Methodological Details:

- Compute Budgets: Defined as the number of tokens the model spends during inference. Models are evaluated by controlling the reasoning length to see how the budget affects performance; when a model would stop early, it is made to continue reasoning until the predefined budget is reached.

- Confidence Thresholds: A response is accepted only if the model’s confidence exceeds a set threshold; otherwise the model abstains. Confidence is based on the log-probabilities of the decoded answer tokens.
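The confidence threshold described above can be sketched as follows. This is a hedged illustration: the function names are hypothetical, and aggregating the answer-token log-probabilities by summing them (that is, using the joint probability of the answer tokens) is an assumption, since the summary only says that confidence is based on those log-probabilities.

```python
import math
from typing import Optional, Sequence


def answer_confidence(answer_token_logprobs: Sequence[float]) -> float:
    """Assumed aggregation: joint probability of the answer tokens,
    computed as exp(sum of per-token log-probabilities)."""
    return math.exp(sum(answer_token_logprobs))


def selective_answer(
    answer: str,
    answer_token_logprobs: Sequence[float],
    threshold: float,
) -> Optional[str]:
    """Return the answer if confidence is at or above the threshold, else abstain (None)."""
    if answer_confidence(answer_token_logprobs) >= threshold:
        return answer
    return None


if __name__ == "__main__":
    # Hypothetical log-probabilities for a two-token answer.
    logprobs = [math.log(0.9), math.log(0.8)]
    print(answer_confidence(logprobs))             # joint probability, about 0.72
    print(selective_answer("204", logprobs, 0.5))  # answered
    print(selective_answer("204", logprobs, 0.95)) # abstains (None)
```

A threshold of 0 reproduces the always-answer baseline, while raising the threshold trades coverage for a lower risk of wrong answers.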

Experiments and Results:

- The experiments evaluate DeepSeek-R1-32B and s1 models on the AIME24 dataset, chosen because its problems are hard enough to require substantial compute to solve correctly.

- Results indicate that test-time scaling increases confidence in correct answers: with larger compute budgets and higher confidence thresholds, the answers the model does give are both more accurate and more confident.

- Utility analysis shows that, under Jeopardy Odds, higher confidence thresholds and larger compute budgets increase utility, illustrating settings where selective answering outperforms always answering.
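To make the utility comparison concrete, the sketch below sweeps a confidence threshold over a handful of predictions and scores each setting of the threshold. Everything here is illustrative: the `(confidence, is_correct)` pairs are made-up toy values, not results from the paper, and the reward of 1, abstention score of 0, and Jeopardy penalty of -1 are assumptions.

```python
from typing import Dict, Iterable, List, Tuple

# Toy (confidence, is_correct) pairs: invented for illustration, NOT paper results.
TOY_PREDICTIONS: List[Tuple[float, bool]] = [
    (0.98, True),
    (0.95, True),
    (0.90, True),
    (0.60, False),
    (0.55, True),
    (0.40, False),
    (0.30, False),
]


def utility_at_threshold(
    predictions: Iterable[Tuple[float, bool]],
    threshold: float,
    wrong_penalty: float,
) -> float:
    """Total utility when the model answers only at or above `threshold`."""
    total = 0.0
    for confidence, is_correct in predictions:
        if confidence < threshold:
            continue  # abstain: contributes 0 (assumed)
        total += 1.0 if is_correct else wrong_penalty
    return total


def sweep(
    predictions: List[Tuple[float, bool]],
    thresholds: Iterable[float],
    wrong_penalty: float,
) -> Dict[float, float]:
    """Map each threshold to the total utility it yields."""
    return {t: utility_at_threshold(predictions, t, wrong_penalty) for t in thresholds}


if __name__ == "__main__":
    thresholds = [0.0, 0.5, 0.95]
    print("exam    :", sweep(TOY_PREDICTIONS, thresholds, wrong_penalty=0.0))
    print("jeopardy:", sweep(TOY_PREDICTIONS, thresholds, wrong_penalty=-1.0))
```

On this toy data, always answering (threshold 0) is best when wrong answers are free, but a moderate threshold scores higher once wrong answers are penalized. A threshold that is too strict gives up correct answers as well, so the best threshold depends on the risk setting.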

Implications:

- The paper underscores the need for LLMs to incorporate confidence calibration mechanisms so that they can operate in environments where erroneous outputs have tangible costs.

- This research invites the community to adopt similar evaluation approaches that integrate confidence measures to better capture model performance across various risk settings.

Future Directions:

- Incorporating compute cost into the utility function, for example to account for energy consumption, and evaluating models on datasets in other languages to test whether the findings generalize beyond English-centric tasks.

Overall, this work advocates for models that decide whether to answer based on their confidence, a step toward more reliable AI collaboration in human-centric applications.