An Expert Overview of MMVU: Measuring Expert-Level Multi-Discipline Video Understanding

Multimodal Foundation Models (MFMs) have advanced considerably in recent years, yet comprehensive evaluation of expert-level reasoning over video remains largely underexplored. The paper "MMVU: Measuring Expert-Level Multi-Discipline Video Understanding" addresses this gap with MMVU, a benchmark for evaluating MFMs across specialized domains. Its dataset is built to test whether models can understand and reason about videos at an expert level across multiple disciplines.

MMVU differs from existing benchmarks in three main ways. First, it demands domain-specific knowledge and expert-level reasoning that go beyond basic visual perception, so models must interpret specialized, knowledge-intensive content. Second, every example is annotated from scratch by human experts, with rigorous data quality controls to ensure reliability. Third, each example comes with an expert-annotated reasoning rationale and the relevant domain knowledge, which supports in-depth performance analysis and fine-grained error analysis.

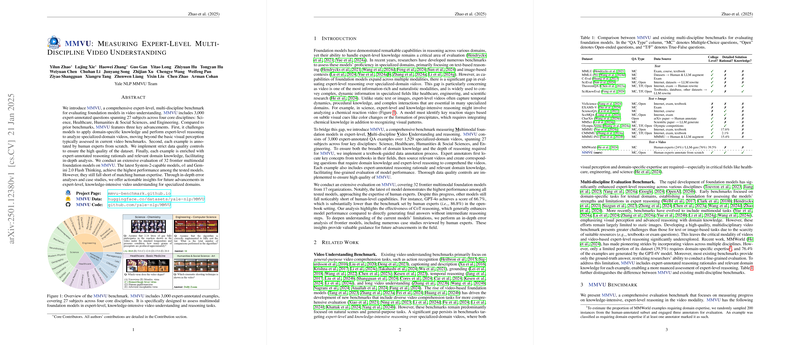

The MMVU benchmark contains 3,000 expert-annotated questions spanning 27 subjects across four core disciplines: Science, Healthcare, Humanities and Social Sciences, and Engineering. The questions are grounded in 1,529 videos covering a diverse range of topics. Under a textbook-guided annotation protocol, expert annotators identify key concepts that are best conveyed through dynamic visuals, curate relevant clips from Creative Commons-licensed videos, and write questions that require integrating domain knowledge with what the video shows. As a result, MMVU requires models to reason about both temporal dynamics and the complex procedural knowledge inherent in video content.
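
To make the dataset composition concrete, the sketch below models one annotated example as a Python record and tallies a collection of them. The field names (`question_id`, `video_id`, `rationale`, `domain_knowledge`, and so on) and the `summarize` helper are hypothetical illustrations, not the official MMVU schema or tooling; they simply mirror the properties described in the overview: each question is tied to a source video, a discipline, and a subject, and carries an expert rationale plus relevant domain knowledge. Applied to the full benchmark, such a tally would be expected to report 3,000 questions, 1,529 videos, and 27 subjects.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical record layout: field names are illustrative, not the official MMVU schema.
@dataclass
class MMVUExample:
    question_id: str
    video_id: str              # several questions can share one source video
    discipline: str            # one of the four core disciplines
    subject: str               # one of the 27 subjects
    question: str
    answer: str
    rationale: str = ""        # expert-written reasoning rationale
    domain_knowledge: list[str] = field(default_factory=list)  # supporting concepts

DISCIPLINES = ("Science", "Healthcare", "Humanities and Social Sciences", "Engineering")

def summarize(examples: list[MMVUExample]) -> dict:
    """Count questions, distinct videos, distinct subjects, and questions per discipline."""
    unknown = {ex.discipline for ex in examples} - set(DISCIPLINES)
    if unknown:
        raise ValueError(f"unexpected discipline(s): {sorted(unknown)}")
    return {
        "questions": len(examples),
        "videos": len({ex.video_id for ex in examples}),
        "subjects": len({ex.subject for ex in examples}),
        "per_discipline": Counter(ex.discipline for ex in examples),
    }

# Toy data only; not drawn from the real dataset.
toy = [
    MMVUExample("q1", "v1", "Science", "Chemistry", "Which reagent is added first?", "B"),
    MMVUExample("q2", "v1", "Science", "Chemistry", "Why does the color change?", "D"),
    MMVUExample("q3", "v2", "Engineering", "Mechanical Engineering", "Which linkage is shown?", "A"),
]
stats = summarize(toy)
print(stats["questions"], stats["videos"], stats["subjects"])  # 3 2 2
```

Counting distinct `video_id` values separately from questions reflects the fact that several questions can draw on the same video, which is why the benchmark's question count exceeds its video count.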

The paper evaluates 32 frontier multimodal foundation models. The System-2-capable models o1 and Gemini 2.0 Flash Thinking achieved the highest performance among them, yet even these systems fall short of human expert performance, underscoring how far MFMs remain from comprehensive video understanding and reasoning. The gap is wider for other models: GPT-4o, for example, scored 66.7% in the open-book setting, 20.1 percentage points below the human benchmark of 86.8%.
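
The headline comparison is a difference of accuracies in percentage points: 86.8 minus 66.7 gives a 20.1-point gap. A minimal sketch of that arithmetic follows, together with a simple exact-match accuracy function. The helpers `accuracy` and `gap_to_human` are hypothetical, and the scorer assumes each answer is a single multiple-choice option letter; it is not the paper's evaluation code.

```python
def accuracy(predictions: dict[str, str], gold: dict[str, str]) -> float:
    """Percent of gold questions answered correctly; a missing prediction counts as wrong.

    Assumes each answer is a single option letter, compared case-insensitively.
    """
    if not gold:
        raise ValueError("gold answer set is empty")
    correct = sum(
        predictions.get(qid, "").strip().upper() == ans.strip().upper()
        for qid, ans in gold.items()
    )
    return 100.0 * correct / len(gold)

def gap_to_human(model_acc: float, human_acc: float) -> float:
    """Percentage-point gap between a human reference score and a model score."""
    return round(human_acc - model_acc, 1)

# Scores quoted in the overview: GPT-4o at 66.7%, human experts at 86.8%.
print(gap_to_human(66.7, 86.8))  # 20.1

# Toy predictions: q4 has no prediction, so 3 of 4 are correct.
preds = {"q1": "A", "q2": "c", "q3": "B"}
gold = {"q1": "A", "q2": "C", "q3": "B", "q4": "D"}
print(accuracy(preds, gold))  # 75.0
```

Rounding the gap to one decimal place matches the precision of the reported scores and hides floating-point noise from the subtraction.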

The paper also examines Chain-of-Thought (CoT) prompting, in which a model reasons step by step before committing to an answer, and finds that it generally improves performance over answering directly. This suggests that future research should refine reasoning strategies so that models make more effective use of multimodal inputs.
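
The difference between direct answering and CoT prompting comes down to the prompt and to how the final answer is recovered from the response. The sketch below assumes a multiple-choice format; the templates `DIRECT_TEMPLATE` and `COT_TEMPLATE` and the helpers `build_prompt` and `extract_choice` are hypothetical and are not the prompts used in the paper.

```python
import re
from typing import Optional

# Hypothetical templates; the paper's actual prompts may differ.
DIRECT_TEMPLATE = (
    "{question}\n{options}\n"
    "Answer with the option letter only."
)
COT_TEMPLATE = (
    "{question}\n{options}\n"
    "Think step by step about what the video shows and the relevant domain knowledge, "
    "then give your final answer on the last line as 'Answer: X', "
    "where X is the option letter."
)

def build_prompt(question: str, options: dict[str, str], cot: bool) -> str:
    """Render a multiple-choice prompt in either CoT or direct-answer style."""
    rendered = "\n".join(f"({letter}) {text}" for letter, text in sorted(options.items()))
    template = COT_TEMPLATE if cot else DIRECT_TEMPLATE
    return template.format(question=question, options=rendered)

def extract_choice(response: str, valid: str = "ABCDE") -> Optional[str]:
    """Return the chosen option letter, or None if no letter can be recovered."""
    # CoT responses: use the last "Answer: X" so letters mentioned mid-reasoning are ignored.
    matches = re.findall(rf"Answer:\s*\(?([{valid}])\)?", response)
    if matches:
        return matches[-1]
    # Direct responses: accept a bare letter such as "B", "(B)" or "B.".
    bare = re.fullmatch(rf"\s*\(?([{valid}])\)?\.?\s*", response)
    return bare.group(1) if bare else None

prompt = build_prompt("Which step is shown first?", {"B": "Heating", "A": "Mixing"}, cot=True)
print(extract_choice("The video first shows stirring, so mixing comes first.\nAnswer: A"))  # A
print(extract_choice("(B)"))  # B
```

Taking the last `Answer:` match lets the model discuss candidate options while reasoning without those mentions being scored; a response with no recoverable letter returns `None` and would typically be counted as incorrect.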

Beyond benchmarking, the insights from evaluating MFMs on MMVU can inform the development of AI systems that approach human expert-level reasoning in specialized fields and that understand complex, dynamic environments more deeply. MMVU's findings point to two promising research directions: making better use of CoT-style reasoning, and integrating diverse data modalities more tightly to achieve more accurate, context-aware reasoning.

Through detailed error analyses and case studies, the authors identify persistent challenges in video-based expert reasoning and offer actionable insights for addressing them. With its fine-grained evaluation framework, MMVU raises the bar for evaluating multimodal models and enables targeted improvements. The paper thus serves both as a measure of current capabilities and as a catalyst for further research on knowledge-intensive video understanding in specialized domains.