Discriminative Fine-tuning of LVLMs: Enhancing Vision-Language Capabilities

Summary

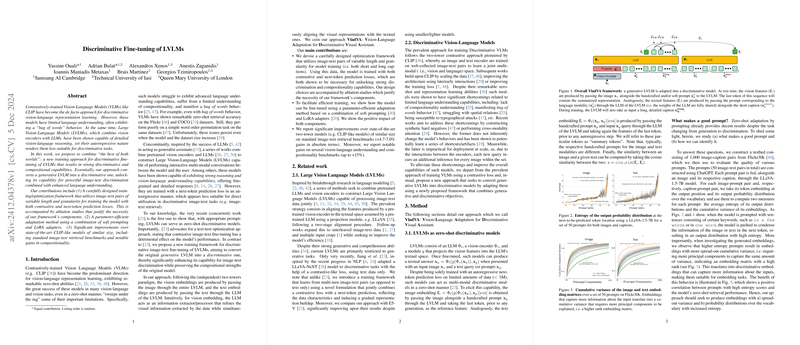

The paper "Discriminative Fine-tuning of LVLMs" examines the limitations of current Vision-Language Models (VLMs) like CLIP. Despite robust zero-shot abilities, these models often exhibit "bag of words" behavior: they lack advanced language comprehension and compositional understanding. Furthermore, when vision encoders are combined with LLMs to form Large Vision-Language Models (LVLMs), the autoregressive nature of the resulting models makes them less suited to discriminative tasks. The paper proposes a methodology that transforms a generative LVLM into a capable discriminative model while preserving its rich compositional capabilities.

Methodology

The paper introduces a training and optimization framework that combines the strengths of contrastive and autoregressive methods. The framework, dubbed VladVA (Vision-Language Adaptation for Discriminative Visual Assistant), trains on image-text pairs of varying length and granularity using both a contrastive loss and a next-token prediction loss. Fine-tuning relies on a parameter-efficient adaptation strategy that combines soft prompting and LoRA adapters.
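To make the two-loss objective concrete, the following is a minimal sketch in plain Python, not the paper's implementation. It assumes a symmetric InfoNCE-style contrastive loss over paired image and text embeddings, a mean cross-entropy next-token loss, and a simple weighted sum of the two. The function names, the temperature of 0.07, and the `ntp_weight` parameter are illustrative assumptions; the summary does not state how the paper weights or schedules the losses.

```python
import math


def log_softmax(row):
    """Numerically stable log-softmax over a list of floats."""
    m = max(row)
    log_z = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_z for x in row]


def l2_normalize(v):
    """Scale a vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE: the i-th image and the i-th text are the positive pair."""
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # sim[i][j] = cosine similarity of image i and text j, divided by temperature
    sim = [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts]
           for i in imgs]
    image_to_text = -sum(log_softmax(sim[i])[i] for i in range(n)) / n
    sim_t = [list(col) for col in zip(*sim)]  # transpose for text-to-image
    text_to_image = -sum(log_softmax(sim_t[j])[j] for j in range(n)) / n
    return 0.5 * (image_to_text + text_to_image)


def next_token_loss(token_logits, target_ids):
    """Mean cross-entropy of each target token under the logits at its position."""
    losses = [-log_softmax(logits)[t]
              for logits, t in zip(token_logits, target_ids)]
    return sum(losses) / len(losses)


def combined_loss(image_embs, text_embs, token_logits, target_ids,
                  ntp_weight=1.0):
    """Assumed combination: contrastive loss plus a weighted next-token loss."""
    return (contrastive_loss(image_embs, text_embs)
            + ntp_weight * next_token_loss(token_logits, target_ids))
```

In this sketch, correctly paired embeddings produce a contrastive loss close to zero and mismatched pairs produce a large one, while the next-token term keeps the generative objective in the total.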

Key Contributions:

- Data Utilization: The framework considers both short and long captions to create a diversified training dataset that helps in overcoming the shortcomings of solely contrastive or autoregressive models.

- Training Strategy: The combination of contrastive loss and next-token prediction loss enables fine-tuning that retains the strengths of the original LVLM while enhancing its discriminative capabilities.

- Parameter-Efficient Adaptation: By leveraging soft prompting and LoRA adapters, the approach maintains efficiency and scalability, crucial for handling large LVLMs.
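The parameter-efficient adaptation in the last point can be illustrated with a small sketch. It follows the standard LoRA formulation (a frozen weight plus a scaled low-rank update, with the up-projection initialized to zero) and the usual form of soft prompting (learnable vectors prepended to the input embeddings). The class and function names, the rank, and the scaling factor are assumptions made for illustration; the summary does not describe how the paper configures or places these components.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank update B @ A."""

    def __init__(self, weight, rank=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen base weight, never updated
        # Trainable down-projection A (rank x d_in), small random init
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)]
                  for _ in range(rank)]
        # Trainable up-projection B (d_out x rank), zero init so the
        # adapted layer starts out identical to the base layer
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)
        delta = matvec(self.b, matvec(self.a, x))
        return [y + self.scale * d for y, d in zip(base, delta)]

    def trainable_params(self):
        """Count only the adapter parameters (A and B), not the frozen weight."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


def prepend_soft_prompt(soft_prompt, token_embeddings):
    """Place learnable prompt vectors in front of the input embeddings."""
    return [list(p) for p in soft_prompt] + [list(t) for t in token_embeddings]
```

For a square layer of width d, the adapter trains 2·d·r values instead of d², which is why low ranks keep fine-tuning cheap for large models.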

Results and Implications

The proposed method improves on state-of-the-art CLIP-like models across standard image-text retrieval benchmarks, with absolute gains ranging from 4.7% to 7.0%. It also advances compositionality benchmarks, with improvements of up to 15% over competing models. The research further shows that, contrary to recent findings, contrastive image-text fine-tuning can benefit LVLMs when it is appropriately incorporated into the training regime.
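Image-text retrieval is commonly scored with Recall@K, and an absolute gain is the plain difference between two such scores. The sketch below assumes that metric for illustration; the summary does not name the exact metric behind the reported numbers, and the similarity values and function names here are toy assumptions, not results from the paper.

```python
def recall_at_k(similarity, k):
    """Fraction of queries whose ground-truth item (same index) is in the top k.

    similarity[i][j] is the score between query i and candidate j.
    """
    hits = 0
    for query_idx, scores in enumerate(similarity):
        ranked = sorted(range(len(scores)), key=lambda j: scores[j],
                        reverse=True)
        if query_idx in ranked[:k]:
            hits += 1
    return hits / len(similarity)


def absolute_gain(new_score, baseline_score):
    """Absolute improvement: difference in score, not a ratio."""
    return new_score - baseline_score


# Toy similarity matrix: query 1 ranks the wrong candidate first.
toy_similarity = [
    [0.9, 0.1, 0.0],
    [0.8, 0.2, 0.1],
    [0.1, 0.2, 0.7],
]
r_at_1 = recall_at_k(toy_similarity, 1)
r_at_2 = recall_at_k(toy_similarity, 2)
```

Under this reading, a model that moves Recall@1 from 0.60 to 0.65 has an absolute gain of 0.05 (five percentage points), even though the relative gain is larger.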

Theoretically, the results suggest that generative and discriminative capabilities can be blended within a single unified LVLM architecture. Practically, the approach could improve applications that require nuanced vision-language understanding, such as complex image retrieval and multimodal question answering.

Future Directions

The paper leaves room for extending the methodology to larger and more varied datasets, incorporating more advanced forms of representation learning, and studying how efficiently the approach scales with model size. The balance between parameter efficiency and model capacity could also be refined so that the adaptation techniques remain practical as model architectures evolve.

In summary, this work represents a significant step towards addressing the limitations of current LVLMs by enhancing their discriminative performance without sacrificing the compositional strengths provided by their generative underpinnings. The proposed framework could serve as a blueprint for future advancements in the integration of LLMs with vision tasks.