A Technical Exploration of Interleaved-Modal Chain-of-Thought

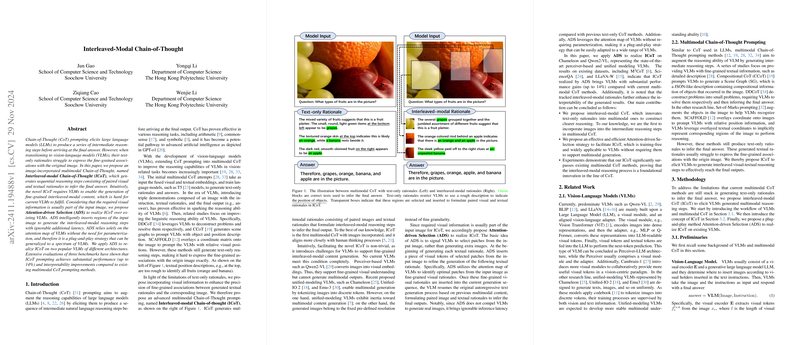

The paper introduces a framework called Interleaved-modal Chain-of-Thought (ICoT), designed to strengthen the reasoning of Vision-Language Models (VLMs) by pairing fine-grained visual information with textual reasoning steps. It targets a limitation of existing multimodal Chain-of-Thought (CoT) methods, whose intermediate rationales are predominantly text-only. The authors argue that weaving visual elements into the reasoning chain brings ICoT closer to how humans reason about images, and that this improves both interpretability and performance on vision-related tasks.

Problem Identification

CoT prompting has proven effective at improving large language model (LLM) performance by eliciting intermediate reasoning steps. Carried over to VLMs, however, it runs into a problem: text-only rationales often fail to capture the fine-grained associations between image regions and text that nuanced image understanding requires. This limits VLMs in domains where visual context contributes substantially to the reasoning process.

Proposed Method: Interleaved-modal Chain-of-Thought (ICoT)

The paper proposes ICoT, which interleaves visual cues directly into a VLM's reasoning process. Its distinguishing feature is the generation of multimodal intermediate rationales, in which visual elements are paired with textual descriptions. The interleaving is performed by the Attention-driven Selection (ADS) strategy, which selects key visual information and inserts it into the reasoning sequence without requiring any change to the model's architecture.
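The control flow described above can be sketched as a decoding loop. This is a minimal sketch under stated assumptions, not the authors' implementation: `next_token` and `select_patches` are hypothetical callables standing in for the VLM and for a selection strategy such as ADS, and treating a newline token as the end of a reasoning step (the point where visual content is inserted) is an assumption made for illustration. The model is only queried, never modified.

```python
from typing import Callable, List, Sequence

# Assumption: a newline marks the end of one textual reasoning step.
STEP_END = "\n"


def interleaved_decode(
    next_token: Callable[[List[object]], str],
    select_patches: Callable[[List[object]], List[object]],
    prompt: Sequence[object],
    max_tokens: int = 64,
    eos: str = "<eos>",
) -> List[object]:
    """Decode text, splicing selected visual tokens in after each reasoning step.

    next_token: hypothetical wrapper returning the model's next text token.
    select_patches: hypothetical selector (e.g. attention-driven) returning
        visual tokens to insert; the model's weights are never touched.
    """
    seq: List[object] = list(prompt)
    for _ in range(max_tokens):
        tok = next_token(seq)
        seq.append(tok)
        if tok == eos:
            break
        if tok == STEP_END:
            # Insert the chosen image patches as visual context for the next step.
            seq.extend(select_patches(seq))
    return seq


# Toy usage with scripted stand-ins for the model and the selector.
_script = iter(["The", "cat", "\n", "is", "black", "<eos>"])
out = interleaved_decode(
    next_token=lambda seq: next(_script),
    select_patches=lambda seq: ["<patch_3>", "<patch_7>"],
    prompt=["<img>", "Q:"],
)
```

In the toy run, the two patch tokens appear immediately after the newline, so the second reasoning step is generated with that visual context in the sequence.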

Attention-driven Selection Strategy

ADS is the paper's central technical contribution. It uses the attention maps the VLM already produces to identify relevant image regions during generation and inserts them into the reasoning sequence as interleaved-modal content. Because it only reads existing attention, the selection adds little computational latency, and because it introduces no additional parameters, it can be applied across a range of existing VLM architectures.
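A minimal, parameter-free sketch of attention-driven selection, assuming the attention weights from the current decoding position to each image patch are available as one row per head or layer. The function name, the averaging of rows, and the top-`k` cutoff are illustrative assumptions, not details confirmed by the paper. The selected patches are returned as the model's existing visual tokens, so nothing new is learned.

```python
from typing import List, Sequence


def attention_driven_select(
    attn: Sequence[Sequence[float]],
    patch_tokens: Sequence[object],
    k: int,
) -> List[object]:
    """Pick the k image patches that receive the most attention (hypothetical helper).

    attn: attention weights from the current position to each image patch,
        one row per head or layer; averaging the rows is an assumption.
    patch_tokens: already-encoded visual tokens, one per patch, reused as-is.
    """
    if not attn:
        raise ValueError("need at least one row of attention weights")
    n = len(patch_tokens)
    if any(len(row) != n for row in attn):
        raise ValueError("each attention row must have one weight per patch")
    # Average attention over heads/layers for each patch.
    scores = [sum(row[i] for row in attn) / len(attn) for i in range(n)]
    # Indices of the k highest-scoring patches.
    top = sorted(range(n), key=lambda i: scores[i], reverse=True)[:k]
    # Re-sort by index so the copied patches keep their raster (spatial) order.
    return [patch_tokens[i] for i in sorted(top)]


# Toy usage: patches 1 and 3 receive the most averaged attention.
chosen = attention_driven_select(
    attn=[[0.1, 0.5, 0.1, 0.3], [0.2, 0.4, 0.0, 0.4]],
    patch_tokens=["p0", "p1", "p2", "p3"],
    k=2,
)
```

Re-sorting the chosen indices keeps the inserted patches in their original spatial order, which is one plausible design choice; returning them in score order would also be reasonable.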

Empirical Validation

ICoT, implemented via ADS, is evaluated on several benchmarks, including M³CoT, ScienceQA, and LLaVA-W. Applied to state-of-the-art VLMs such as Chameleon and Qwen2-VL, it improves performance by up to 14% over existing multimodal CoT approaches. The authors attribute this gain to clearer multimodal reasoning chains that draw on both textual and visual information.

Implications and Future Directions

ICoT is an exploratory step toward more coherent and interpretable multimodal reasoning. By structuring reasoning in a way that better mirrors how humans combine what they see with what they think, it opens a path for AI systems operating in visually rich domains. Future work could refine how visual cues are selected, tackle more complex reasoning scenarios, and extend ICoT to more diverse and dynamic environments.

Conclusion

The paper offers a detailed approach to strengthening the reasoning abilities of VLMs. By integrating visual cues into the CoT framework, ICoT addresses a key limitation of text-only rationales, improving benchmark performance as well as the interpretability of VLM outputs. The work provides a foundation for further research on multimodal reasoning and comprehension.