A Survey on Natural Language Understanding and Inference in Visual Question Answering

The paper "Natural Language Understanding and Inference with MLLM in Visual Question Answering: A Survey" reviews methods and developments in Visual Question Answering (VQA), focusing on natural language understanding and inference with multimodal large language models (MLLMs). It traces how computer vision and natural language processing (NLP) have been combined into models that answer questions about visual input, and the challenges that remain.

The paper categorizes VQA models based on their approach to feature extraction, information fusion, and knowledge reasoning, elaborating on advancements as well as gaps that persist in the domain.

Visual and Textual Feature Extraction

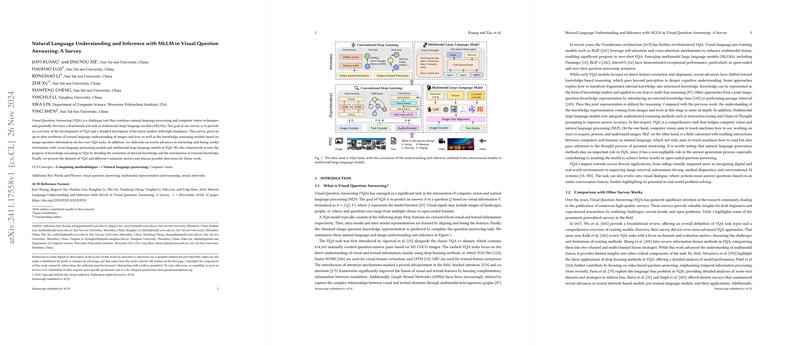

To ground language understanding in visual input, the paper first reviews visual feature extraction, which relies largely on convolutional neural networks (CNNs) and vision transformers. CNNs such as VGG-Net and ResNet have been foundational, while newer approaches use transformer-based encoders such as ViT, or the image encoder of CLIP, to improve fine-grained visual understanding.
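
To make the transformer-based route concrete, the following is a minimal sketch of the patch-splitting step that ViT-style encoders apply before any learned layers. It assumes a tiny grayscale image stored as a list of rows; the function name `split_into_patches` and the toy data are illustrative and do not come from the survey. The learned linear projection and position embeddings that follow in a real model are omitted.

```python
def split_into_patches(image, patch_size):
    """Split a 2D grayscale image (list of rows) into flattened square patches.

    Patches are returned in row-major order, as a ViT-style tokenizer would
    produce them before applying a learned linear projection (omitted here).
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[top + dy][left + dx]
                for dy in range(patch_size)
                for dx in range(patch_size)
            ]
            patches.append(patch)
    return patches


# A toy 4x4 "image" whose pixel values are 0..15 (illustrative data only).
toy_image = [[row * 4 + col for col in range(4)] for row in range(4)]
patches = split_into_patches(toy_image, patch_size=2)
```

Each flattened patch plays the role of one visual token, which is why image resolution and patch size together determine the length of the sequence the encoder sees.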

Textual feature extraction has typically relied on bag-of-words or RNN-based architectures, with BERT later offering substantial improvements in encoding natural language input. Fusing the visual and textual features is critical for effective VQA, and the survey discusses element-wise operations, attention mechanisms, and graph neural networks (GNNs) as current approaches to multimodal fusion.
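
As a rough illustration of two of these fusion styles, the sketch below implements an element-wise (Hadamard) product and a simple question-guided attention over image regions. It assumes the image regions and the question are already encoded as vectors of the same dimension; the function names and toy values are hypothetical, and real systems add learned projections that are left out here.

```python
import math


def elementwise_fusion(image_vec, question_vec):
    """Fuse two equal-length vectors with a Hadamard (element-wise) product."""
    if len(image_vec) != len(question_vec):
        raise ValueError("vectors must have the same length")
    return [v * q for v, q in zip(image_vec, question_vec)]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fusion(region_feats, question_vec):
    """Weight image regions by their dot-product relevance to the question.

    Returns the attention weights and the attended image vector fused with
    the question via an element-wise product.
    """
    scores = [sum(r * q for r, q in zip(region, question_vec))
              for region in region_feats]
    weights = softmax(scores)
    dim = len(question_vec)
    attended = [sum(w * region[i] for w, region in zip(weights, region_feats))
                for i in range(dim)]
    return weights, elementwise_fusion(attended, question_vec)


# Hypothetical toy features: three image regions and one question vector.
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
question = [2.0, 0.0]
weights, fused = attention_fusion(regions, question)
```

In this toy case the first and third regions align with the question vector and receive equal, larger weights than the second region, which is the behavior attention-based fusion is meant to provide.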

Vision-Language Pre-training Models

The survey highlights vision-language pre-training models, built on either dual-stream or single-stream architectures, as pivotal for advancing VQA. Models such as LXMERT and ViLBERT, both dual-stream designs, learn representations across the visual and textual modalities and use self-supervised objectives to improve performance on downstream tasks, including VQA. Pre-training on large datasets helps these models generalize to unseen data.
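
The difference between the two architecture families can be sketched with plain scaled dot-product attention. This is a simplified illustration under stated assumptions, not the design of any specific model: learned projections, multiple heads, feed-forward layers, and the within-modality self-attention that real dual-stream models also use are all omitted, and the names `single_stream` and `dual_stream` are hypothetical.

```python
import math


def attend(queries, keys, values):
    """Scaled dot-product attention without learned projections."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ])
    return outputs


def single_stream(text_tokens, image_tokens):
    """One encoder: concatenate both modalities, then self-attend jointly."""
    sequence = text_tokens + image_tokens
    return attend(sequence, sequence, sequence)


def dual_stream(text_tokens, image_tokens):
    """Two encoders that exchange information through cross-attention."""
    text_out = attend(text_tokens, image_tokens, image_tokens)
    image_out = attend(image_tokens, text_tokens, text_tokens)
    return text_out, image_out


text = [[1.0, 0.0], [0.0, 1.0]]               # two toy text tokens
image = [[0.5, 0.5], [1.0, 1.0], [0.0, 2.0]]  # three toy image regions

joint = single_stream(text, image)
text_out, image_out = dual_stream(text, image)
```

The single-stream variant yields one sequence covering both modalities, while the dual-stream variant keeps a separate output per modality, each informed by the other.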

Multimodal LLMs (MLLMs)

A considerable portion of the paper covers the growing role of MLLMs in VQA, driven by the integration of LLMs into multimodal systems. It groups the approaches into three streams: LLM-aided visual understanding, image-to-text generation, and unified MLLM frameworks. It also examines the training paradigms for these models, emphasizing image-to-text alignment as the step that lets an LLM handle visual input directly.
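
One common way to realize image-to-text alignment is a projection layer that maps visual features into the LLM's embedding space so they can be placed alongside text token embeddings. The sketch below assumes a single linear projection with hand-written weights; in practice the weights are learned, and the dimensions, names, and values here are purely illustrative rather than taken from the survey.

```python
def project(visual_feat, weight, bias):
    """Apply a linear map: one output value per row of `weight`."""
    return [
        sum(w * x for w, x in zip(row, visual_feat)) + b
        for row, b in zip(weight, bias)
    ]


def build_llm_input(visual_feats, text_embeddings, weight, bias):
    """Project visual features into the LLM embedding space and prepend them."""
    visual_tokens = [project(feat, weight, bias) for feat in visual_feats]
    return visual_tokens + text_embeddings


# Hypothetical sizes: 3-dim visual features, 2-dim LLM embeddings.
weight = [[1.0, 0.0, 1.0],
          [0.0, 1.0, 0.0]]   # shape (llm_dim, visual_dim); learned in practice
bias = [0.0, 0.5]

visual_feats = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
text_embeddings = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]

llm_input = build_llm_input(visual_feats, text_embeddings, weight, bias)
```

After projection, the visual tokens have the same dimensionality as the text embeddings, so the LLM can process the combined sequence without architectural changes.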

Moreover, advanced techniques such as multimodal instruction tuning and chain-of-thought prompting are outlined as instrumental in honing the reasoning capabilities of MLLMs in VQA tasks.
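
As a small illustration of chain-of-thought prompting in a VQA setting, the sketch below assembles a prompt that asks for step-by-step reasoning and parses a final answer from the response. The prompt wording, the `Answer:` convention, and both helper names are assumptions made for this example; the image is represented by a caption string as a stand-in for whatever visual input the model actually receives.

```python
def build_cot_prompt(question, image_caption=None):
    """Assemble a chain-of-thought style prompt for a VQA query.

    `image_caption` stands in for whatever textual or embedded image
    representation the model actually receives.
    """
    lines = []
    if image_caption:
        lines.append(f"Image description: {image_caption}")
    lines.append(f"Question: {question}")
    lines.append("Let's think step by step, then give the final answer "
                 "on a new line starting with 'Answer:'.")
    return "\n".join(lines)


def extract_answer(model_output):
    """Return the text after the last 'Answer:' marker, or None if absent."""
    marker = "Answer:"
    index = model_output.rfind(marker)
    if index == -1:
        return None
    return model_output[index + len(marker):].strip()


prompt = build_cot_prompt(
    "How many people are holding umbrellas?",
    image_caption="Three people on a rainy street, two with umbrellas.",
)
# A made-up model response, used only to exercise the parser.
sample_output = "Two of the three people carry umbrellas.\nAnswer: 2"
answer = extract_answer(sample_output)
```

Separating the reasoning text from a clearly marked final answer makes the output easier to score against VQA ground truth.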

Knowledge Reasoning and Challenges

On the inference side, the paper gives a detailed overview of knowledge reasoning techniques, grouped into conventional, one-hop, and multi-hop strategies. These strategies draw on internal and external knowledge sources, integrate feature-based methods, and apply GNNs or memory networks to support more robust reasoning.
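
The distinction between one-hop and multi-hop reasoning can be illustrated with a breadth-first search over a tiny set of knowledge triples. This is a symbolic toy under stated assumptions: the triples are invented, and the systems covered by the survey typically reason over learned representations (for example with GNNs or memory networks) rather than an explicit search like this.

```python
from collections import deque

# Hypothetical knowledge triples: (subject, relation, object).
TRIPLES = [
    ("umbrella", "used_for", "rain protection"),
    ("rain protection", "needed_in", "rainy weather"),
    ("rainy weather", "caused_by", "clouds"),
    ("dog", "is_a", "animal"),
]


def neighbors(entity):
    """Return (relation, object) pairs directly linked to `entity`."""
    return [(rel, obj) for subj, rel, obj in TRIPLES if subj == entity]


def find_path(start, goal, max_hops):
    """Breadth-first search for a relation path of at most `max_hops` edges."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        entity, path = queue.popleft()
        if entity == goal:
            return path
        if len(path) == max_hops:
            continue  # hop budget exhausted on this branch
        for rel, obj in neighbors(entity):
            if obj not in visited:
                visited.add(obj)
                queue.append((obj, path + [(entity, rel, obj)]))
    return None


one_hop = find_path("umbrella", "rainy weather", max_hops=1)
multi_hop = find_path("umbrella", "rainy weather", max_hops=2)
```

With a budget of one hop the search cannot link the detected object to the target concept, whereas two hops suffice, which mirrors why questions requiring chained facts need multi-hop strategies.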

The paper identifies key challenges in the VQA field: greater robustness to biases ingrained in datasets, better explainability of model outputs, and stronger natural language generation so that answers are nuanced and contextually accurate.
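
One simple way to probe for dataset bias is to measure how well a "blind" baseline does when it answers from the question alone, never looking at the image. The sketch below is an illustrative diagnostic, not a method described in the survey; the question-type heuristic, the helper names, and the question-answer pairs are all made up for the example.

```python
from collections import Counter, defaultdict


def question_type(question):
    """Crude question type: the first two lowercase words of the question."""
    return " ".join(question.lower().split()[:2])


def fit_prior(train_pairs):
    """Map each question type to its most frequent training answer."""
    counts = defaultdict(Counter)
    for question, answer in train_pairs:
        counts[question_type(question)][answer] += 1
    return {qtype: c.most_common(1)[0][0] for qtype, c in counts.items()}


def prior_accuracy(prior, test_pairs):
    """Accuracy of answering from the question type alone, ignoring the image."""
    correct = sum(
        prior.get(question_type(q)) == a for q, a in test_pairs
    )
    return correct / len(test_pairs)


# Made-up question-answer pairs for illustration only.
train = [
    ("What sport is being played?", "tennis"),
    ("What sport is shown here?", "tennis"),
    ("What sport is this?", "baseball"),
    ("Is there a dog?", "yes"),
    ("Is there a cat?", "yes"),
]
test = [
    ("What sport is on TV?", "tennis"),
    ("What sport is he playing?", "soccer"),
    ("Is there a person?", "yes"),
    ("Is there a car?", "no"),
]

prior = fit_prior(train)
accuracy = prior_accuracy(prior, test)
```

If such an image-blind baseline scores well on a benchmark, a model can appear strong by exploiting answer priors rather than by understanding the image, which is the kind of bias the robustness challenge refers to.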

Conclusion

In conclusion, the survey canvasses the landscape of VQA research and comments on current capabilities and future directions. It highlights how MLLMs and vision-language pre-training models may help address existing limitations in VQA tasks. The overview serves both as a guide to existing methods and as a starting point for approaches that more tightly integrate reasoning, understanding, and knowledge extraction in VQA models. The intersection of vision and language is likely to remain a rich area for research and application.