The paper "Star Attention: Efficient LLM Inference over Long Sequences" addresses the computational and memory challenges posed by processing long sequences in Transformer-based LLMs, particularly due to the quadratic complexity of traditional self-attention mechanisms. The authors introduce a novel method called Star Attention, which improves the efficiency of inference over long sequences by employing a two-phase block-sparse attention mechanism.

Key Concepts and Motivation

LLMs have expanded their context lengths to support applications requiring the processing of extensive text sequences, such as multi-document summarization and large corpus retrieval. This increased context length, though beneficial for applications, leads to substantial computational overhead. Various prior techniques attempted to alleviate these issues by optimizing attention mechanisms through sparse approximations or distributed computational strategies, such as Flash Attention and Ring Attention.

Star Attention Methodology

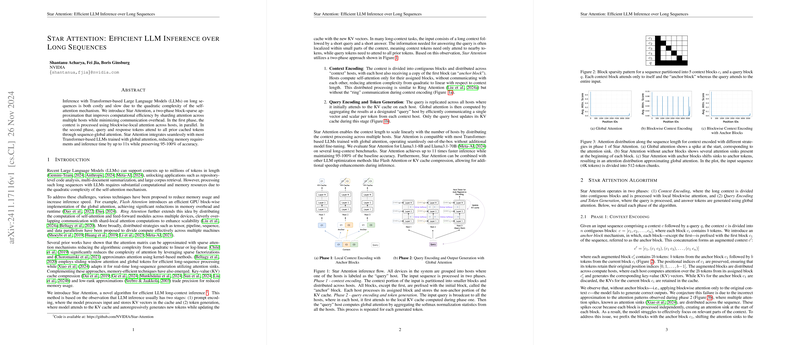

Star Attention presents a two-phase approach aimed at enhancing computational efficiency:

- Context Encoding:

- In this phase, the context is divided into contiguous blocks distributed across multiple computing hosts. Each block is led by an "anchor block" (the first block in the sequence). This design leads to blockwise-local attention, reducing attention complexity from quadratic to linear with respect to context length.

- By prefixing each block with the anchor block, the model preserves the sink-like attention distribution, which closely resembles global attention without requiring full global context in each block.

- Query Encoding and Token Generation:

- Queries and responses are managed through sequence-global attention. The queries are replicated across hosts where they initially attend to local KV (key-value) caches computed during block processing.

- Global attention is then computed at a designated "query" host by efficiently communicating selective data between hosts, further reducing computational costs and improving inference time.

Evaluation and Results

Star Attention demonstrates 11x reductions in memory and inference time when applied to LLMs such as Llama3.1-8B and Llama3.1-70B, with negligible losses in accuracy, achieving 95-100% fidelity compared to baseline global attention models. These results were verified over several long-context benchmarks.

Implications and Usage

- Efficiency and Scalability: Star Attention allows context lengths to scale linearly with the number of hosts involved in processing, making it highly suitable for distributed computing environments.

- Ease of Integration: The method integrates easily with most Transformer-based LLMs, requiring no additional fine-tuning, and works in conjunction with other optimization techniques like Flash Attention.

- Flexibility: Star Attention's two-stage processing allows adjustments to the size of blocks, enabling a trade-off between speed and accuracy based on users' specific needs.

Challenges and Future Work

While showing promising results, Star Attention does present challenges in tasks requiring deep context understanding, such as Multi-Hop Tracing, where inter-block communication is crucial. Future work might explore optimizing anchor block configurations or further balancing block sizes to refine accuracy while preserving efficiency.

Overall, Star Attention offers a significant advancement in managing long-sequence inferences in LLMs by effectively reducing computational load while maintaining high accuracy levels, making it a valuable tool for deploying resource-heavy LLMs in practical applications.