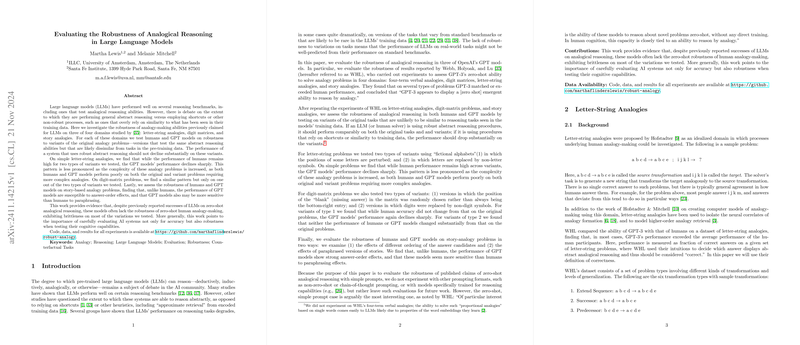

Evaluating the Robustness of Analogical Reasoning in LLMs

Recent work on LLMs has paid considerable attention to their cognitive reasoning capabilities, and to analogical reasoning in particular. The paper "Evaluating the Robustness of Analogical Reasoning in LLMs" by Martha Lewis and Melanie Mitchell examines how robust and reliable LLMs, particularly the GPT series, are on variants of the analogy benchmarks devised by Webb et al. (2023), who originally claimed that GPT models exhibit emergent analogical reasoning abilities.

Overview and Objectives

The research starts from an open question: although LLMs have shown promising results on certain benchmarks, do they genuinely reason abstractly, or do they rely on narrower heuristics derived from vast training data? The paper addresses this by extending the analogy tasks with variants that differ from likely pre-training data, to assess whether LLM performance remains robust under novel conditions.

Methodology

Three classical analogy formats were investigated:

- Letter-String Analogies: Problems that ask for an analogous transformation of letter strings.

- Digit Matrices: Inspired by Raven's Progressive Matrices, these test the ability to infer the rules governing a matrix of digits and fill in its blank cell, with rules ranging from simple patterns to logical operations.

- Story Analogies: Evaluations of causal reasoning via story comprehension and analogy.

For each task, the study compared GPT-3, GPT-3.5, and GPT-4 against human participants on both the original tasks and newly devised variants designed to test whether the models generalize their reasoning beyond familiar examples that may have appeared in training data.
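To make the idea of a task variant concrete, here is a minimal Python sketch of a letter-string analogy posed once over the standard alphabet and once over a shuffled one. The shuffled alphabet, the "successor" rule, the prompt layout, and the helper names `successor_analogy` and `format_prompt` are all illustrative assumptions; the summary above does not specify how the paper's variants were constructed.

```python
import random
import string

def successor_analogy(alphabet, src_start, tgt_start, length=4):
    """Build one analogy: (source, transformed source, target, expected answer).

    The rule (an assumption for this sketch) replaces the last letter of a
    run with its successor in the given alphabet ordering.
    """
    def apply_rule(start):
        run = alphabet[start:start + length]
        return run, run[:-1] + [alphabet[start + length]]

    source, source_out = apply_rule(src_start)
    target, target_out = apply_rule(tgt_start)
    return source, source_out, target, target_out

def format_prompt(alphabet, source, source_out, target):
    """Render the problem as text; this layout is hypothetical."""
    return (
        "Alphabet: [" + " ".join(alphabet) + "]\n"
        "[" + " ".join(source) + "] [" + " ".join(source_out) + "]\n"
        "[" + " ".join(target) + "] [ ? ]"
    )

standard = list(string.ascii_lowercase)

# Hypothetical variant: same rule, but letter order is shuffled so that
# memorized facts about the familiar alphabet no longer help.
shuffled = standard[:]
random.Random(0).shuffle(shuffled)

std_problem = successor_analogy(standard, 0, 8)
var_problem = successor_analogy(shuffled, 0, 8)

print(format_prompt(standard, *std_problem[:3]))
print(format_prompt(shuffled, *var_problem[:3]))
```

Both problems share the same abstract structure, so a solver that has actually induced the rule should handle either one given the alphabet, while a solver leaning on familiarity with "a b c d" may not.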

Key Insights and Numerical Findings

- Letter-String Analogies: Human participants were more accurate than GPT models, particularly on zero-generalization tasks, highlighting the models' sensitivity to superficial characteristics. As problems grew more complex through added generalizations, performance declined for both humans and models.

- Digit Matrices: Humans maintained performance levels regardless of blank position changes in matrices. However, GPT models exhibited significant performance drops when the location of the task's unknown changed, indicating brittleness under mild task reconfiguration.

- Story Analogies: GPT-4 was influenced by the ordering of story presentation, unlike human participants, who showed robustness to such superficial presentation orderings. When stories were paraphrased to eliminate surface similarity cues, both humans and models displayed degraded performance, although human participants showed relatively better resilience.
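The blank-position manipulation described for digit matrices can be illustrated with a short Python sketch. The 3x3 size, the progression rule, the bracketed rendering, and the helper names `progression_matrix`, `blank_out`, and `render` are assumptions chosen for illustration, not the paper's actual stimuli.

```python
def progression_matrix(start=1, row_step=3, col_step=1, size=3):
    """Build a size x size matrix where each cell is
    start + row * row_step + col * col_step (a simple progression rule)."""
    return [
        [start + r * row_step + c * col_step for c in range(size)]
        for r in range(size)
    ]

def blank_out(matrix, row, col):
    """Hide one cell and return (puzzle as strings, hidden answer)."""
    answer = matrix[row][col]
    puzzle = [
        ["?" if (r, c) == (row, col) else str(value)
         for c, value in enumerate(line)]
        for r, line in enumerate(matrix)
    ]
    return puzzle, answer

def render(puzzle):
    """Render each row as bracketed cells, e.g. "[7] [8] [?]"."""
    return "\n".join("[" + "] [".join(row) + "]" for row in puzzle)

matrix = progression_matrix()

# Assumed "original" layout: the blank sits in the bottom-right cell.
original_puzzle, original_answer = blank_out(matrix, 2, 2)

# Variant: identical rule and digits, but the blank moves elsewhere.
variant_puzzle, variant_answer = blank_out(matrix, 0, 1)

print(render(original_puzzle))
print()
print(render(variant_puzzle))
```

Because the underlying rule is unchanged, a solver that has inferred it should find both puzzles equally easy; a gap in accuracy between the two layouts is the kind of brittleness the digit-matrix finding describes.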

Implications and Future Directions

The results imply that LLMs, despite some capacity for analogical reasoning, often rely on shortcuts derived from similarity to training data rather than on robust abstract reasoning. This brittleness under task variation mirrors a broader issue in AI reasoning: the gap between surface pattern recognition and an understanding of deep structural analogy. The findings argue for evaluation benchmarks that force LLMs to rely less on pre-learned, surface-level heuristics and more on transferable reasoning processes.

For future research, the paper suggests exploring diverse prompting strategies, model training designed explicitly for reasoning, and the incorporation of meta-cognitive processes to align AI reasoning more closely with human cognitive mechanisms. These lessons point not only toward models with more robust reasoning but also toward a better understanding of how reasoning works in neural architectures more generally.

By systematically evaluating models on adapted versions of established analogy tasks, this work contributes to the debate over how closely AI reasoning parallels human cognition, and it informs the design and evaluation of future LLMs.