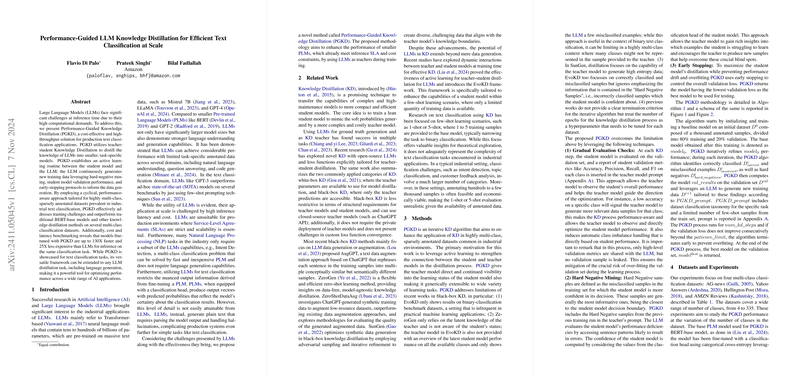

Performance-Guided LLM Knowledge Distillation for Efficient Text Classification at Scale

The paper by Di Palo, Singhi, and Fadlallah presents Performance-Guided Knowledge Distillation (PGKD), an approach to the computational cost of deploying large language models (LLMs) in production environments, specifically for text classification tasks. The method aims to improve inference efficiency by distilling the knowledge of an LLM into smaller, task-specific pre-trained language models (PLMs), thereby reducing the cost and latency of inference.

Methodological Overview

The research introduces an iterative knowledge distillation algorithm that uses LLMs (acting as teacher models) to guide the training of compact student models. PGKD involves several key components:

- Active Learning: This cyclic process involves the LLM continuously generating training samples for the student model. It leverages hard-negative mining—the identification of misclassified samples for which the student model is confident—to inform sample generation. The goal is to enhance the student model's learning by focusing on its deficiencies.

- Performance-Aware Training: PGKD observes the student model's validation metrics for each class. This feedback, collected at every iteration, lets the LLM generate data aimed directly at the areas where the student's performance needs to improve.

- Early Stopping Protocols: To prevent overfitting and unnecessary computations, an early stopping criterion based on validation losses is put into place. The iterative process ceases when further training does not yield improved performance on the validation set.
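
The three components above can be sketched as a single loop. This is a minimal illustration under stated assumptions, not the authors' implementation: `train_and_eval` and `teacher` are hypothetical callables standing in for student training and the LLM call, the 0.8 confidence threshold and the patience of 2 are arbitrary choices, and the prompt layout in `build_prompt` is invented for the example.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Example = Tuple[str, str]        # (text, gold label)
Prediction = Tuple[str, float]   # (predicted label, confidence in [0, 1])


def mine_hard_negatives(examples: List[Example],
                        predictions: List[Prediction],
                        min_confidence: float = 0.8) -> List[Tuple[str, str, str]]:
    """Validation examples the student got wrong while being confident."""
    return [
        (text, gold, pred)
        for (text, gold), (pred, conf) in zip(examples, predictions)
        if pred != gold and conf >= min_confidence
    ]


def per_class_accuracy(examples: List[Example],
                       predictions: List[Prediction]) -> Dict[str, float]:
    """Accuracy per gold label, so weak classes can be named in the prompt."""
    correct, total = defaultdict(int), defaultdict(int)
    for (_, gold), (pred, _) in zip(examples, predictions):
        total[gold] += 1
        correct[gold] += int(pred == gold)
    return {label: correct[label] / total[label] for label in total}


def build_prompt(scores: Dict[str, float],
                 hard_negatives: List[Tuple[str, str, str]],
                 n_samples: int = 10) -> str:
    """Assumed prompt layout: per-class scores, then the mined mistakes."""
    lines = ["Per-class validation accuracy of the student:"]
    lines += [f"- {label}: {acc:.2f}" for label, acc in sorted(scores.items())]
    lines.append("Confidently misclassified examples (text | gold | predicted):")
    lines += [f"- {text} | {gold} | {pred}" for text, gold, pred in hard_negatives]
    lines.append(f"Generate {n_samples} new labeled examples targeting these weaknesses.")
    return "\n".join(lines)


class EarlyStopper:
    """Stop once validation loss has not improved for `patience` rounds."""

    def __init__(self, patience: int = 2):
        self.patience = patience
        self.best = float("inf")
        self.stale = 0

    def should_stop(self, val_loss: float) -> bool:
        if val_loss < self.best:
            self.best, self.stale = val_loss, 0
        else:
            self.stale += 1
        return self.stale >= self.patience


def pgkd_loop(train_and_eval, teacher, train_set, val_set,
              max_rounds: int = 10, patience: int = 2):
    """Run distillation rounds; returns (augmented training set, rounds run)."""
    data = list(train_set)
    stopper = EarlyStopper(patience)
    rounds = 0
    for rounds in range(1, max_rounds + 1):
        # Hypothetical callable: trains the student on `data`, then returns
        # its validation predictions and validation loss.
        predictions, val_loss = train_and_eval(data, val_set)
        if stopper.should_stop(val_loss):
            break
        prompt = build_prompt(per_class_accuracy(val_set, predictions),
                              mine_hard_negatives(val_set, predictions))
        # Hypothetical callable: the teacher LLM returns new (text, label) pairs.
        data.extend(teacher(prompt))
    return data, rounds
```

In practice `train_and_eval` would wrap fine-tuning of the student model and `teacher` would wrap a call to the LLM plus parsing of its output; both are left abstract here because the summary does not specify them.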

Noteworthy Findings

PGKD outperforms conventional BERT-based models and naive distillation methods across text classification datasets ranging from AG-news with four classes to AMZN reviews with 335 classes. The distilled student models are reported to be up to 130 times faster and 25 times less expensive at inference than a full-scale LLM deployed for the same classification task. The performance gain from PGKD grows with the number of classes, which highlights its suitability for industrial scenarios where classes may be numerous and annotated data sparse.

Future Directions and Implications

The findings indicate several promising avenues for future research and development in industrial AI applications:

- Extended Applications: While PGKD is demonstrated primarily for text classification, its framework is theoretically adaptable to any LLM distillation task, including various NLP tasks such as text generation.

- Optimization of Prompt Engineering: The effectiveness of PGKD is dependent on the quality of the prompts used for LLM data generation. Future work may explore optimal prompting strategies or even automated prompt generation techniques to further enhance distillation efficacy.

- Exploration of Teacher LLMs and Student Models: Future research could investigate the impact of different LLMs used as teacher models in PGKD and the influence of the student model's size and architecture on the distillation outcomes.

Conclusion

PGKD addresses the challenge of balancing LLM capabilities against the practical limits of production deployment. By improving inference efficiency while maintaining robust classification performance, this research opens the way for broader LLM applications in domains where computational efficiency and cost-effectiveness are critical. As reliance on AI grows, approaches like PGKD can help enable scalable, efficient deployment of sophisticated models across diverse sectors.