LLM2CLIP: Enhanced Visual Representation via LLMs

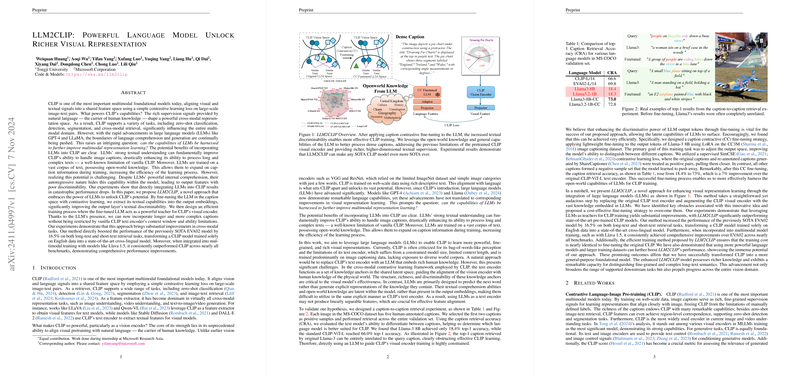

The paper introduces LLM2CLIP, a framework that enhances multimodal foundation models by combining the capabilities of large language models (LLMs) with the CLIP (Contrastive Language–Image Pre-training) architecture. CLIP plays a pivotal role in multimodal tasks because it aligns visual and textual data in a shared feature space. Given the rapid progress in LLMs, the paper asks whether such models can be used to further improve vision-language representations, particularly those learned by CLIP.

Conceptual Framework and Methodology

LLM2CLIP proposes redefining the interaction between the textual and visual encoders by using an LLM in place of CLIP's original text encoder. The central innovation is fine-tuning the LLM to act as a text encoder, which addresses the poor discriminability that LLM output features typically show when they are aligned with visual data. This is done with a caption contrastive fine-tuning strategy that significantly boosts the discriminative power of the LLM's output, enabling it to provide stronger textual supervision when training CLIP's visual encoder.
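A contrastive objective of this kind can be sketched with a standard InfoNCE loss. The sketch below is illustrative rather than the authors' implementation: it assumes that positives are two captions describing the same image and that all other captions in the batch serve as negatives. The function names, the temperature value, and the toy embeddings are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def caption_contrastive_loss(anchors, positives, temperature=0.07):
    """Mean InfoNCE loss: anchors[i] should be closest to positives[i].

    Assumption: anchors[i] and positives[i] are embeddings of two captions
    of the same image; every other row in `positives` acts as a negative.
    """
    a = [l2_normalize(v) for v in anchors]
    p = [l2_normalize(v) for v in positives]
    total = 0.0
    for i, a_i in enumerate(a):
        # Temperature-scaled cosine similarity to every candidate caption.
        logits = [sum(x * y for x, y in zip(a_i, p_j)) / temperature for p_j in p]
        # Numerically stable log-sum-exp for the softmax denominator.
        peak = max(logits)
        log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
        # Cross-entropy with the matching caption as the target class.
        total += log_sum_exp - logits[i]
    return total / len(a)

# Toy embeddings (hypothetical): two images, two captions each.
captions_a = [[1.0, 0.0], [0.0, 1.0]]
captions_b = [[0.9, 0.1], [0.1, 0.9]]

aligned = caption_contrastive_loss(captions_a, captions_b)
mismatched = caption_contrastive_loss(captions_a, captions_b[::-1])
print(f"aligned loss: {aligned:.4f}, mismatched loss: {mismatched:.4f}")
```

Minimizing this loss pulls matching captions together and pushes non-matching ones apart, which is the sense in which fine-tuning makes the output features more discriminative.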

The authors show that directly substituting CLIP's text encoder with an LLM leads to suboptimal performance, primarily because LLMs are trained for prediction and their output features are poorly suited to the discriminative tasks CLIP requires. The fine-tuned LLM is therefore used as a teaching signal to guide the visual encoder, which yields significantly improved performance across a variety of image-text retrieval tasks. Notably, the method turns a CLIP model trained only on English data into a cross-lingual model, showcasing its learning efficiency and adaptability.

Experimental Analysis

The empirical results show that LLM2CLIP improves on previously state-of-the-art (SOTA) models such as EVA02 by substantial margins. Key highlights include a 16.5% performance improvement in retrieval tasks with both short and long texts, which supports the effectiveness of the approach. The caption retrieval accuracy (CRA) of the LLM rises from 18.4% to 73% with fine-tuning, a gain of about 55 percentage points, surpassing previous best-performing text encoders.
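To make the CRA metric concrete, the sketch below computes a top-1 retrieval accuracy over caption embeddings. It assumes CRA is the fraction of query captions whose most similar candidate, by cosine similarity, is their paired caption; the exact protocol used in the paper may differ, and the function name and toy embeddings are hypothetical.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def caption_retrieval_accuracy(queries, candidates):
    """Top-1 accuracy, assuming queries[i] is paired with candidates[i]."""
    q = [l2_normalize(v) for v in queries]
    c = [l2_normalize(v) for v in candidates]
    hits = 0
    for i, q_i in enumerate(q):
        sims = [sum(x * y for x, y in zip(q_i, c_j)) for c_j in c]
        # Index of the most similar candidate (first index wins on ties).
        best = max(range(len(sims)), key=lambda j: sims[j])
        if best == i:
            hits += 1
    return hits / len(q)

# Toy embeddings (hypothetical), not data from the paper.
queries = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
discriminative = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
collapsed = [[1.0, 0.9, 0.9], [1.0, 0.9, 0.9], [1.0, 0.9, 0.9]]

acc_discriminative = caption_retrieval_accuracy(queries, discriminative)
acc_collapsed = caption_retrieval_accuracy(queries, collapsed)
print(acc_discriminative, acc_collapsed)
```

Embeddings that are nearly identical across captions, as in the `collapsed` case, cannot separate the paired caption from the others, which is the kind of poor discriminability that a low CRA reflects.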

Moreover, the training overhead of LLM2CLIP remains comparable to that of the original CLIP, which keeps it practical in large-scale settings. The design makes it feasible to incorporate very large LLMs without prohibitive computational cost.

Theoretical and Practical Implications

From a theoretical standpoint, LLM2CLIP underscores the importance of textual discriminability and knowledge integration in multimodal learning, highlighting the potential of LLMs to bring richer semantic understanding to visual models. Practically, the approach lets CLIP models process longer and denser text, addressing a known limitation of vanilla CLIP related to its context window and text encoder capacity.

Furthermore, the cross-lingual adaptability demonstrated by LLM2CLIP suggests that models trained in one language can generalize effectively to others simply by leveraging the knowledge already present in the LLM. This could change how cross-lingual and multilingual models are developed in the future.

Future Directions

The LLM2CLIP methodology opens several avenues for future research. Further work could optimize the joint training of LLMs and CLIP models and explore methods that allow gradients to update the LLM during training without compromising computational efficiency. Training on larger datasets could unlock further capabilities in vision-language tasks, and integrating more diverse datasets could improve the model's ability to handle a broader range of downstream tasks.

In conclusion, the LLM2CLIP framework marks a significant advance in multimodal representation learning: it harnesses the knowledge encapsulated in LLMs to extend and enrich the capabilities of CLIP and sets a new benchmark in cross-modal retrieval.