An Analysis of "CodeTree: Agent-guided Tree Search for Code Generation with LLMs"

The paper "CodeTree: Agent-guided Tree Search for Code Generation with LLMs" introduces a structured framework for improving LLM performance on code generation. Code generation has extended the utility of LLMs well beyond natural language processing, but it poses distinct difficulties: the output must execute and be functionally correct, not merely look plausible. The paper addresses these challenges with CodeTree, a framework that combines a tree-based search strategy with LLM-driven feedback.

Overview and Methodology

The CodeTree framework uses a tree structure to systematically explore the search space of a code generation task. This differs from prior approaches, which either generated a very large number of candidate outputs or iteratively refined a single output. CodeTree balances exploration and exploitation through multi-stage planning with three specialized agents, Thinker, Solver, and Debugger, which handle strategy planning, solution implementation, and iterative refinement respectively. A fourth agent, the Critic Agent, coordinates them and drives tree expansion through its feedback.

The methodological innovation includes:

- Strategy Generation: The Thinker Agent generates multiple problem-solving strategies, which serve as potential paths in a unified search space.

- Solution Implementation: The Solver Agent translates these strategies into executable code candidates.

- Iterative Refinement: The Debugger Agent improves a candidate program by reflecting on execution feedback and LLM-generated feedback.

- Critic Agent: Closes the feedback loop by scoring nodes and deciding which ones to explore or refine further, until a satisfactory solution is found.

This hierarchical organization of agents, coupled with the tree's flexibility for dynamic expansion, allows CodeTree to efficiently achieve high accuracy across benchmarks.
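The interaction between the four roles can be illustrated with a toy search loop. This is a minimal sketch under loud assumptions, not the paper's implementation: the `thinker`, `solver`, and `debugger` functions are hard-coded stubs standing in for LLM calls, the critic is reduced to ranking nodes by the fraction of visible tests passed, and names such as `Node`, `run_tests`, and `code_tree_search` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Tests = List[Tuple[int, int]]  # visible tests as (argument, expected result) pairs


@dataclass
class Node:
    code: str        # candidate program source
    strategy: str    # strategy this candidate descends from
    depth: int = 0
    score: float = 0.0
    children: List["Node"] = field(default_factory=list)


def run_tests(code: str, tests: Tests) -> float:
    """Execution feedback: fraction of visible tests the candidate passes."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # toy only; real systems must sandbox generated code
        solve = namespace["solve"]
    except Exception:
        return 0.0
    passed = 0
    for arg, expected in tests:
        try:
            passed += int(solve(arg) == expected)
        except Exception:
            pass
    return passed / len(tests)


def thinker(problem: str) -> List[str]:
    """Stub Thinker: proposes candidate strategies (an LLM call in practice)."""
    return ["add", "multiply"]


def solver(problem: str, strategy: str) -> str:
    """Stub Solver: turns a strategy into code; both drafts are deliberately flawed."""
    if strategy == "add":
        return "def solve(x):\n    return x + x + 1\n"
    return "def solve(x):\n    return x * 3\n"


def debugger(node: Node, feedback: str) -> str:
    """Stub Debugger: applies one canned fix; a real one would use the feedback."""
    return node.code.replace(" + 1", "")


def code_tree_search(problem: str, tests: Tests,
                     max_depth: int = 2, budget: int = 10) -> Optional[Node]:
    root = Node(code="", strategy="root")
    for strategy in thinker(problem):                      # strategy generation
        child = Node(solver(problem, strategy), strategy, depth=1)
        child.score = run_tests(child.code, tests)         # execution feedback
        root.children.append(child)
    frontier = list(root.children)
    generated = len(frontier)
    best: Optional[Node] = None
    while frontier:
        # Simplified critic: always look at the highest-scoring open node next.
        frontier.sort(key=lambda n: n.score, reverse=True)
        node = frontier.pop(0)
        if best is None or node.score > best.score:
            best = node
        if node.score == 1.0:                              # accept
            return node
        if node.depth >= max_depth or generated >= budget:
            continue                                       # abandon this branch
        refined = Node(debugger(node, f"pass rate {node.score:.2f}"),
                       node.strategy, depth=node.depth + 1)
        refined.score = run_tests(refined.code, tests)     # refine and re-score
        node.children.append(refined)
        frontier.append(refined)
        generated += 1
    return best


result = code_tree_search("double the input", [(0, 0), (1, 2), (2, 4), (5, 10)])
print(result.strategy, result.depth, result.score)
```

In this toy run the search first tries to refine the "multiply" branch because it scores higher initially, abandons it at the depth limit, and then repairs the "add" branch. That is a small-scale picture of why keeping several strategies alive in a tree can help when the most promising first draft turns out to be a dead end.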

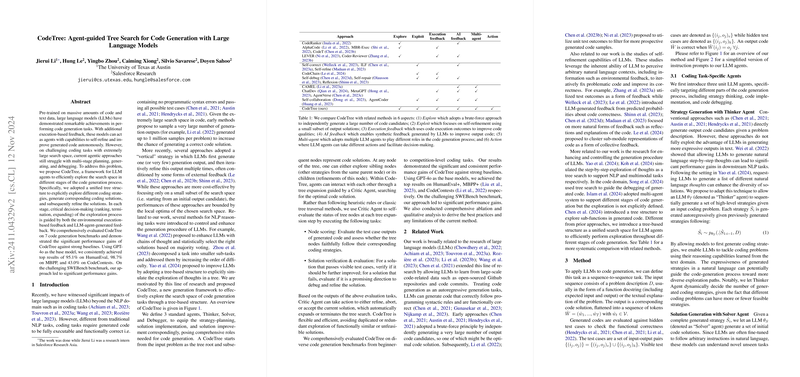

Evaluation and Results

The paper evaluates CodeTree on seven benchmarks, including HumanEval, MBPP, CodeContests, and SWEBench, and reports gains over conventional baselines. It reaches pass@1 scores of 95.1% on HumanEval and 98.7% on MBPP, with the gains most pronounced on tasks with large search spaces. The Critic Agent's verification and scoring of candidates is highlighted as important for keeping performance consistent.
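For readers unfamiliar with the metric, pass@k is the probability that at least one of k sampled programs passes all tests for a problem, and pass@1 is the special case of a single submission. The sketch below uses the standard unbiased estimator introduced alongside HumanEval (Chen et al., 2021); the analysis above does not state exactly how the CodeTree authors computed their figures, so treat this as background rather than their evaluation code. The function name `pass_at_k` is an illustrative choice.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of programs sampled for the problem
    c: number of those programs that pass all tests
    k: number of submissions allowed
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: every draw of k contains a correct one.
        return 1.0
    # 1 minus the probability that all k drawn samples are incorrect.
    return 1.0 - comb(n - c, k) / comb(n, k)


# With k = 1 the estimate reduces to the fraction of correct samples, c / n.
print(pass_at_k(n=10, c=3, k=1))
```

A benchmark-level score is then the mean of this per-problem value over all problems.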

Practical and Theoretical Implications

Practically, CodeTree's modular architecture suggests it could be adapted to more complex code-related tasks such as real-world software engineering, potentially improving automated debugging and program synthesis. Theoretically, multi-agent collaboration over a hierarchically structured search space points to a new research direction for AI-driven code generation. The same approach could inform how intricate computational tasks are decomposed in AI systems beyond coding.

Future Directions

Several avenues exist for future research:

- Broadening the scope of the underlying LLMs to handle more dynamic and ambiguous programming domains.

- Extending the framework's adaptability to non-functional aspects of code, such as optimization and readability.

- Improving multi-agent coordination to streamline processes such as data retrieval and problem decomposition.

In conclusion, CodeTree is a capable framework that combines LLMs’ capabilities with structured exploration and strategic planning for code generation. It targets both functional correctness and scalability, and it offers promising directions for practical use in software development as well as for theoretical work on AI-based computational problem-solving.