Analyzing Adaptive Fine-Tuning Techniques for LLMs in Scientific Problem-Solving

The paper "Adapting While Learning: Grounding LLMs for Scientific Problems with Intelligent Tool Usage Adaptation" by Bohan Lyu et al. proposes a fine-tuning methodology for improving how LLMs solve scientific problems. It addresses a common failure mode: LLMs, such as those built on GPT architectures, handle straightforward problems competently but struggle with complex ones, often producing confident but incorrect output, known as hallucinations.

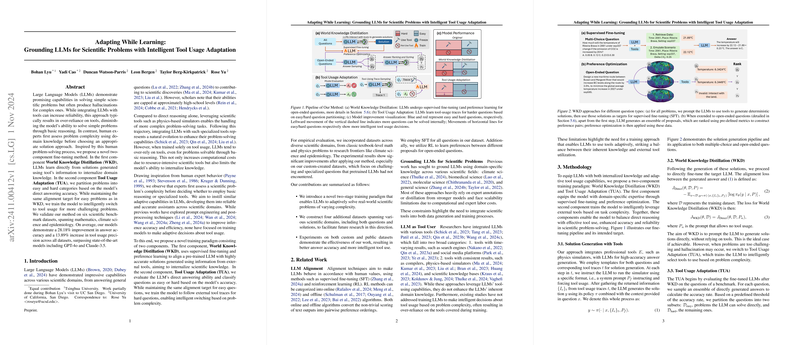

The authors observe that although LLMs can benefit from external tools, over-reliance on those tools can erode a model's ability to solve basic problems on its own. In response, the paper introduces a two-component fine-tuning framework: World Knowledge Distillation (WKD) and Tool Usage Adaptation (TUA). The framework aims to mirror how human experts work, training the model to assess a problem's complexity before deciding whether a tool is needed.

Methodological Insights

World Knowledge Distillation (WKD):

The first component, WKD, instills domain-specific knowledge in the LLM through supervised fine-tuning. Accurate solutions obtained with tool assistance serve as training targets, so the model learns to internalize that knowledge and apply it directly. The loss function pushes the model's responses toward these reference solutions, so that it can reproduce them without depending on tools.
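The WKD objective described above can be written as a standard supervised fine-tuning loss. The following is a sketch under assumed notation, not necessarily the paper's exact formulation: $\pi_\theta$ is the model being fine-tuned, $\mathcal{D}$ is the set of training problems, and $y^{\text{tool}}(x)$ is the tool-derived reference solution for problem $x$.

```latex
\mathcal{L}_{\mathrm{WKD}}(\theta)
  = -\,\mathbb{E}_{x \sim \mathcal{D}}
    \left[ \log \pi_\theta\!\left( y^{\text{tool}}(x) \mid x \right) \right]
```

Minimizing this negative log-likelihood raises the probability the model assigns to the tool-verified solution when it is given only the problem statement.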

Tool Usage Adaptation (TUA):

For the second component, TUA, the problems in the dataset are partitioned into "easy" and "hard" categories according to how well the model solves them unaided, measured by whether it generates correct answers without tools. Easy problems keep the same training objective as before, in which the model answers directly, while hard problems are trained toward solution paths that invoke tools. The model thereby learns to decide when tool use is warranted.
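The partitioning step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the problem schema, the `answer_without_tools` callable, the exact-match check, the number of samples, and the 0.5 accuracy threshold are all hypothetical choices made for the example.

```python
from typing import Callable, Dict, List, Tuple

Problem = Dict[str, str]  # hypothetical schema: {"question": ..., "answer": ...}


def partition_by_difficulty(
    problems: List[Problem],
    answer_without_tools: Callable[[str], str],
    n_samples: int = 4,
    threshold: float = 0.5,
) -> Tuple[List[Problem], List[Problem]]:
    """Split problems into (easy, hard) by the model's unaided accuracy."""
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    easy: List[Problem] = []
    hard: List[Problem] = []
    for problem in problems:
        # Sample several tool-free answers and count exact matches.
        n_correct = sum(
            answer_without_tools(problem["question"]) == problem["answer"]
            for _ in range(n_samples)
        )
        accuracy = n_correct / n_samples
        # Assumed rule: accuracy at or above the threshold counts as "easy".
        if accuracy >= threshold:
            easy.append(problem)
        else:
            hard.append(problem)
    return easy, hard


def build_tua_examples(
    easy: List[Problem], hard: List[Problem]
) -> List[Dict[str, str]]:
    """Label each problem with the behavior the model is trained toward."""
    examples = [{"question": p["question"], "mode": "direct"} for p in easy]
    examples += [{"question": p["question"], "mode": "use_tool"} for p in hard]
    return examples
```

In practice `answer_without_tools` would wrap a sampling call to the fine-tuned model with tools disabled, and the exact-match comparison would likely be replaced by a domain-appropriate correctness check such as a numeric tolerance.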

Empirical Validation

The authors validate their framework on six scientific datasets spanning domains such as mathematics, climate science, and epidemiology. They report an average improvement of 28.18% in answer accuracy and 13.89% in tool usage precision, with the fine-tuned models surpassing state-of-the-art models such as GPT-4o and Claude-3.5. These results indicate that the methodology mitigates over-reliance on tools without diminishing reasoning capabilities.
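To make the idea of scoring tool-usage decisions concrete, here is one plausible way to do it. This is an illustrative assumption, not necessarily the metric the paper reports: the function name, the record format, and the equal weighting of easy and hard problems are all hypothetical.

```python
from typing import List, Tuple


def tool_decision_accuracy(records: List[Tuple[bool, bool]]) -> float:
    """Score tool-usage decisions.

    Each record is (is_hard, used_tool). A decision counts as correct when
    the model skips tools on an easy problem or uses them on a hard one.
    The easy and hard groups are weighted equally (an assumed choice).
    """
    easy_decisions = [used for is_hard, used in records if not is_hard]
    hard_decisions = [used for is_hard, used in records if is_hard]
    # Fraction of easy problems answered without tools.
    easy_ok = (
        sum(not used for used in easy_decisions) / len(easy_decisions)
        if easy_decisions
        else 0.0
    )
    # Fraction of hard problems answered with tools.
    hard_ok = sum(hard_decisions) / len(hard_decisions) if hard_decisions else 0.0
    return 0.5 * (easy_ok + hard_ok)
```

A model that always calls tools would score 0.5 under this sketch, as would one that never does, so only selective tool use is rewarded.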

Implications and Future Directions

The research shows how to build more adaptable LLMs that perform efficiently across varied scientific contexts. This has practical value for fields whose AI deployments require robust, autonomous problem-solving. The paper also challenges the current paradigm by proposing a training mechanism that fosters tool usage decisions akin to those made by human experts.

Future research could explore cross-domain training consistency and adaptive tool use at finer granularity, both of which could extend what LLMs can achieve independently. Extending these methods to multi-modal inputs and outputs could also broaden their applicability to real-world scenarios that require more complex forms of data interpretation.

In summary, the paper advances the methodology for training LLMs on scientific tasks by showing how to balance a model's internalized knowledge with external tool usage to achieve better problem-solving outcomes.