A Survey on Speech LLMs

This paper examines how large language models (LLMs) are being integrated into Spoken Language Understanding (SLU). The authors analyze Speech LLMs in terms of their evolution, architecture, training strategies, and performance on a range of SLU tasks, with a focus on using LLMs to improve audio feature extraction and multimodal fusion.

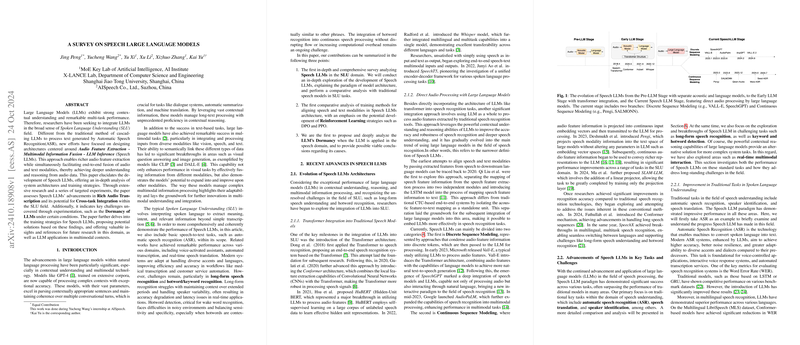

Architectural Developments

The paper breaks the Speech LLM architecture into three stages: Audio Feature Extraction, Multimodal Information Fusion, and LLM Inference. Together these stages integrate the audio and text modalities so that the model can reason over both. Traditional approaches simply cascade an Automatic Speech Recognition (ASR) system in front of a text model; Speech LLMs instead process audio directly. They do this through methods such as Direct Projection and Token Mapping, which bring audio features into the text feature space of the LLM.
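
To make the two fusion methods concrete, here is a minimal sketch in plain Python. It reflects one common reading of the terms and is not the survey's own implementation: Direct Projection is shown as a linear map from audio-encoder features into the LLM embedding space, and Token Mapping as nearest-neighbor lookup in a codebook whose ids are appended after the text vocabulary. All names, dimensions, and values (`project`, `map_to_tokens`, the toy weights, the codebook, `text_vocab_size`) are hypothetical and chosen only for illustration.

```python
def project(frames, weight, bias):
    """Direct Projection (sketch): linear map from d_audio to d_llm.

    frames: list of T audio feature vectors, each of length d_audio
    weight: d_llm rows, each of length d_audio (hypothetical connector weights)
    bias:   list of length d_llm
    """
    return [
        [sum(x * w for x, w in zip(frame, row)) + b for row, b in zip(weight, bias)]
        for frame in frames
    ]


def nearest_code(frame, codebook):
    """Index of the codebook entry closest to `frame` (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(frame, codebook[i])),
    )


def map_to_tokens(frames, codebook, text_vocab_size):
    """Token Mapping (sketch): turn each frame into a discrete token id.

    Audio token ids are offset by text_vocab_size, assuming the LLM vocabulary
    has been extended with one new entry per codebook vector.
    """
    return [text_vocab_size + nearest_code(f, codebook) for f in frames]


# Toy inputs: T=2 audio frames with d_audio=2, projected to d_llm=3.
frames = [[1.0, 0.0], [0.0, 2.0]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bias = [0.0, 0.0, 0.5]

# Direct Projection: projected audio vectors are prepended to the text embeddings.
audio_embeds = project(frames, weight, bias)
text_embeds = [[0.1, 0.2, 0.3]]  # stand-in for one embedded prompt token
llm_input = audio_embeds + text_embeds

# Token Mapping: frames become ids that sit after a 100-entry text vocabulary.
codebook = [[1.0, 0.0], [0.0, 1.0]]
audio_token_ids = map_to_tokens(frames, codebook, text_vocab_size=100)
```

In the first path the LLM receives continuous vectors alongside its text embeddings; in the second it receives ordinary token ids, so the audio passes through the same embedding table as text. In a real system the projection weights and the codebook would be learned rather than fixed by hand.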

Training Strategies

Three primary training strategies are outlined: pretraining, supervised fine-tuning (SFT), and reinforcement learning (RL). Pretraining builds broad knowledge from unlabeled data, while SFT adapts the model to specific tasks. RL is noted as an underexplored option that could help optimize cross-task performance in SLU.

Performance and Challenges

The paper reports strong results on traditional tasks such as ASR and speech translation, where Speech LLMs often outperform existing models. It also stresses open challenges such as LLM dormancy and high computational cost. Dormancy refers to LLMs underutilizing their capabilities when applied to speech tasks, which the authors take as a sign that audio-text alignment needs further optimization.

Future Directions

The survey concludes by proposing future directions centered on refining modality alignment and widening the application scope of Speech LLMs. The authors suggest augmenting token spaces and introducing more dynamic reinforcement learning techniques. They also foresee Speech LLMs serving as components of larger multimodal systems, where their contextual reasoning could improve interaction and processing.

Implications

On the practical side, the paper's findings point to pathways for building more efficient and effective SLU systems. On the theoretical side, they deepen our understanding of multimodal fusion in LLMs and motivate new architectural adaptations. The proposed solutions target current limitations, with the aim of improving model performance and utility across applications.

Overall, this survey provides a well-rounded analysis of Speech LLM advancements and sets a foundation for future research within SLU and multimodal contexts.