An Overview of "A Little Help Goes a Long Way: Efficient LLM Training by Leveraging Small LMs"

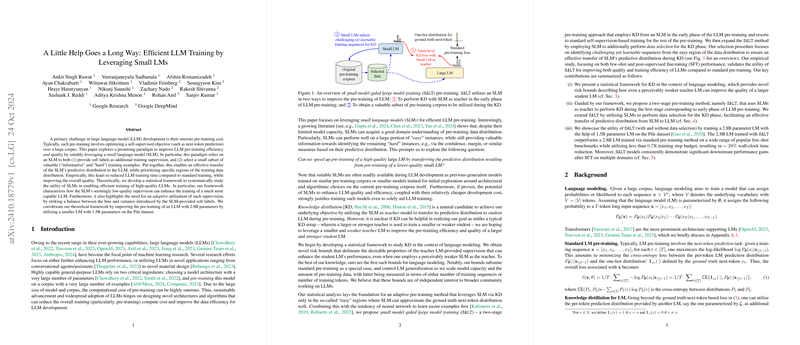

The paper addresses the computational cost of pre-training large language models (LLMs) by using small language models (SLMs) to improve both the efficiency and the quality of training. In the proposed methodology, SLMs provide soft labels and select informative training examples, which makes the transfer of information to the LLM more effective.

The researchers support this paradigm with both empirical results and a theoretical framework. Empirically, the approach reduces training time while improving model quality. The SLM-assisted training method is tested by training a 2.8B parameter LLM with a 1.5B parameter SLM on the Pile dataset, and it outperforms conventional training.

Methodology

- Soft Labels and Data Selection: SLMs are employed to generate soft labels, offering supplementary supervision during training. Additionally, these models assist in identifying valuable subsets of training data that are both informative and challenging.

- Statistical Framework: The theoretical model describes how SLM-generated supervision, though potentially low in quality, can still help training by striking a favorable balance between bias and variance.

- Adaptive Utilization: The importance of adaptively utilizing SLM-derived supervision is emphasized, suggesting that the focus should be on scenarios where SLM predictions align closely with the true data distribution.

- Knowledge Distillation (KD): The paper extends the classic teacher-student setup of KD, showing that training improves even when a weaker teacher (the SLM) guides a stronger student (the LLM).
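
The soft-label and data-selection ideas above can be made concrete with a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: the function names, the mixing weight `alpha`, the `temperature` parameter, and the "keep the highest SLM-loss examples" rule are all hypothetical choices made for this example.

```python
import numpy as np

def log_softmax(logits):
    """Numerically stable log-softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))

def kd_token_loss(student_logits, teacher_logits, labels, alpha=0.5, temperature=1.0):
    """Per-token loss mixing hard-label cross-entropy with an SLM soft-label term.

    student_logits, teacher_logits: arrays of shape (num_tokens, vocab_size)
    labels: integer array of shape (num_tokens,)
    alpha: assumed weight on the soft-label term (0 recovers standard training)
    """
    rows = np.arange(len(labels))
    # Standard next-token cross-entropy against the ground-truth labels.
    hard_ce = -log_softmax(student_logits)[rows, labels]
    # Soft labels: the SLM (teacher) predictive distribution.
    teacher_probs = np.exp(log_softmax(teacher_logits / temperature))
    # Cross-entropy of the student against the teacher distribution.
    soft_ce = -(teacher_probs * log_softmax(student_logits / temperature)).sum(axis=-1)
    return (1.0 - alpha) * hard_ce + alpha * soft_ce

def select_examples(slm_losses, keep_fraction=0.5):
    """Hypothetical selection rule: keep the examples the SLM finds hardest."""
    k = max(1, int(round(keep_fraction * len(slm_losses))))
    # Indices sorted by SLM loss, largest first, truncated to the top k.
    return np.argsort(slm_losses)[::-1][:k]
```

Setting `alpha=0` reduces the loss to ordinary cross-entropy, so the soft-label term can be switched off without changing the rest of the training loop. The selection rule is only one plausible reading of "informative and challenging"; the overview does not specify the paper's exact criterion.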

Key Findings

- The empirical results show that the proposed method reduces LLM training time while improving performance metrics such as few-shot accuracy.

- Adaptive use of SLM supervision lets training prioritize easier examples at first, guided by the SLM, so that the LLM can shift its focus to harder examples in later training phases.

- Using SLMs during the early stages of LLM pre-training helps the LLM capture simpler patterns quickly, reducing overall computational demands.
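
One way to picture the early-stage use of SLM supervision is a weight on the soft-label term that is active only during an initial phase of pre-training. The sketch below assumes a linear decay to zero over the first `kd_steps` steps; the function name, the linear shape, and the default `alpha_max` are illustrative assumptions, since this overview does not state the schedule the paper uses.

```python
def distillation_weight(step, kd_steps, alpha_max=0.5):
    """Assumed schedule: soft-label weight decays linearly to zero.

    step: current training step (0-indexed)
    kd_steps: number of initial steps during which SLM supervision is used
    alpha_max: assumed weight on the SLM soft-label term at step 0
    """
    if kd_steps <= 0 or step >= kd_steps:
        # After the early phase, training falls back to the standard objective.
        return 0.0
    return alpha_max * (1.0 - step / kd_steps)
```

Under these assumptions, the returned value would serve as the weight on the SLM term at each step, so the SLM is only queried while the weight is nonzero and the remainder of training proceeds as conventional pre-training.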

Implications

The research offers a pragmatic approach to LLM training, pointing to a way of reducing computation without sacrificing model quality. This matters given the substantial resources that LLM development typically requires.

Future Directions: Using SLMs in this capacity invites further work along the same lines. One promising avenue is the search for novel architectures that inherently combine the efficiency of SLMs with the capabilities of LLMs.

In summary, this paper demonstrates how small models, traditionally overshadowed by their larger counterparts, can play a pivotal role in making LLM training more efficient. By leveraging concise, targeted supervision, it presents a promising technique and opens the way for further exploration of scalable AI development practices.