Overview of "LEGO: LLM Building Blocks"

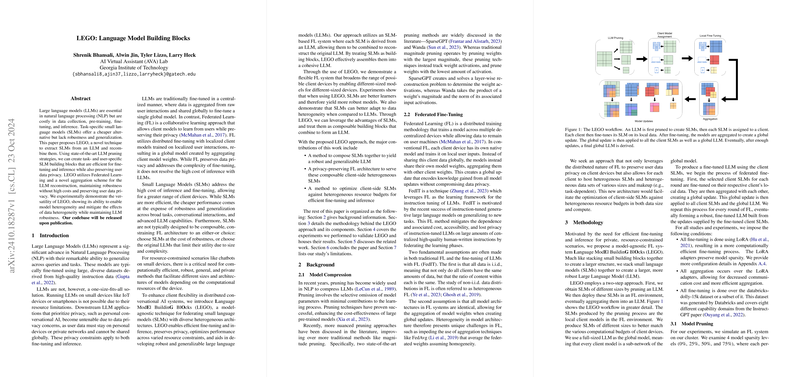

The paper "LEGO: LLM Building Blocks" presents a methodology for making LLMs more practical to use by creating efficient, task-specific small language models (SLMs). Its system, LEGO, extracts smaller, dedicated models from an LLM and later recombines them, aiming to preserve robustness, efficiency, and privacy across widely differing computational environments. The authors focus on two central issues: the computational and resource costs of LLMs, and the need to preserve data privacy in applications such as personal conversational AI.

Technical Approach

The paper's core contribution is a technique that combines federated learning (FL) with a new aggregation method. SLMs are obtained by pruning an LLM, and these SLMs then serve as modular building blocks, hence the name LEGO. In a federated setting, each SLM can be sized for the device that hosts it, and the fine-tuned SLMs are aggregated to reconstruct the full LLM collectively, preserving model integrity and user privacy.
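
To make the pruning step concrete, here is a minimal NumPy sketch of a Wanda-style mask for a single linear layer; Wanda is one of the pruning methods LEGO builds on. Wanda scores each weight by its magnitude times the L2 norm of the corresponding input feature over a small calibration set, then drops the lowest-scoring weights within each output row. The function name `wanda_mask`, the random calibration data, and the 50% sparsity are illustrative assumptions; this is not code from the LEGO paper, and it omits details such as collecting calibration activations layer by layer through a real transformer.

```python
import numpy as np

def wanda_mask(weight: np.ndarray, calib_inputs: np.ndarray, sparsity: float) -> np.ndarray:
    """Return a boolean keep-mask for one linear layer using the Wanda score.

    weight:       (out_features, in_features)
    calib_inputs: (num_tokens, in_features) activations feeding this layer
    sparsity:     fraction of weights to remove in each output row
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    # Wanda score: |W_ij| * ||X_j||_2, with the L2 norm taken over calibration tokens.
    feature_norms = np.linalg.norm(calib_inputs, axis=0)
    scores = np.abs(weight) * feature_norms[None, :]
    n_prune = int(weight.shape[1] * sparsity)
    mask = np.ones_like(weight, dtype=bool)
    if n_prune > 0:
        # Per-output-row comparison group: drop the lowest-scoring inputs in each row.
        idx = np.argsort(scores, axis=1)[:, :n_prune]
        np.put_along_axis(mask, idx, False, axis=1)
    return mask

# Hypothetical toy layer and calibration batch.
rng = np.random.default_rng(0)
W = rng.normal(size=(4, 8))
X = rng.normal(size=(16, 8))
mask = wanda_mask(W, X, sparsity=0.5)
slm_weight = W * mask          # the pruned layer of one SLM "building block"
print(mask.sum(axis=1))        # 4 weights retained in every row
```

Scoring with activation norms rather than raw magnitude lets a small weight survive if its input feature is consistently large, which is why Wanda needs no retraining to choose what to keep.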

- Model Pruning and Aggregation: LEGO uses pruning methods such as SparseGPT and Wanda to create SLMs that can perform specific tasks under practical resource constraints. It pairs them with an aggregation scheme, HeteAgg, that accommodates heterogeneous SLMs, which is crucial when incorporating user-specific data without resorting to traditional centralized data collection.

- Federated Fine-Tuning: Consistent with FL principles, LEGO treats pruned models as units that are fine-tuned locally on task-specific data, so raw data never leaves the device. The authors argue that this keeps federated training efficient while achieving robust performance without data sharing.
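
The pruning, local fine-tuning, and aggregation steps in the list can be sketched as one federated round on a toy linear model. Each client holds a boolean mask defining its SLM and trains only the weights that mask keeps; the server then averages each weight over just the clients whose SLM retains it, and weights that no client kept fall back to the current global value. This masked averaging is an assumption standing in for HeteAgg, whose exact rule may differ, and `local_finetune`, `hetero_aggregate`, and the least-squares objective are hypothetical placeholders for real LLM fine-tuning.

```python
import numpy as np

def local_finetune(w, mask, X, y, lr=0.1, steps=20):
    """Toy local training: gradient descent on mean squared error,
    updating only the weights kept by this client's mask (hypothetical)."""
    w = w * mask  # start from the client's SLM: pruned weights are zero
    for _ in range(steps):
        grad = X.T @ (X @ w - y) / len(y)
        w = w - lr * grad * mask  # pruned weights stay at zero
    return w

def hetero_aggregate(global_w, client_ws, masks, num_samples):
    """Masked weighted average (assumed stand-in for HeteAgg): each weight is
    averaged only over clients whose SLM keeps it, weighted by sample count."""
    num = np.zeros_like(global_w)
    den = np.zeros_like(global_w)
    for w, m, n in zip(client_ws, masks, num_samples):
        num += n * w * m
        den += n * m
    covered = den > 0
    new_w = global_w.copy()  # weights no client kept retain the global value
    new_w[covered] = num[covered] / den[covered]
    return new_w

rng = np.random.default_rng(0)
d = 6
global_w = rng.normal(size=d)
# Two clients with overlapping but different SLMs; no client keeps weight 5.
masks = [np.array([1, 1, 1, 0, 0, 0], dtype=bool),
         np.array([0, 0, 1, 1, 1, 0], dtype=bool)]
client_ws = []
for m in masks:
    X = rng.normal(size=(32, d))  # private client data, never sent to the server
    y = X @ np.ones(d)
    client_ws.append(local_finetune(global_w, m, X, y))
new_global = hetero_aggregate(global_w, client_ws, masks, num_samples=[32, 32])
```

Only the updated weights travel to the server, which is what lets clients with different SLM sizes contribute to one reconstructed model without sharing data.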

Key Experimental Findings

The experiments support LEGO's approach on several fronts. The authors report that LEGO balances the efficiency of SLMs against the robustness of LLMs: task-heterogeneous SLMs retain competitive performance when recombined into the original LLM. LEGO also copes better with data heterogeneity, with the authors reporting improved learning compared with conventional Federated Instruction Tuning (FedIT).

The results also show that LEGO adapts to devices with varying computational capacities: SLMs sized to each device's available hardware can be integrated without losing accuracy. This matters for deploying conversational AI across a spectrum of devices, from smartphones to advanced IoT equipment.
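
One simple way to picture sizing an SLM to a device is to pick the smallest sparsity at which the retained parameters fit the device's memory budget. The helper `sparsity_for_budget`, the 7B-parameter fp16 model, and the device budgets below are hypothetical. The sketch assumes memory scales linearly with retained parameters, which holds for structured pruning or an idealized sparse format but not for unstructured masks stored densely; the paper's actual policy for assigning SLM sizes may differ.

```python
def sparsity_for_budget(num_params, bytes_per_param, memory_budget_bytes, max_sparsity=0.9):
    """Smallest sparsity at which the retained weights fit the memory budget.

    Assumption: memory is proportional to the number of retained parameters
    (no sparse-index overhead, activations and KV cache ignored).
    """
    full_bytes = num_params * bytes_per_param
    if full_bytes <= memory_budget_bytes:
        return 0.0  # the full model already fits; no pruning needed
    needed = 1.0 - memory_budget_bytes / full_bytes
    if needed > max_sparsity:
        raise ValueError("device cannot host an SLM within the allowed sparsity")
    return needed

# Hypothetical devices hosting SLMs cut from a 7B-parameter fp16 model (14 GB).
devices = {"phone": 4e9, "laptop": 8e9, "workstation": 24e9}
for name, budget in devices.items():
    print(name, round(sparsity_for_budget(7e9, 2, budget), 3))
# phone 0.714
# laptop 0.429
# workstation 0.0
```

The `max_sparsity` cap reflects that very aggressive pruning tends to degrade quality, so a device needing more pruning than the cap is rejected rather than given a heavily damaged model.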

Implications and Future Directions

LEGO has implications for both theory and practice. By enabling scalable, privacy-preserving, and efficient LLM systems, it could help make sophisticated AI accessible across many sectors, including mobile applications, personal assistants, and secure enterprise solutions, potentially lowering barriers to entry and operational costs for deploying AI models in real-world settings.

From a theoretical standpoint, the paper challenges the assumption that monolithic models are the efficient default, suggesting a pivot toward modular architectures that can better handle heterogeneous datasets and user requirements. The combination of pruning, federated learning, and aggregation paves the way for future research on model efficiency and on scaling LLMs to more diverse application contexts.

The research opens several pathways for further investigation. Future studies could refine the pruning and aggregation methods and probe the limits of SLM adaptability and robustness. Cross-disciplinary research could also clarify how these adaptive models behave in broader, dynamic environments, especially with the rise of edge computing and decentralized AI systems.

In summary, LEGO presents a promising framework for meeting the growing need for efficient, scalable, and privacy-conscious LLMs, and sets a precedent for federated AI systems.