Evaluating Multilingual Capabilities of LLM Judges and Reward Models with MM-Eval

This paper introduces MM-Eval, a multilingual meta-evaluation benchmark designed to assess how reliably and effectively LLMs act as evaluators in non-English contexts. MM-Eval addresses a gap in existing meta-evaluation benchmarks, which focus predominantly on English and therefore offer limited insight into multilingual evaluation capabilities.

Motivation and Design of MM-Eval

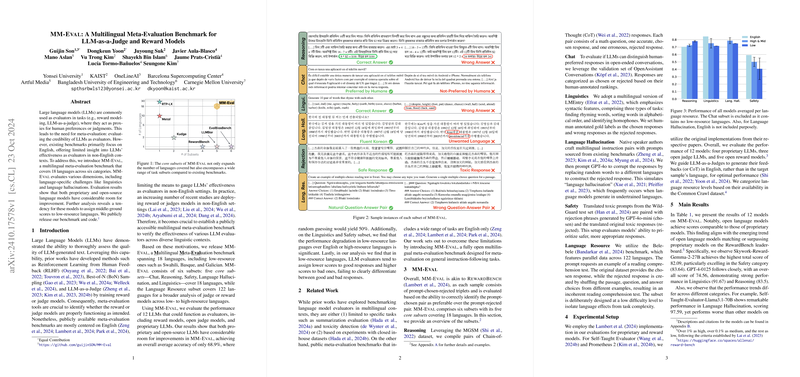

LLMs are now widely used as evaluators, both as direct judges (the LLM-as-a-Judge setup) and as reward models in reinforcement learning frameworks. How well they perform these roles in non-English settings has been far less studied, which motivates a broader benchmark. MM-Eval covers 18 languages across six subsets: Chat, Reasoning, Safety, Linguistics, Language Hallucination, and Language Resource. It includes low-resource languages, and the Language Resource subset extends coverage to 122 languages for broader analysis.

Evaluation Insights

The paper evaluates 12 LLMs, both proprietary and open-source, on 4,981 instances from the MM-Eval benchmark. The models reach an average accuracy of 68.9%, which leaves considerable room for improvement. Proprietary and open-source models perform comparably, underscoring the competitiveness of open models. Both groups, however, struggle when assessing non-English text, and low-resource languages in particular. The drops are most pronounced in the Safety and Linguistics subsets for low-resource languages, pointing to weaknesses in handling fine-grained linguistic detail.
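To make the accuracy figures concrete, the following is a minimal sketch of how a per-subset or per-language accuracy could be computed. It assumes each instance pairs a better response with a worse one and that the judge must pick between them; the summary above does not spell out the instance format, so the `PairInstance` fields, the `Judge` signature, and `accuracy_by_group` are hypothetical names used only for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, Hashable, Iterable


@dataclass(frozen=True)
class PairInstance:
    # Hypothetical record layout; field names are illustrative, not MM-Eval's schema.
    subset: str
    language: str
    prompt: str
    chosen: str    # response labeled as the better one
    rejected: str  # response labeled as the worse one


# Assumed judge interface: given (prompt, response_a, response_b), return "a" or "b".
Judge = Callable[[str, str, str], str]


def accuracy_by_group(
    instances: Iterable[PairInstance],
    judge: Judge,
    key: Callable[[PairInstance], Hashable],
) -> Dict[Hashable, float]:
    """Fraction of instances, per group, where the judge prefers the better response."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for inst in instances:
        group = key(inst)
        total[group] += 1
        # The better response is always passed as "a" here for brevity;
        # a real harness would shuffle the order to control for position bias.
        if judge(inst.prompt, inst.chosen, inst.rejected) == "a":
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}
```

Grouping with `key=lambda inst: inst.subset` gives a per-subset breakdown, and `key=lambda inst: inst.language` gives a per-language one, which is the kind of comparison needed to see where low-resource languages fall behind.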

Implications and Future Directions

The findings suggest that even state-of-the-art LLMs need stronger multilingual evaluation capabilities. A key problem is their tendency to assign undifferentiated scores in low-resource languages, so that good and bad responses receive similar ratings. In addition, the feedback the models generate often contains hallucinations, which leads to flawed judgments. Further research should therefore focus on training LLMs on diverse, high-quality multilingual corpora and on accounting for language-specific nuances to improve their evaluation ability.
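One way to quantify undifferentiated scoring is to look at the gap between the scores a judge gives to the better and the worse response. The sketch below is an illustration under stated assumptions, not the paper's analysis: it assumes the judge emits a numeric score for each response, and `differentiation_by_language`, `tie_rate`, and `mean_margin` are hypothetical names.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

# Assumed input: (language, score given to the better response,
#                 score given to the worse response).
ScoredPair = Tuple[str, float, float]


def differentiation_by_language(
    pairs: Iterable[ScoredPair],
) -> Dict[str, Dict[str, float]]:
    """Per language: share of tied scores and mean (better - worse) score margin."""
    margins: Dict[str, List[float]] = defaultdict(list)
    for language, better_score, worse_score in pairs:
        margins[language].append(better_score - worse_score)
    return {
        language: {
            # A tie means the judge did not separate the two responses at all.
            "tie_rate": sum(1 for m in values if m == 0) / len(values),
            # A margin near zero means the scores carry little signal.
            "mean_margin": mean(values),
        }
        for language, values in margins.items()
    }
```

Under these assumptions, a language with a high tie rate and a margin close to zero is one where the judge's scores say little about response quality, even if it never ranks the worse response higher.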

Looking forward, MM-Eval lays the groundwork for more comprehensive frameworks that address emerging challenges such as code-switching and cultural context. Future work should aim for balanced competency across languages so that evaluators serve the world's linguistic diversity rather than a handful of high-resource languages.

Conclusion

MM-Eval provides a tool for evaluating LLM evaluators across varied linguistic contexts, identifying critical gaps and pointing to where improvement is needed. The benchmark serves a practical purpose, supporting the development of more reliable LLM evaluators, and a research purpose, enabling closer study of how LLMs behave across languages. As the field advances, MM-Eval can help guide work in cross-lingual natural language processing.