JMMMU: A Japanese Massive Multi-discipline Multimodal Understanding Benchmark for Culture-aware Evaluation

This essay gives an overview of the paper "JMMMU: A Japanese Massive Multi-discipline Multimodal Understanding Benchmark for Culture-aware Evaluation". The paper introduces JMMMU, a benchmark designed to evaluate Large Multimodal Models (LMMs) on Japanese-language tasks, with explicit attention to Japanese cultural context.

Objective and Methodology

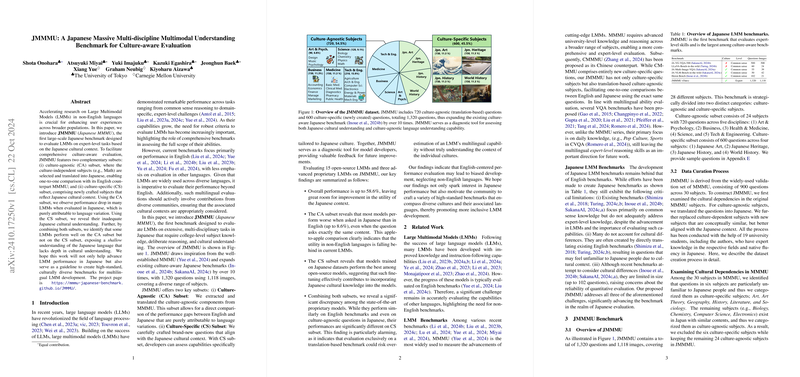

The primary objective of JMMMU is to accelerate research on LMMs in non-English languages, ultimately enhancing user experiences across diverse populations. The benchmark features two distinct subsets to facilitate a comprehensive culture-aware evaluation:

- Culture-Agnostic (CA) Subset: This subset covers subjects that do not depend on cultural context (e.g., Math). Its questions are translated into Japanese, so results can be compared directly with the English counterparts. Because the content is the same in both languages, any performance difference can be attributed purely to language.

- Culture-Specific (CS) Subset: Tailored to reflect the Japanese cultural context, this subset comprises newly crafted subjects such as Japanese Art and History. It aims to assess cultural understanding.
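The two-subset design can be illustrated with a minimal sketch that scores a model separately on each subset. The record layout, field names, and sample rows below are assumptions made for illustration; they are not taken from the JMMMU dataset or its evaluation code.

```python
from collections import defaultdict

# Hypothetical records: field names and values are illustrative only,
# not the actual JMMMU schema or data.
records = [
    {"subset": "CA", "subject": "Math", "answer": "B", "prediction": "B"},
    {"subset": "CA", "subject": "Math", "answer": "A", "prediction": "C"},
    {"subset": "CS", "subject": "Japanese Art", "answer": "D", "prediction": "D"},
    {"subset": "CS", "subject": "Japanese History", "answer": "A", "prediction": "B"},
    {"subset": "CS", "subject": "Japanese History", "answer": "C", "prediction": "C"},
]


def accuracy_by_subset(rows):
    """Return {subset: fraction of rows whose prediction matches the answer}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for row in rows:
        total[row["subset"]] += 1
        if row["prediction"] == row["answer"]:
            correct[row["subset"]] += 1
    return {name: correct[name] / total[name] for name in total}


scores = accuracy_by_subset(records)
for name, value in sorted(scores.items()):
    print(f"{name}: {value:.1%}")
```

Reporting the two scores separately, rather than as one pooled number, is what lets language effects (CA) be read apart from cultural knowledge (CS).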

Key Findings

An evaluation of 15 open-source LMMs and three advanced proprietary LMMs yields several key findings:

- Performance Potential: The best overall score is 58.6%, indicating significant room for improvement in processing Japanese contexts.

- Language Variation Impact: On the CA subset, LMMs show a performance drop of up to 8.6% when evaluated in Japanese rather than English. Since the questions are otherwise identical, this drop reflects the difficulty introduced by the language alone.

- Cultural Understanding: Models trained on Japanese datasets outperform others on the CS subset, which suggests that fine-tuning on Japanese data is an effective way to incorporate cultural knowledge.

- Depth of Understanding: Combining both subsets exposes discrepancies among state-of-the-art proprietary models. Some models perform well on the CA subset but not on the CS subset, which indicates that they handle the Japanese language without a matching depth of cultural understanding.
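The comparisons behind the last three findings reduce to simple differences between scores. The sketch below shows one way to compute them; the model names, scores, and the 10-point threshold are made-up placeholders, not results or criteria from the paper.

```python
# Made-up scores in percent; these are NOT results reported in the paper.
toy_scores = {
    "model_a": {"ca_english": 50.0, "ca_japanese": 45.0, "cs_japanese": 48.0},
    "model_b": {"ca_english": 60.0, "ca_japanese": 58.0, "cs_japanese": 40.0},
}


def language_drop(score):
    """Drop on CA questions when moving from English to Japanese (in points)."""
    return score["ca_english"] - score["ca_japanese"]


def culture_gap(score):
    """How far the CS score trails the CA score, both in Japanese (in points)."""
    return score["ca_japanese"] - score["cs_japanese"]


# Hypothetical threshold for calling a CA/CS gap "large"; an assumption
# chosen for this example only.
GAP_THRESHOLD = 10.0

drops = {name: language_drop(s) for name, s in toy_scores.items()}
flagged = [name for name, s in toy_scores.items() if culture_gap(s) > GAP_THRESHOLD]

print(drops)    # language drop per model
print(flagged)  # models strong on CA but weak on CS
```

In this toy data, `model_b` loses little when switching languages yet trails badly on culture-specific questions, which is the pattern the "Depth of Understanding" finding describes.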

Implications and Future Directions

These findings suggest that evaluating models only on English-centric benchmarks may bias development toward English and leave non-English performance neglected. The paper emphasizes the importance of creating culturally rich benchmarks to support a more inclusive approach to multilingual LMM development.

Looking forward, the paper encourages the creation of benchmarks similar to JMMMU for other languages and cultures, which could lead to more robust and culturally adaptable AI models.

In conclusion, JMMMU serves both as a tool for LMM developers and as a guideline for building diverse, high-quality benchmarks for multicultural AI systems. The work contributes to understanding LMM capabilities in non-English settings and sets a precedent for future work on culture-aware AI evaluation.