Revealing Hidden Bias in AI: Lessons from LLMs

The paper "Revealing Hidden Bias in AI: Lessons from LLMs" investigates bias in AI systems, specifically in LLMs used for recruitment. As LLMs become increasingly integrated into hiring processes, in tasks such as candidate report generation and resume analysis, the potential for AI-induced bias becomes a significant concern. The paper systematically examines several LLMs, including Claude 3.5 Sonnet, GPT-4o, Gemini 1.5, and Llama 3.1 405B, focusing on detecting biases related to gender, race, and age.

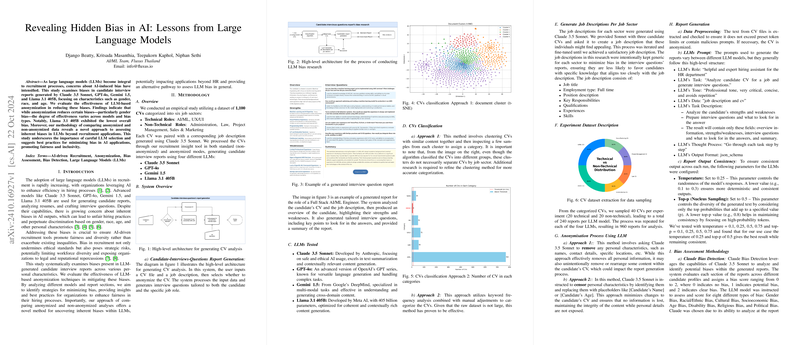

Methodological Insights

The research uses a dataset of 1,100 CVs across six job sectors, processed in both anonymized and non-anonymized modes, and evaluates how effectively anonymization mitigates bias. Notably, Llama 3.1 405B exhibited the lowest overall bias among the models tested. The paper also introduces a comparative method: analyzing anonymized versus non-anonymized data to reveal inherent biases. The method is not specific to HR applications and may offer a generalizable approach to bias assessment in LLMs.
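The anonymized-versus-non-anonymized comparison can be pictured as a simple tally of bias flags per category in each mode. The following is a minimal sketch, not the paper's pipeline: the record layout, the `mode` and `flags` field names, the function names, and the sample records are all hypothetical, and the flags are assumed to come from some external bias detector.

```python
from collections import Counter

def count_flags(reports, mode):
    """Tally bias flags per category for reports generated in one mode."""
    counts = Counter()
    for report in reports:
        if report["mode"] == mode:
            counts.update(report["flags"])
    return counts

def compare_modes(reports, categories=("gender", "race", "age")):
    """Return {category: (non_anonymized_count, anonymized_count)}."""
    raw = count_flags(reports, "non_anonymized")
    anon = count_flags(reports, "anonymized")
    # Counter returns 0 for categories that were never flagged.
    return {c: (raw[c], anon[c]) for c in categories}

# Hypothetical toy records, invented purely for illustration.
sample_reports = [
    {"mode": "non_anonymized", "flags": ["gender", "age"]},
    {"mode": "non_anonymized", "flags": ["gender"]},
    {"mode": "anonymized", "flags": ["age"]},
    {"mode": "anonymized", "flags": []},
]

comparison = compare_modes(sample_reports)
for category, (raw_count, anon_count) in comparison.items():
    print(f"{category}: non-anonymized={raw_count}, anonymized={anon_count}")
```

A category whose count drops sharply once identifying details are removed suggests that the model's output was sensitive to those details, which is the signal the comparative method looks for.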

Strong Numerical Results

A key numerical result from the Claude bias detector is a significant reduction in bias scores for anonymized data: a 27.857% decrease in overall bias compared to non-anonymized data. In contrast, open-source models from Hugging Face showed minimal difference between the two modes, highlighting the Claude detector's advantage in identifying specific bias types. The detailed analysis shows that gender bias, prevalent across all models, is substantially reduced by anonymization, particularly in Sonnet, where gender bias fell from 206 to 28.
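To put the Sonnet gender-bias figures on the same footing as the overall percentage, the relative decrease from 206 to 28 can be worked out directly. This sketch assumes the conventional formula (before - after) / before; the summary does not state how the 27.857% figure was computed, and `relative_reduction` is a helper name introduced here for illustration only.

```python
def relative_reduction(before, after):
    """Percentage decrease from `before` to `after` (assumed formula)."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0

# Gender-bias counts for Sonnet as quoted in the text: 206 -> 28.
gender_reduction = relative_reduction(206, 28)
print(f"{gender_reduction:.1f}%")  # prints 86.4%
```

Under that assumption, the gender-bias drop in Sonnet is roughly 86%, considerably larger than the 27.857% overall decrease, which is consistent with the observation that anonymization helps some bias categories more than others.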

Implications for AI-Driven Recruitment Processes

The findings underscore the need for careful model selection in AI-driven recruitment to foster fairness and inclusivity. Anonymization is a feasible strategy for reducing certain types of bias, though its efficacy varies across bias categories and models. These insights inform best practices for using AI tools and emphasize the need for human oversight and continuous monitoring of AI outputs for bias.

Limitations and Future Directions

The paper's limitations include a relatively small sample size and a narrow focus on specific job sectors, potentially restricting generalizability. Future research could expand to additional sectors, explore new LLMs, and refine anonymization techniques. Longitudinal studies might further reveal the efficacy of bias mitigation strategies over time. Additionally, integrating cognitive bias analysis could enrich understanding of how biases manifest in AI-generated content.

Conclusion

This paper offers critical insights into the biases inherent in LLMs and their implications for AI use in recruitment. While anonymization shows promise in reducing specific biases, the choice of LLM remains pivotal. The paper highlights the need for a balanced approach that combines automated and manual methods to ensure fair and unbiased outcomes. Future research should continue to refine these processes, contributing to the development of equitable AI systems across various applications.