Enhancing Long Context Performance in LLMs Through Inner Loop Query Mechanism

The paper "Enhancing Long Context Performance in LLMs Through Inner Loop Query Mechanism" addresses a known weakness of large language models (LLMs): their difficulty handling very long input contexts. The authors introduce Inner Loop Memory Augmented Tree Retrieval (ILM-TR), a method that queries a retriever repeatedly in an inner loop rather than only once. ILM-TR is a Retrieval-Augmented Generation (RAG) approach, and its goal is to improve answer accuracy by integrating information gathered across several retrieval steps.

Background and Motivation

LLMs such as GPT have shown strong performance across many NLP tasks. However, the cost of self-attention grows quadratically with input length, which makes long inputs expensive to process and has historically restricted effective context windows to a few thousand tokens. A common workaround is to split long text into chunks and retrieve those relevant to a query, but such methods typically perform a single, direct retrieval step and do not reason over what has already been found.
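
For reference, the quadratic term comes from standard scaled dot-product attention, in which every token attends to every other token. For a sequence of $n$ tokens with head dimension $d$:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^\top}{\sqrt{d}}\right)V,
\qquad Q, K, V \in \mathbb{R}^{n \times d},\quad QK^\top \in \mathbb{R}^{n \times n}
$$

Computing the $n \times n$ score matrix costs $O(n^2 d)$ per head and per layer. At $n = 4096$ the matrix has $4096^2 = 16{,}777{,}216$ entries; doubling to $n = 8192$ gives $67{,}108{,}864$, four times as many. This is the generic Transformer formulation, shown only to illustrate the scaling; it is not specific to ILM-TR.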

ILM-TR aims to close this gap. Instead of retrieving only with the initial query, it conditions each subsequent retrieval on the findings accumulated so far. This iterative process is intended to help the LLM integrate information scattered across long contexts, and the stored intermediate findings act like a working memory that also makes the model's reasoning easier to inspect.

Methodology

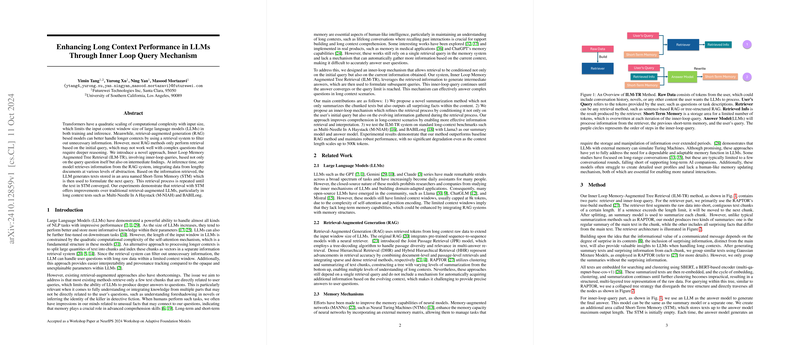

ILM-TR combines a retriever with an inner-loop query system. The retriever builds a tree following RAPTOR's approach: raw text is split into manageable chunks, which are then recursively summarized into higher-level nodes. Unlike earlier summarization schemes, the summarization model outputs both a standard summary and any surprising information in the text. The surprising information is kept to improve retrieval over long texts, where a plain summary may drop unusual details.
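
The tree-building step can be sketched as follows. This is a minimal illustration under several assumptions: chunks are fixed-size word windows, adjacent nodes are grouped in fixed-size batches (RAPTOR itself clusters nodes by embedding similarity, which is omitted here for brevity), and `summarize` is a hypothetical callable standing in for the LLM that returns a `(summary, surprising_info)` pair. The names `Node`, `build_tree`, and `toy_summarize` are illustrative and do not come from the paper.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

@dataclass
class Node:
    text: str                      # chunk text (leaf) or summary (internal node)
    surprising: str = ""           # "surprising information" kept alongside the summary
    level: int = 0                 # 0 = leaf chunk, higher = more abstract summary
    children: List["Node"] = field(default_factory=list)

# Hypothetical LLM interface (assumption): text -> (summary, surprising_info)
Summarizer = Callable[[str], Tuple[str, str]]

def chunk_text(text: str, max_words: int = 50) -> List[str]:
    """Split raw text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def build_tree(text: str, summarize: Summarizer,
               max_words: int = 50, group_size: int = 3) -> List[Node]:
    """Build a summary tree bottom-up and return all nodes (leaves first, root last)."""
    if group_size < 2:
        raise ValueError("group_size must be at least 2 so each level shrinks")
    level_nodes = [Node(text=c) for c in chunk_text(text, max_words)]
    all_nodes = list(level_nodes)
    level = 0
    while len(level_nodes) > 1:
        level += 1
        parents = []
        # Simplification: group adjacent nodes instead of clustering embeddings as RAPTOR does.
        for i in range(0, len(level_nodes), group_size):
            group = level_nodes[i:i + group_size]
            summary, surprising = summarize("\n".join(n.text for n in group))
            parents.append(Node(summary, surprising, level, group))
        all_nodes.extend(parents)
        level_nodes = parents
    return all_nodes

def toy_summarize(text: str) -> Tuple[str, str]:
    """Stand-in for an LLM: first 10 words as the summary, digit-bearing tokens as 'surprising'."""
    words = text.split()
    surprising = " ".join(w for w in words if any(ch.isdigit() for ch in w))
    return " ".join(words[:10]), surprising

nodes = build_tree(" ".join(f"w{i}" for i in range(200)), toy_summarize)
print(len(nodes), nodes[-1].level)  # prints: 7 2
```

With 200 words, `max_words=50`, and `group_size=3`, the sketch produces 4 leaf chunks, 2 level-1 summaries, and 1 root. Returning every node rather than only the root is in the spirit of RAPTOR's collapsed-tree retrieval, where leaves and summaries can both be matched against a query.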

During inner-loop querying, ILM-TR repeatedly refines its queries based on the retrieved data and on interim results stored in a Short-Term Memory (STM). At each iteration the LLM generates an answer from the user's query and the current STM, and the loop continues until the answer converges or a maximum number of queries is reached.
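
The loop can be sketched as below, purely as an illustration. Several pieces are assumptions rather than details taken from the paper: retrieval is a crude word-overlap ranking over plain passages instead of the tree retriever, the answer model is a hypothetical `answer_fn` that returns an answer, new STM notes, and a refined follow-up query, and "convergence" is taken to mean the same answer appearing in two consecutive iterations. `toy_answer` is a hard-coded stand-in for an LLM so the example runs offline.

```python
import re
from typing import Callable, List, Optional, Set, Tuple

def tokens(s: str) -> Set[str]:
    """Lower-cased word set used for a crude lexical similarity."""
    return set(re.findall(r"\w+", s.lower()))

def retrieve(query: str, passages: List[str], k: int = 1) -> List[str]:
    """Return the k passages with the largest word overlap with the query
    (a stand-in for the tree retriever's embedding search)."""
    q = tokens(query)
    return sorted(passages, key=lambda p: len(q & tokens(p)), reverse=True)[:k]

# Hypothetical answer-model interface (assumption):
# (question, stm, retrieved context) -> (answer, new STM notes, follow-up query)
AnswerFn = Callable[[str, List[str], List[str]], Tuple[str, List[str], str]]

def inner_loop_query(question: str, passages: List[str], answer_fn: AnswerFn,
                     k: int = 1, max_iters: int = 5) -> Tuple[Optional[str], List[str], int]:
    stm: List[str] = []            # short-term memory of interim findings
    query = question               # the first retrieval uses the user's question
    prev: Optional[str] = None
    for step in range(1, max_iters + 1):
        context = retrieve(query, passages, k)
        answer, notes, query = answer_fn(question, stm, context)  # query is refined here
        for note in notes:
            if note not in stm:
                stm.append(note)
        if answer == prev:         # assumed convergence rule: same answer twice in a row
            return answer, stm, step
        prev = answer
    return prev, stm, max_iters    # query limit reached without convergence

def toy_answer(question: str, stm: List[str], context: List[str]) -> Tuple[str, List[str], str]:
    """Rule-based stand-in for the LLM answer model (illustration only)."""
    text = " ".join(context + stm).lower()
    if "attic" in text:
        return "attic", ["the red box is in the attic"], "where is the attic"
    if "red box" in text:
        return "red box", ["the key is in the red box"], "where is the red box stored"
    return "unknown", [], question

passages = ["Alice keeps the key in the red box.", "The red box is stored in the attic.",
            "Bob likes tea.", "The weather was mild."]
print(inner_loop_query("Where does Alice keep the key?", passages, toy_answer))
```

On this toy input the first retrieval finds only that the key is in the red box; the refined query then surfaces the attic passage, and the loop stops at step 3 when the answer repeats. A single top-1 retrieval with the original question would have missed the second hop, which is the kind of gap the inner loop is meant to close.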

Experimental Results

To evaluate ILM-TR, the authors tested it on established benchmarks including Multi-Needle In A Haystack (M-NIAH) and BABILong. ILM-TR significantly outperformed the baseline methods, including the original RAPTOR. It also scaled well, handling contexts of up to 500k tokens without significant performance degradation. These results indicate that ILM-TR is effective at retrieving complex, interrelated information spread across long contexts.
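
For readers unfamiliar with needle-in-a-haystack evaluations, the general idea is to hide several short facts ("needles") at random positions in long filler text and check how many of them the model's answer recovers. The sketch below illustrates only that idea. The filler sentence, needle texts, and substring-based scoring are assumptions, the function names are hypothetical, and it does not reproduce the M-NIAH or BABILong configurations used in the paper.

```python
import random
from typing import List

def build_haystack(needles: List[str], filler: str, n_filler: int, seed: int = 0) -> str:
    """Insert each needle sentence at a random position among n_filler filler sentences."""
    rng = random.Random(seed)               # fixed seed for reproducible positions
    sentences = [filler] * n_filler
    for needle in needles:
        # randint is inclusive, so a needle may also land at the very end
        sentences.insert(rng.randint(0, len(sentences)), needle)
    return " ".join(sentences)

def needle_recall(answer: str, expected: List[str]) -> float:
    """Fraction of expected facts that appear (case-insensitively) in the model's answer."""
    if not expected:
        return 0.0
    found = sum(1 for fact in expected if fact.lower() in answer.lower())
    return found / len(expected)

needles = ["The secret city is Paris.", "The secret code is 42.", "The secret color is teal."]
haystack = build_haystack(needles, "The grass is green.", n_filler=1000)
# A real test would send `haystack` plus a question to the model; here the answer is hard-coded.
print(needle_recall("The city is Paris and the code is 42.", ["Paris", "42", "teal"]))
```

Scoring by the fraction of needles recovered, rather than pass/fail, shows partial retrieval, which matters when several facts must be combined.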

Implications and Future Work

ILM-TR has implications for both practice and theory. Practically, it could improve applications that require understanding lengthy documents, such as legal texts or large bodies of scientific literature. Theoretically, it contributes to memory-augmented systems loosely modeled on human-like cognitive processing, which may inform future AI systems that handle complex, sustained narratives.

Despite these promising results, ILM-TR has limitations: repeated query iterations increase computation time, and the method requires a large model that can follow instructions accurately. Future work could address these issues, for example by fine-tuning the answer model on intermediate STM outputs to strengthen active search in RAG systems.

In conclusion, Inner Loop Memory Augmented Tree Retrieval is a significant step toward overcoming the limitations of traditional LLMs on long-context tasks, offering a framework for more intelligent and context-aware AI systems.