Overview of Enabling LLMs to Master Tools via Self-Driven Interactions

The paper introduces DRAFT, a framework for improving how LLMs use external tools. Despite advances in LLMs, their ability to use tools for problem solving remains limited, in part because tool documentation is written for human readers rather than for models. DRAFT refines tool documentation through an iterative, feedback-driven process, so that the documentation better matches both how LLMs interpret it and how the tools actually behave.

Methodological Approach

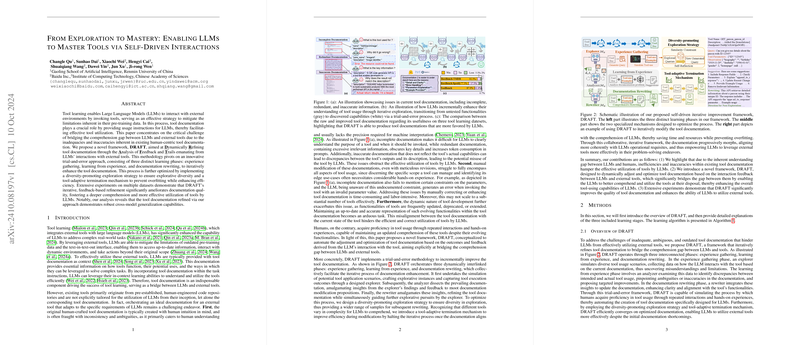

DRAFT is structured into three phases: (1) Experience Gathering, (2) Learning from Experience, and (3) Documentation Rewriting. Together they form a trial-and-error loop in which each round of interaction with a tool informs the next revision of its documentation.

- Experience Gathering:
  - LLMs simulate diverse scenarios through an Explorer, generating exploratory instances that model potential tool use cases.
  - A diversity-promoting strategy ensures varied exploration, avoiding redundancy and capturing a wide array of tool capabilities.

- Learning from Experience:
  - An Analyzer evaluates the data gathered, comparing intended and actual tool usage.
  - Through this comparison, it provides revision suggestions, focusing on consistency, coverage, and conciseness.

- Documentation Rewriting:
  - The Rewriter integrates the Analyzer’s insights, updating tool documentation.
  - To prevent overfitting, a tool-adaptive termination mechanism halts the iterative process when convergence is detected.

Each pass through this loop refines the documentation further, bringing the LLM’s understanding of a tool closer to the tool’s actual behavior.
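The three phases and the stopping rule can be sketched as a single loop. This is a minimal illustration, not the paper's implementation: the function name `refine_documentation`, the callables `explore`, `call_tool`, `analyze`, and `rewrite`, and all threshold values are hypothetical, and a character-level similarity from Python's standard library stands in for whatever similarity measure the authors use.

```python
from difflib import SequenceMatcher
from typing import Callable, List, Tuple


def similarity(a: str, b: str) -> float:
    """Character-level similarity in [0, 1]; a stand-in for a real text-similarity score."""
    return SequenceMatcher(None, a, b).ratio()


def refine_documentation(
    doc: str,
    explore: Callable[[str, List[str]], str],   # Explorer: (doc, past queries) -> new query
    call_tool: Callable[[str], str],            # runs the tool on a query
    analyze: Callable[[str, str, str], str],    # Analyzer: (doc, query, result) -> suggestion
    rewrite: Callable[[str, str], str],         # Rewriter: (doc, suggestion) -> revised doc
    max_rounds: int = 5,                        # assumed budget
    diversity_threshold: float = 0.9,           # assumed value
    convergence_threshold: float = 0.95,        # assumed value
) -> Tuple[str, List[str]]:
    history: List[str] = []  # queries that were actually explored
    for _ in range(max_rounds):
        # Phase 1: experience gathering, with a simple diversity check.
        query = explore(doc, history)
        if any(similarity(query, past) >= diversity_threshold for past in history):
            continue  # near-duplicate of an earlier query, so skip this round
        history.append(query)
        result = call_tool(query)

        # Phase 2: learning from experience.
        suggestion = analyze(doc, query, result)

        # Phase 3: documentation rewriting.
        new_doc = rewrite(doc, suggestion)

        # Termination: stop once a revision barely changes the documentation.
        converged = similarity(doc, new_doc) >= convergence_threshold
        doc = new_doc
        if converged:
            break
    return doc, history
```

In practice the four callables would wrap LLM prompts and a real tool call; here they are left as parameters so the control flow can be read, and tested with stubs, on its own.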

Experimental Evaluation

Experiments were conducted on multiple datasets, including ToolBench and RestBench, using metrics such as Correct Path Rate (CP%) and Win Rate (Win%). The reported results show that documentation revised by DRAFT significantly outperforms the baseline documentation, enabling LLMs such as GPT-4o to use tools more effectively. The revised documentation also generalizes across models, which suggests that its benefits do not depend on a particular LLM architecture.
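The summary names the two metrics without defining them, so the following sketch rests on assumptions: CP% is taken to be the percentage of instances whose predicted tool-call path contains the reference path as an ordered subsequence, and Win% the percentage of pairwise judgments labelled a win. The function names and the `"win"` label are hypothetical.

```python
from typing import Sequence


def contains_subsequence(path: Sequence[str], gold: Sequence[str]) -> bool:
    """True if every step of `gold` appears in `path` in the same order."""
    remaining = iter(path)
    return all(step in remaining for step in gold)


def correct_path_rate(
    predicted: Sequence[Sequence[str]], reference: Sequence[Sequence[str]]
) -> float:
    """Assumed CP%: share of instances whose predicted path covers the reference path."""
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not predicted:
        return 0.0
    hits = sum(contains_subsequence(p, g) for p, g in zip(predicted, reference))
    return 100.0 * hits / len(predicted)


def win_rate(judgments: Sequence[str]) -> float:
    """Assumed Win%: share of pairwise judgments labelled 'win' (ties count as non-wins)."""
    if not judgments:
        return 0.0
    return 100.0 * sum(j == "win" for j in judgments) / len(judgments)
```

For example, under these assumptions a predicted path `["search", "get_details", "rate"]` counts as correct against the reference `["search", "rate"]`, while `["search"]` alone does not.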

Implications and Future Directions

DRAFT underscores the value of automating the refinement of tool documentation, which in turn makes tools easier for LLMs to understand and apply. The cross-model generalization observed in the experiments suggests that the framework may scale across LLM architectures, broadening its applicability.

The work also points toward a future in which LLMs not only interpret tool capabilities but also manage and update them autonomously. In that sense, DRAFT is a step towards self-improving systems that adapt as tool functionality changes.

In conclusion, tool use remains challenging for LLMs, and DRAFT offers a structured way to address the problem: iteratively revising documentation until the model's understanding matches the tool's behavior. Beyond its practical benefit, the approach provides a foundation for future work on interactions between LLMs and tools.