Essay on "TidalDecode: Fast and Accurate LLM Decoding with Position Persistent Sparse Attention"

The paper "TidalDecode: Fast and Accurate LLM Decoding with Position Persistent Sparse Attention" addresses a critical bottleneck in the efficient deployment of LLMs: the memory and computational cost of the decoding phase. It focuses on Transformer architectures, whose key-value (KV) cache grows with sequence length and therefore demands substantial memory, especially for long-context tasks. The authors propose TidalDecode, an algorithm that uses Position Persistent Sparse Attention (PPSA) to reduce these costs while closely matching the quality of full attention.
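To give a sense of scale for the KV cache growth mentioned above, the following is a back-of-the-envelope sketch rather than a figure from the paper. The default shape (32 layers, 8 KV heads, head dimension 128, 16-bit values) is an assumption chosen to resemble an 8B-parameter model with grouped-query attention, and `kv_cache_bytes` is a hypothetical helper name.

```python
def kv_cache_bytes(num_tokens, num_layers=32, num_kv_heads=8,
                   head_dim=128, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence.

    All default shape parameters are illustrative assumptions.
    """
    # Factor 2: one key vector and one value vector per token, head, and layer.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value * num_tokens


per_token = kv_cache_bytes(1)              # cost of caching a single token
long_context = kv_cache_bytes(128 * 1024)  # cost of a 128K-token context

print(f"{per_token / 1024:.0f} KiB per token")
print(f"{long_context / 1024**3:.0f} GiB for 128K tokens")
```

Under these assumptions the cache grows linearly with context length, which is why reading the whole cache at every decoding step becomes the dominant cost for long sequences.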

Sparse Attention Mechanisms and Their Limitations

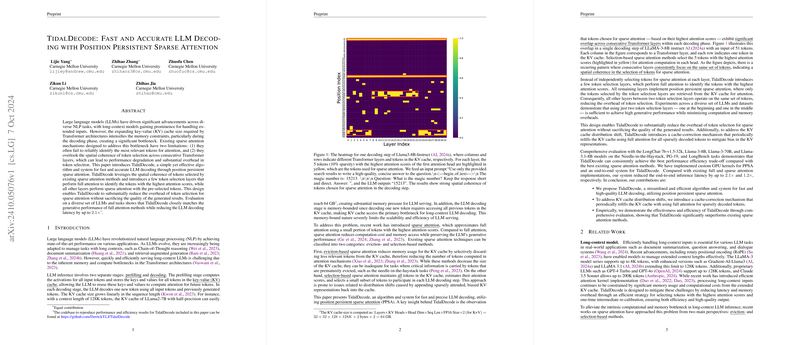

Current sparse attention strategies often miss critical tokens for attention and neglect the spatial coherence of token selections across layers, leading to inefficiencies and potential performance degradation. These strategies are either eviction-based, selectively discarding tokens to decrease memory usage, or selection-based, focusing on estimating and choosing tokens based on attention scores. Eviction-based methods can inadvertently remove crucial tokens, while selection-based approaches might introduce computational complexity without guaranteed optimal token selection.
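The difference between the two families can be illustrated with a toy example. The scores below are made up for illustration, and the eviction rule (keep the top-scoring tokens of the first step) is a simplified stand-in, not any specific published method.

```python
import numpy as np


def top_k_indices(scores, k):
    """Indices of the k largest scores, returned in ascending position order."""
    return np.sort(np.argsort(scores)[-k:])


# Hypothetical attention scores of two successive queries over 6 cached tokens.
step1 = np.array([0.05, 0.40, 0.05, 0.30, 0.15, 0.05])
step2 = np.array([0.50, 0.05, 0.05, 0.10, 0.25, 0.05])
budget = 3

# Eviction-based: tokens outside the step-1 top-k are discarded for good,
# so step 2 can only choose among the survivors.
kept = top_k_indices(step1, budget)
evict_step2 = kept[top_k_indices(step2[kept], budget)]

# Selection-based: the full cache is retained and re-ranked for every query.
select_step2 = top_k_indices(step2, budget)

print("eviction sees: ", evict_step2, "mass", step2[evict_step2].sum())
print("selection sees:", select_step2, "mass", step2[select_step2].sum())
```

In this toy case token 0 matters only at the second step. The eviction policy has already dropped it, while the selection policy recovers it at the price of ranking every cached token again.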

TidalDecode: Leveraging Position Persistence

TidalDecode distinguishes itself by recognizing and exploiting the overlap of tokens with high attention scores across consecutive Transformer layers. Rather than selecting tokens independently at each layer, which adds overhead at every layer, TidalDecode designates a small number of token selection layers that perform full attention to identify the highest-scoring tokens. All other layers perform sparse attention over those same token positions, which removes the redundant per-layer selection work.
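The idea of reusing selected positions across layers can be sketched in a few lines of NumPy. This is a minimal single-head illustration under simplifying assumptions, not the authors' implementation: per-layer queries are supplied up front (in a real model each layer's query depends on the previous layer's output), and the names `attend`, `ppsa_decode_step`, and `selection_layers` are hypothetical.

```python
import numpy as np


def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()


def attend(q, K, V, idx=None):
    """Single-head attention for one query; idx restricts the cached tokens used."""
    if idx is not None:
        K, V = K[idx], V[idx]
    weights = softmax(K @ q / np.sqrt(q.shape[0]))
    return weights @ V, weights


def ppsa_decode_step(queries, keys, values, k, selection_layers):
    """Toy decode step: full attention at selection layers, sparse elsewhere.

    queries: (L, d) per-layer queries; keys, values: (L, n, d) per-layer KV caches.
    """
    outputs, selected = [], None
    for layer, (q, K, V) in enumerate(zip(queries, keys, values)):
        if layer in selection_layers or selected is None:
            out, weights = attend(q, K, V)                # full attention
            selected = np.sort(np.argsort(weights)[-k:])  # (re-)select top-k positions
        else:
            out, _ = attend(q, K, V, selected)            # reuse the same positions
        outputs.append(out)
    return np.stack(outputs), selected


# Usage with random data: select at the first layer, re-select at a middle layer.
rng = np.random.default_rng(0)
L, n, d, k = 8, 64, 16, 8
queries = rng.standard_normal((L, d))
keys = rng.standard_normal((L, n, d))
values = rng.standard_normal((L, n, d))
out, selected = ppsa_decode_step(queries, keys, values, k, selection_layers={0, L // 2})
print(out.shape, selected)
```

In this sketch only the selection layers read all `n` cached tokens; every other layer reads `k` of them. That asymmetry is where the savings would come from, provided the important positions really do persist between selection layers.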

Implementation and Evaluation

TidalDecode is implemented with custom GPU kernels, which is how it achieves substantial reductions in decoding latency. Experiments on models such as LLaMA-3-8B and LLaMA-3-70B show latency reduced by up to 2.1x compared with full attention.

Empirical evaluations further show that TidalDecode maintains or surpasses baseline performance on tasks such as Needle-in-the-Haystack and language modeling on the PG-19 dataset. The algorithm selects the tokens with the highest attention scores once at the beginning and once more at a middle layer, a step termed token re-selection. This keeps accuracy high while limiting the cost of selection to two layers.

Implications and Future Directions

The development of TidalDecode signifies a pivotal advancement in the efficient processing of long-context NLP tasks. By circumventing costly computational demands traditionally associated with full attention mechanisms, TidalDecode opens pathways for deploying LLMs in more resource-constrained environments without forfeiting accuracy or performance.

Future work may explore further refinements in sparse attention methods to enhance token selection precision or adapt the PPSA framework to other model architectures beyond Transformers. Additionally, investigating the dynamics of token re-selection could yield insights into even more efficient memory usage strategies, essential for scaling LLMs to handle increasingly complex and longer sequences.

In conclusion, TidalDecode represents a substantive contribution to the field of NLP, providing a pragmatic solution to a prevalent challenge, and setting a foundation for further innovation in sparse attention mechanisms.