KV-Compress: Paged KV-Cache Compression with Variable Compression Rates per Attention Head

Isaac Rehg from Cloudflare introduces a novel method for Key-Value (KV) cache compression designed for LLMs with extended context lengths. The method, termed KV-Compress, focuses on leveraging variable compression rates per attention head within a PagedAttention framework to alleviate memory constraints while maintaining high-performance standards during inference.

Introduction

As context lengths in LLMs have exponentially grown, efficient memory management for KV caches has become a significant challenge. The memory requirements for maintaining the KV cache during token generation increase linearly with context length, restricting the potential for concurrent long-context inferences within a fixed memory budget. Traditional solutions employing uniform KV eviction rates across attention heads introduce substantial fragmentation, thereby failing to achieve theoretical memory savings in practice.

Methodology

KV-Compress seeks to address these inefficiencies by adopting a variable eviction rate approach within a PagedAttention framework, effectively reducing the KV cache's memory footprint while maintaining high inference performance.

Key Innovations

- PagedAttention Framework Modification:

- The method extends the existing PagedAttention framework to handle fragmentation by referencing KV caches at both layer and head levels, making variable-rate evictions viable.

- It leverages an on-device block allocation system to parallelize block management, ensuring efficient scheduling and prefetching of cache blocks.

- Query Group Compression:

- Adapts existing methods to manage grouped-query-attention (GQA) models without repeating KVs, directly addressing redundancy.

- Metrics aggregation for eviction decisions is carried out within query groups, informing a more refined eviction strategy.

- Adaptive Eviction Metrics:

- Introduces squared attention aggregation over observed queries, proposing both full and limited observation window variants. The squared sum approach (L2) is shown to work better than the traditional sum (L1), thereby optimizing the eviction process.

- Sequential Eviction Strategy:

- Implements an algorithm to evict continuous blocks by organizing KV blocks such that the sum eviction metric over evicted blocks aligns with the optimal eviction schedule.

Experimental Results

The results demonstrate substantial improvements over existing methods.

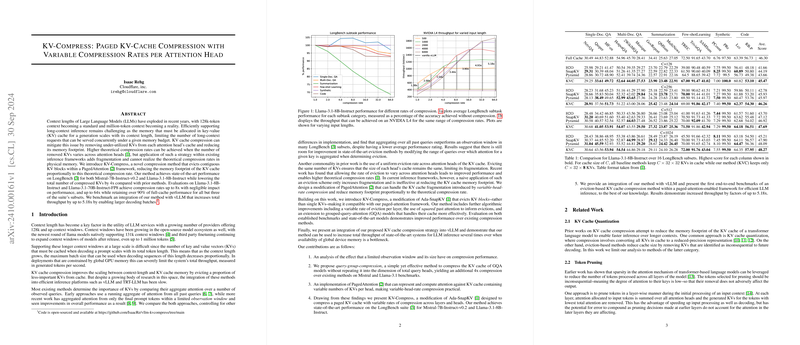

- Benchmark Performance:

- KV-Compress leads in most LongBench subsets for Mistral-7B-Instruct-v0.2 and Llama-3.1-8B-Instruct models.

- With max-cache-size configurations set at , $256$, $512$, and $1024$, it achieves state-of-the-art performance metrics while using significantly fewer KVs (4x reduction compared to baselines owing to non-repetition in GQA models).

- Throughput Improvements:

- Deployed on single-instance configurations, Llama-3.1-8B (NVIDIA L4) and Llama-3.1-70B (NVIDIA H100), KV-Compress increases throughput by up to 5.18x in memory-constrained environments.

- Reaches maximum decoding batch sizes significantly larger than those possible with vanilla vLLM, enabling better parallel processing and higher throughput.

- Continual Compression:

- Evaluates the impact of continual compression during decoding steps. Results show high performance retention (≥90% of full-cache performance) for most tasks even at aggressive compression rates (32x to 64x).

Implications and Future Work

The implications of KV-Compress are multifold. Practically, this method enables more efficient memory usage, allowing LLMs to handle long-context inferences with increased batch sizes and throughput, which is crucial for large-scale deployment scenarios constrained by GPU memory capacity. Theoretically, the approach underscores the importance of fine-grained cache management, driving future research towards efficient linearized memory frameworks and adaptive attention mechanisms.

Potential avenues for future work include exploring dynamic cache management strategies that adapt not only to inference context length but also to varying computational workloads. Additionally, further refinement of metric aggregation strategies could yield even higher compression rates with minimal performance loss.

Conclusion

KV-Compress presents a significant advancement in the area of KV cache management for LLMs, combining innovative eviction strategies with a refined adaption of the PagedAttention framework. The method delivers top-tier performance on benchmark suites while significantly improving inference throughput, thereby setting a new standard for memory-efficient LLM deployment.