Federated LLMs: Current Progress and Future Directions

In machine learning and artificial intelligence, large language models (LLMs) have driven significant change, excelling at generating human-like text and advancing areas such as natural language processing and code generation. However, centralized training of LLMs poses major challenges, primarily around data privacy and computational feasibility. These challenges are particularly pressing in sectors that handle sensitive data, such as healthcare, finance, and legal services. Federated learning (FL) offers a potential solution: it enables decentralized model training in which data remains local and only model updates are exchanged. The surveyed paper examines federated learning for LLMs (FedLLM), summarizing recent advances, identifying prevalent challenges, and proposing future research directions.

Introduction

Combining LLMs with FL introduces complexities, including model convergence issues caused by heterogeneous data and increased communication costs. The survey provides an overview of FedLLM by reviewing recent advances in federated fine-tuning and prompt learning. The authors aim to guide research by evaluating existing literature and identifying gaps that could lead to new solutions.

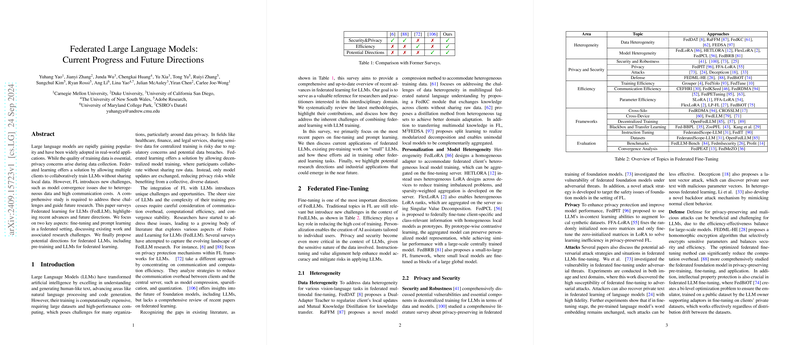

Federated Fine-Tuning

Fine-tuning LLMs in a federated setting requires addressing traditional FL concerns such as efficiency, personalization, and privacy, but at a much larger scale because of the size of LLMs.
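To make the exchange of model updates concrete, here is a minimal sketch of one server-side aggregation round. It assumes the common weighted-averaging rule (FedAvg), which the summary does not name explicitly; the function `fedavg`, the parameter name `adapter.w`, and the toy numbers are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Params = Dict[str, List[float]]  # parameter name -> flat list of values


def fedavg(client_updates: List[Tuple[Params, int]]) -> Params:
    """Average client parameters, weighting each client by its sample count."""
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("at least one client must report a positive sample count")
    averaged: Params = {}
    for name in client_updates[0][0]:
        acc = [0.0] * len(client_updates[0][0][name])
        for params, n in client_updates:
            weight = n / total
            for i, value in enumerate(params[name]):
                acc[i] += weight * value
        averaged[name] = acc
    return averaged


# Two hypothetical clients share only trainable parameters, never raw data.
clients = [
    ({"adapter.w": [1.0, 2.0]}, 100),
    ({"adapter.w": [3.0, 6.0]}, 300),
]
global_params = fedavg(clients)
print(global_params)  # {'adapter.w': [2.5, 5.0]}
```

In an LLM setting the exchanged parameters would typically be a small trainable subset (for example adapters or prompts) rather than the full model, which is what keeps each round affordable.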

Heterogeneity

Data and model heterogeneity present significant challenges. Methods such as FedDAT and FedKC address data heterogeneity through knowledge distillation and federated clustering mechanisms. Methods such as FedLoRA and FlexLoRA address model heterogeneity by enabling personalized adaptations that keep local and global model training aligned.
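As an illustration of the knowledge distillation idea mentioned above, the sketch below computes the generic temperature-softened KL loss between a teacher and a student. This is the textbook formulation, not the specific objective of FedDAT or any other cited method; the function names and the example logits are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with temperature scaling."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: List[float],
    student_logits: List[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return (temperature ** 2) * kl


# Hypothetical logits: a global "teacher" and a local "student" that disagree.
loss_same = distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
loss_diff = distillation_loss([1.0, 2.0, 3.0], [3.0, 2.0, 1.0])
```

The loss is zero when the two models agree and grows as their predictions diverge, which is why it can serve as a signal for pulling a locally adapted model toward shared knowledge when client data differ.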

Privacy and Security

Privacy concerns in federated LLMs are amplified by the sensitive nature of the data. Techniques such as FedPIT enhance privacy by drawing on the in-context learning abilities of LLMs, while attack strategies such as those examined by Wu et al. expose vulnerabilities in federated training and motivate the development of robust defense mechanisms.

Efficiency

Efficiency remains critical in federated LLMs because of the high costs of training and communication. Approaches such as Dataset Grouper and model compression techniques aim to optimize both training and communication efficiency so that deployment remains scalable and practical.
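One simple form of compression for communication is to quantize an update before sending it. The sketch below uses uniform 8-bit quantization as an assumed example; the summary does not specify which compression schemes the surveyed works use, and the helper names are hypothetical.

```python
from typing import List, Tuple


def quantize_update(values: List[float], bits: int = 8) -> Tuple[List[int], float, float]:
    """Map a non-empty list of floats to integer codes in [0, 2^bits - 1].

    Returns the codes plus the offset and step size needed to reconstruct them.
    """
    levels = (1 << bits) - 1
    lo, hi = min(values), max(values)
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, lo, scale


def dequantize_update(codes: List[int], lo: float, scale: float) -> List[float]:
    """Reconstruct approximate floats from integer codes."""
    return [lo + c * scale for c in codes]


# A hypothetical client update, sent as one byte per value plus two floats.
update = [-0.5, 0.0, 0.25, 0.5]
codes, offset, step = quantize_update(update)
restored = dequantize_update(codes, offset, step)
max_error = max(abs(a - b) for a, b in zip(update, restored))
```

The reconstruction error per value is bounded by half a quantization step, so the trade-off between payload size and fidelity is controlled by `bits`.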

Frameworks

Innovative frameworks for federated fine-tuning span cross-silo and cross-device settings, with methods like FedRDMA enhancing communication protocols, and systems like FwdLLM addressing computational constraints on mobile devices through backpropagation-free protocols.
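To illustrate what "backpropagation-free" can mean, the sketch below estimates a gradient using only forward evaluations of the loss along random directions. This is a generic perturbation-based estimator offered as an assumption for illustration, not FwdLLM's actual protocol; `forward_gradient_estimate` and `quadratic_loss` are hypothetical names.

```python
import random
from typing import Callable, List


def forward_gradient_estimate(
    loss_fn: Callable[[List[float]], float],
    weights: List[float],
    num_directions: int = 64,
    eps: float = 1e-4,
    seed: int = 0,
) -> List[float]:
    """Estimate the gradient from forward passes only (no backpropagation)."""
    rng = random.Random(seed)
    base = loss_fn(weights)
    estimate = [0.0] * len(weights)
    for _ in range(num_directions):
        # Sample a random direction and measure the loss change along it.
        direction = [rng.gauss(0.0, 1.0) for _ in weights]
        perturbed = [w + eps * d for w, d in zip(weights, direction)]
        slope = (loss_fn(perturbed) - base) / eps
        # The slope times the direction is an unbiased gradient estimate
        # (up to finite-difference error); average over directions.
        for i, d in enumerate(direction):
            estimate[i] += slope * d / num_directions
    return estimate


def quadratic_loss(w: List[float]) -> float:
    """Toy loss with known gradient 2 * w."""
    return sum(x * x for x in w)


estimate = forward_gradient_estimate(quadratic_loss, [1.0, -2.0], num_directions=2000)
```

Because no activations need to be stored for a backward pass, estimators of this kind trade extra forward evaluations for lower memory use, which is the kind of constraint that matters on mobile devices.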

Prompt Learning

Prompt learning for LLMs offers a route to reducing communication overhead and computational demands by fine-tuning soft prompts rather than entire models.
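A rough calculation shows why exchanging soft prompts is cheap compared with exchanging a full model. All sizes below (a 7-billion-parameter model, hidden size 4096, 20 virtual prompt tokens, 2 bytes per parameter) are hypothetical values chosen for illustration and do not come from the survey.

```python
def payload_bytes(num_params: int, bytes_per_param: int = 2) -> int:
    """Bytes sent per round if every listed parameter is transmitted."""
    return num_params * bytes_per_param


# Hypothetical model and prompt sizes (assumptions, not survey figures).
full_model_params = 7_000_000_000
hidden_size = 4096
prompt_tokens = 20

# A soft prompt is one trainable embedding vector per virtual token.
soft_prompt_params = prompt_tokens * hidden_size

full_payload = payload_bytes(full_model_params)
prompt_payload = payload_bytes(soft_prompt_params)
reduction_factor = full_payload / prompt_payload

print(f"full model:  {full_payload:,} bytes per round")
print(f"soft prompt: {prompt_payload:,} bytes per round")
print(f"reduction:   about {reduction_factor:,.0f}x")
```

Under these assumptions the per-round upload shrinks from gigabytes to a few hundred kilobytes, while the backbone model stays frozen on each client.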

Prompt Generation

PromptFL and similar frameworks focus on creating efficient and adaptable prompts that cater to heterogeneous data distributions. These methods show promise in enhancing privacy and performance in FL settings.

Few-shot Scenario

FeS introduces a framework to enable federated few-shot learning, making federated fine-tuning on resource-limited devices feasible through techniques like curriculum pacing and co-planning of model layer depth.

Personalization

Methods like FedLogic and Fed-DPT improve LLMs' personalization by optimizing prompts to reflect individual users' data distributions, thereby enhancing model relevance and performance.

Multi-domain

Frameworks such as FedAPT enable cross-domain collaborative learning by personalizing prompts for distinct clients while facilitating data-independent knowledge sharing.

Efficiency and Optimization

Emerging techniques in parameter-efficient learning and communication optimization demonstrate that FL can be integrated with prompt tuning and LoRA to significantly reduce computational overheads while maintaining robust model performance.
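The saving from LoRA can be made concrete with a short worked calculation. The dimensions used below ($d = k = 4096$, $r = 8$) are assumed for illustration and are not taken from the survey.

$$
W' = W + BA, \qquad W \in \mathbb{R}^{d \times k},\quad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$

$$
\frac{\text{trainable parameters (LoRA)}}{\text{trainable parameters (full)}} = \frac{r(d + k)}{dk} = \frac{8 \cdot (4096 + 4096)}{4096 \cdot 4096} = \frac{65{,}536}{16{,}777{,}216} = \frac{1}{256} \approx 0.39\%
$$

With $W$ frozen, only $B$ and $A$ need to be trained and communicated, so under these assumptions each adapted matrix contributes well under one percent of its original size to every round.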

Potential Directions

The survey concludes with a forward-looking view, suggesting potential areas for exploration:

- Real-World Deployment: Optimizing personalized AI agents for deployment on confidential data while ensuring robust, efficient model adaptation.

- Multimodality Models: Co-optimizing models handling diverse data modalities to reduce inefficiencies and enhance performance.

- Federated Pre-Training: Exploring efficient data exchange protocols and optimal model architectures to reduce the computational burden of pre-training LLMs.

- Federated Inference: Developing real-time, on-device inference techniques to minimize latency and computational overhead.

- LLMs for Federated Learning: Utilizing LLMs for synthetic FL data generation and advanced applications such as capacity-augmented FL and responsible, ethical model deployment.

Through this synthesis, the paper shows how federated learning can alleviate data privacy concerns while also improving the adaptability and scalability of LLMs, broadening their applicability to diverse real-world scenarios.