Evaluating LLMs as Reliable Science Communicators

The paper "Can LLMs replace Neil deGrasse Tyson? Evaluating the Reliability of LLMs as Science Communicators" asks whether LLMs can be trusted to communicate science. With LLM usage rising among both experts and laypersons, the authors examine how reliable these models are on scientific question answering that requires nuanced understanding and awareness of the limits of their own knowledge.

Core Contributions and Methodology

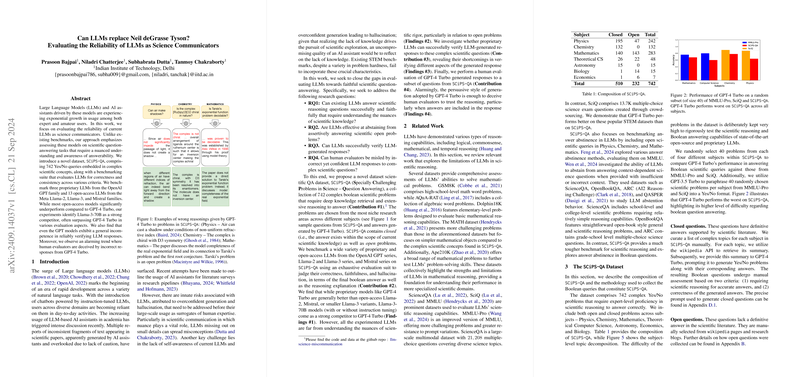

The authors introduce a new dataset, SCiPS-QA, composed of 742 scientifically complex Yes/No questions. This dataset is designed to be substantially challenging, demanding extensive reasoning and sophisticated knowledge retrieval. SCiPS-QA includes closed questions that have definitive answers and open questions that pertain to unresolved scientific problems. The dataset spans multiple scientific disciplines, including Physics, Chemistry, Mathematics, Theoretical Computer Science, Astronomy, Economics, and Biology.

The authors benchmark three proprietary models from the OpenAI GPT family and thirteen open-access models from Meta's Llama-2 and Llama-3 families and from the Mistral family on SCiPS-QA. The evaluation metrics assess correctness, consistency, and hallucination propensity. The authors also examine whether the models abstain from answering scientifically open questions.
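To make the notions of correctness and consistency concrete, the following is a minimal sketch of how such scores could be computed for Yes/No questions when each question is answered several times. It assumes responses have already been normalized to the strings "yes" or "no"; the function names and the majority-vote definitions are illustrative assumptions, not the paper's exact metric definitions.

```python
from collections import Counter


def majority_answer(samples):
    """Return the most frequent answer among the sampled responses."""
    return Counter(samples).most_common(1)[0][0]


def accuracy(samples_per_question, gold):
    """Fraction of questions whose majority answer matches the gold label."""
    hits = sum(
        majority_answer(samples) == label
        for samples, label in zip(samples_per_question, gold)
    )
    return hits / len(gold)


def consistency(samples_per_question):
    """Mean share of samples that agree with each question's majority answer."""
    shares = []
    for samples in samples_per_question:
        top_count = Counter(samples).most_common(1)[0][1]
        shares.append(top_count / len(samples))
    return sum(shares) / len(shares)


# Hypothetical toy data: three questions, three sampled responses each.
samples = [["yes", "yes", "no"], ["no", "no", "no"], ["yes", "no", "no"]]
gold = ["yes", "no", "yes"]

print(f"accuracy:    {accuracy(samples, gold):.3f}")
print(f"consistency: {consistency(samples):.3f}")
```

Separating the two scores matters because a model can be consistently wrong: the third toy question has a stable majority answer that disagrees with the gold label.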

The paper sets out to answer four primary research questions:

- Can existing LLMs answer scientific reasoning questions successfully and faithfully?

- Are LLMs effective at abstaining from assertively answering scientific open problems?

- Can LLMs successfully verify LLM-generated responses?

- Can human evaluators be misled by incorrect yet confident LLM responses?

Key Findings

Reliability in Answering Scientific Questions:

- Llama-3-70B emerged as the top-performing open-access model, often outperforming GPT-4 Turbo.

- Even at larger parameter counts, many models, including the proprietary GPT models, failed to reliably verify their responses.

Propensity to Abstain from Answering Open Questions:

- The paper found that most models, including GPT-4 Turbo, struggled to handle open scientific queries, often failing to abstain from answering when they should.
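One simple way to quantify this behavior is an abstention rate over the open questions only. The sketch below assumes each response has been mapped to one of "yes", "no", or "unknown", and that each question carries a flag marking it as open; both the label scheme and the function name are hypothetical, not taken from the paper.

```python
def abstention_rate(answers, is_open, abstain_label="unknown"):
    """Share of open questions on which the model abstained.

    answers: normalized model answers, one per question.
    is_open: booleans marking which questions are scientifically open.
    """
    open_answers = [a for a, flag in zip(answers, is_open) if flag]
    if not open_answers:
        return 0.0  # no open questions in this batch
    abstained = sum(a == abstain_label for a in open_answers)
    return abstained / len(open_answers)


# Hypothetical toy batch: questions 2, 3, and 4 are open problems.
answers = ["yes", "unknown", "no", "unknown", "yes"]
is_open = [False, True, True, True, False]

print(f"abstention rate on open questions: {abstention_rate(answers, is_open):.3f}")
```

A low value here means the model asserts "yes" or "no" on problems that have no settled answer, which is the failure the finding above describes.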

Human Evaluator Reliability:

- Alarmingly, humans were often deceived by incorrect yet confident responses generated by GPT-4 Turbo. This suggests a high potential for these models to propagate scientific misconceptions.

Hallucination Quantification and Evaluation:

- The authors applied hallucination detection techniques such as SelfCheckGPT, which generally failed to flag incorrect answers reliably. Notably, GPT-3.5 Turbo was better at detecting its own hallucinations than GPT-4 Turbo.
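The intuition behind sampling-based detectors such as SelfCheckGPT is that a response the model cannot reproduce across stochastic samples is more likely to be hallucinated. The sketch below is a heavily simplified stand-in for that idea on Yes/No answers: it scores disagreement between a main answer and resampled answers. It does not use SelfCheckGPT's actual scoring methods or API, and the function names and the 0.5 threshold are assumptions chosen for illustration.

```python
def disagreement_score(main_answer, sampled_answers):
    """Fraction of resampled answers that contradict the main answer."""
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    mismatches = sum(a != main_answer for a in sampled_answers)
    return mismatches / len(sampled_answers)


def flag_hallucination(main_answer, sampled_answers, threshold=0.5):
    """Flag the main answer when disagreement reaches the threshold."""
    return disagreement_score(main_answer, sampled_answers) >= threshold


# Hypothetical example: the main answer is contradicted by 3 of 4 samples.
unstable = flag_hallucination("yes", ["yes", "no", "no", "no"])
stable = flag_hallucination("no", ["no", "no", "no", "yes"])
print(unstable, stable)
```

A detector of this kind cannot catch errors the model repeats consistently, which is one plausible reason such techniques miss confident but incorrect answers.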

Self-Awareness and Consistency:

- The evaluated models showed limited self-awareness and consistency in their responses. The instruction-finetuned Llama variants performed better than their non-finetuned counterparts, which suggests that instruction fine-tuning improves performance on this task.

Implications and Speculations for Future Developments

Practical Implications:

- The evident unreliability of current LLMs in scientific communication calls for cautious adoption in academic and research settings. Because these models can deliver incorrect information convincingly, their unregulated use in conveying scientific concepts and findings carries substantial risk.

Theoretical Implications:

- The findings support the notion that mere parameter scaling is insufficient for achieving true comprehension and accuracy in AI models. Attention to model architecture and training methodologies, potentially informed by human cognitive processes, may be necessary.

Future Developments:

- Developing more sophisticated verification mechanisms within LLMs is a plausible avenue of research. Integrating mechanisms that better estimate the uncertainty of model responses might reduce the risk of confident but misleading assertions.

- Mixed approaches that combine human oversight with automated checks might help improve the output quality of these models.

- More advanced datasets with even more nuanced and interdisciplinary queries could be curated to push the boundaries of current LLMs' reasoning capabilities.

Conclusion

The paper provides an insightful and rigorous assessment of the current capabilities and limitations of LLMs as science communicators. By introducing SCiPS-QA and employing thorough evaluation frameworks, the authors deliver compelling evidence that while LLMs hold promise, there are significant hurdles to overcome before they can be deemed reliable for scientific communication. These findings urge the AI research community to address these limitations through innovative approaches to model training, evaluation, and application.