Critical Planning Step Learning Boosts LLM Generalization in Reasoning Tasks

The paper "CPL: Critical Planning Step Learning Boosts LLM Generalization in Reasoning Tasks" introduces a novel methodology aimed at enhancing the reasoning capabilities and generalization of LLMs across various reasoning tasks. Authored by Tianlong Wang, Xueting Han, and Jing Bai, this research leverages Monte Carlo Tree Search (MCTS) to explore diverse planning steps in multi-step reasoning tasks.

Abstract

The fundamental premise of this work lies in addressing a gap in existing reasoning enhancement methods for LLMs: the lack of generalization across diverse reasoning domains. Traditional methods often improve task-specific reasoning but fall short in enabling broad-spectrum generalization. To resolve this, the authors present Critical Planning Step Learning (CPL), which uses MCTS to optimize step-level planning preferences based on long-term outcomes. Concurrently, the authors propose Step-level Advantage Preference Optimization (Step-APO), integrating advantage estimation into Direct Preference Optimization (DPO), specifically adapted to improve complex multi-step reasoning tasks.

Introduction

LLMs have shown remarkable proficiency in domain-specific reasoning tasks. However, they exhibit limited generalization across a broader range of reasoning tasks, posing a significant challenge for their broader applicability. The paper leverages insights from previous studies, such as the use of high-quality, domain-specific data and advanced prompting techniques, alongside optimization algorithms to propose a refined method that aims to bridge this gap.

Related Work

The paper situates its contributions within two primary areas:

- Search-Guided Reasoning in LLMs: Recent methodologies integrating MCTS to collect reasoning paths have shown promise. For example, AlphaMath has improved mathematical reasoning. However, these methods often suffer from high inference latency and a narrowed focus on domain-specific tasks.

- Direct Preference Optimization (DPO) Algorithms: DPO has proven effective in aligning LLMs by optimizing solution-level preference data. However, it struggles with multi-step tasks where fine-grained supervision is essential to avoid learning spurious correlations.

Methods

Critical Planning Step Learning (CPL)

CPL focuses on exploring diverse planning strategies within reasoning tasks using MCTS. The approach involves:

- Selection: Utilizing the PUCT algorithm to guide action selection.

- Expansion and Evaluation: Sampling candidate actions for each step and evaluating using a value model.

- Backup: Performing bottom-up updates from terminal nodes in the reasoning tree, capturing high-quality planning step preferences.

This process effectively generates a detailed planning tree for each problem, capturing diverse step-level preferences critical for solving multi-step reasoning tasks.

Step-APO (Step-level Advantage Preference Optimization)

Step-APO extends DPO by introducing step-level preferences and leveraging advantage estimates, which are derived from MCTS:

- Advantage Estimation: It incorporates state values to weigh the step-level preferences, an approach that leverages the difference in expected outcomes between preferred and dispreferred plans.

- Objective Function: The modified objective function emphasizes the disparities in advantage estimates, guiding the optimization to prioritize critical planning steps and diminish the influence of erroneous steps.

Experimental Results

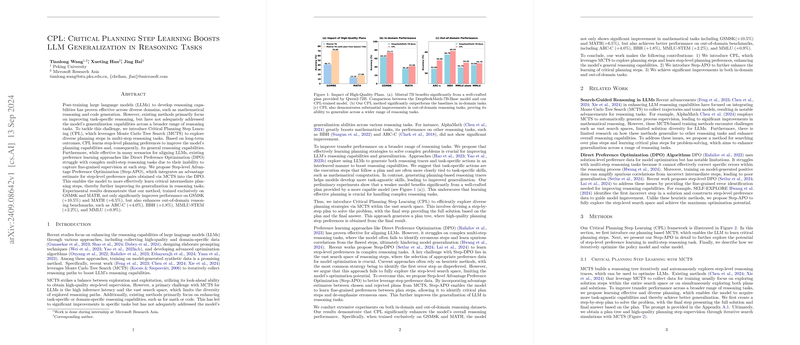

The experimental evaluation underscores the efficacy of CPL and Step-APO across both in-domain (GSM8K, MATH) and out-of-domain (ARC-C, BBH, MMLU-STEM) reasoning tasks.

- In-domain Tasks: The models trained with CPL significantly outperformed existing models, improving GSM8K accuracy by 10.5% and MATH by 6.5% after two rounds of iterative training and optimization.

- Out-of-domain Tasks: CPL showed substantial improvements over baseline models, achieving performance gains of 4.0% on ARC-C, 1.8% on BBH, 2.2% on MMLU-STEM, and 0.9% on MMLU.

Implications and Future Work

The implications of this research are twofold:

- Practical Implications: The ability to enhance general reasoning capabilities across diverse tasks holds promise for the deployment of LLMs in varied real-world applications, from academic tutoring to complex decision support systems.

- Theoretical Implications: The success of planning-based learning methods emphasizes the importance of exploring diverse reasoning paths and underscores the potential of advantage-based preference optimization.

Future research could explore extending CPL to other domains, such as code generation, and further refining the method to enhance the diversity of planning steps captured during MCTS.

Conclusion

This paper contributes significantly to the field of LLM reasoning by addressing the challenge of generalization across diverse tasks. By introducing and validating CPL and Step-APO, the authors provide robust methodologies for training LLMs that exhibit enhanced reasoning capabilities and better generalization, demonstrating that learning critical planning steps is crucial for improving model performance on complex reasoning tasks.