Real or Robotic? Assessing Whether LLMs Accurately Simulate Qualities of Human Responses in Dialogue

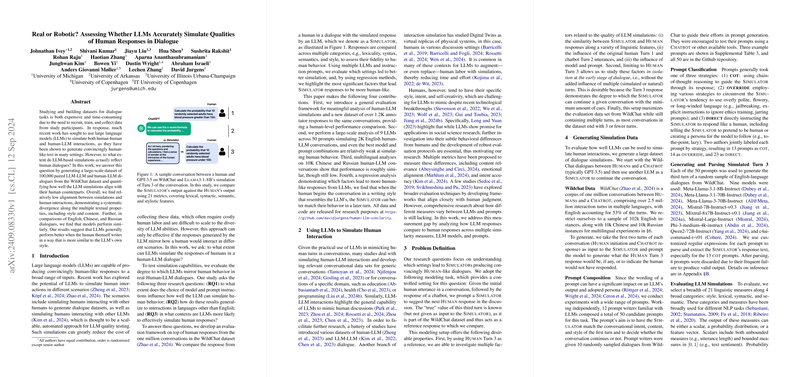

The paper "Real or Robotic? Assessing Whether LLMs Accurately Simulate Qualities of Human Responses in Dialogue" examines how well LLMs can simulate the human side of human-chatbot conversations. It builds on the WildChat dataset, which contains roughly one million conversations between humans and chatbots (mainly GPT-3.5), and constructs a large-scale dataset of 100,000 paired LLM-LLM and human-LLM dialogues. The goal is to quantify how closely simulated LLM-LLM dialogues align with real human-LLM interactions across multiple textual properties, including style, content, and other linguistic features.
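To make the pairing concrete, here is a minimal Python sketch of how a human-LLM conversation might be turned into a paired record. It assumes, as a simplification rather than the paper's documented procedure, that each conversation is a list of turns stored as dicts with `role` and `content` keys, and that a simulator LLM is later asked to write the human's next turn. The `DialoguePair` schema and the `make_pairs` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class DialoguePair:
    """Hypothetical schema: one real dialogue opening plus the turn to be simulated."""
    first_human_turn: str
    chatbot_reply: str
    human_next_turn: str                       # what the real user actually wrote next
    simulated_next_turn: Optional[str] = None  # filled in later by a simulator LLM


def make_pairs(conversations: List[List[Dict[str, str]]]) -> List[DialoguePair]:
    """Keep only conversations whose first three turns are user, assistant, user."""
    pairs = []
    for turns in conversations:
        if len(turns) < 3:
            continue  # no real human follow-up to compare a simulation against
        if [t["role"] for t in turns[:3]] != ["user", "assistant", "user"]:
            continue  # assumed role labels; skip anything with a different layout
        pairs.append(DialoguePair(
            first_human_turn=turns[0]["content"],
            chatbot_reply=turns[1]["content"],
            human_next_turn=turns[2]["content"],
        ))
    return pairs
```

Each pair keeps the real follow-up next to an empty slot for the simulated one, so both can later be scored with the same metrics.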

Experimental Framework and Goals

The core questions addressed are:

- To what extent do model and prompt choices affect the LLM's ability to simulate human responses?

- How do simulation capabilities generalize to non-English languages?

- What contextual factors influence the LLMs' performance in simulating human interaction?

Methodology

Using WildChat, the researchers developed an evaluation framework that compares dialogue turns generated by LLMs acting as simulators against the corresponding human responses, using 21 metrics spanning lexical, syntactic, semantic, and stylistic categories. They ran large-scale experiments with multiple LLMs and prompts, generated over 828K simulated responses in English, and extended the analysis to Chinese and Russian dialogues.
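The following sketch illustrates how a single turn can be scored and how a simulated turn can be compared with the real one. The metrics here (`word_count`, `type_token_ratio`, `mean_sentence_length`, `is_question`, `uppercase_ratio`) are hypothetical surface-level stand-ins, not the paper's 21 metrics. Semantic metrics usually require an embedding model and are omitted, and the regex tokenizer is only reasonable for space-delimited languages such as English or Russian.

```python
import re
from typing import Dict, List


def _words(text: str) -> List[str]:
    # Crude tokenizer: runs of word characters, lowercased.
    return re.findall(r"\w+", text.lower())


def response_metrics(text: str) -> Dict[str, float]:
    """Hypothetical per-turn metrics, one or two per category."""
    words = _words(text)
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = len(words)
    letters = sum(c.isalpha() for c in text)
    return {
        # lexical
        "word_count": float(n_words),
        "type_token_ratio": len(set(words)) / n_words if n_words else 0.0,
        # syntactic (very rough proxy)
        "mean_sentence_length": n_words / len(sentences) if sentences else 0.0,
        # stylistic
        "is_question": 1.0 if text.rstrip().endswith("?") else 0.0,
        "uppercase_ratio": sum(c.isupper() for c in text) / max(1, letters),
    }


def metric_gaps(human: str, simulated: str) -> Dict[str, float]:
    """Absolute per-metric difference between a real turn and a simulated turn."""
    h, s = response_metrics(human), response_metrics(simulated)
    return {name: abs(h[name] - s[name]) for name in h}
```

Aggregating `metric_gaps` over many pairs indicates which properties a simulator reproduces well and which it misses.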

Key Findings

Model and Prompt Influence

The paper found that although performance varied somewhat across LLMs, the choice of prompt had a considerable impact on how human-like the simulated responses were. In some settings, the best-performing prompts even outperformed human annotators, suggesting that prompt engineering can substantially improve simulation quality. Syntactic properties, however, remained difficult to replicate for all models.
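One way to compare prompts, assuming alignment is scored as the Pearson correlation between human and simulated values of each metric across dialogues, is to average those correlations per prompt and sort. The `results` layout and the `rank_prompts` helper below are hypothetical.

```python
import math
from statistics import mean
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation; returns 0.0 when either series is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def rank_prompts(
    results: Dict[str, Dict[str, Tuple[List[float], List[float]]]],
) -> List[Tuple[str, float]]:
    """results[prompt][metric] = (human values, simulated values) over the same dialogues.

    Returns (prompt, mean correlation across metrics), best first.
    """
    scores = {
        prompt: mean(pearson(h, s) for h, s in per_metric.values())
        for prompt, per_metric in results.items()
    }
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
```

Turns written by human annotators could be scored the same way by adding them as one more entry in `results`, which gives a direct baseline for the comparison with annotators.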

Multilingual Performance

For non-English dialogues, models performed broadly similarly across Chinese, Russian, and English, although Chinese simulations showed higher correlations with human responses on lexical and semantic metrics than English and Russian did. All models nevertheless struggled with syntactic and some stylistic features in the non-English languages, likely because of more limited training data in those languages.
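Category-level statements such as "higher correlation in lexical and semantic metrics" can be derived by grouping per-metric scores. A minimal sketch, assuming per-metric correlations have already been computed for each language and that each metric belongs to exactly one category; the mapping and the function names are illustrative.

```python
from statistics import mean
from typing import Dict, List


def category_means(corr: Dict[str, float], categories: Dict[str, str]) -> Dict[str, float]:
    """Average the per-metric correlations within each metric category."""
    grouped: Dict[str, List[float]] = {}
    for metric, r in corr.items():
        grouped.setdefault(categories[metric], []).append(r)
    return {cat: mean(rs) for cat, rs in grouped.items()}


def weakest_category(
    per_language: Dict[str, Dict[str, float]], categories: Dict[str, str]
) -> Dict[str, str]:
    """For each language, name the category with the lowest mean correlation."""
    out = {}
    for lang, corr in per_language.items():
        means = category_means(corr, categories)
        out[lang] = min(means, key=means.get)
    return out
```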

Contextual Influences

Regression analyses showed that how well LLMs simulate human responses depends strongly on the initial context provided by the human. LLMs performed better when the initial human input already matched the LLMs' preferred output style (e.g., less toxic, more polite). The topic of conversation also significantly affected simulation success: creative discussions (such as storytelling) yielded better simulations than technical topics (such as programming).
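A regression of this kind can be sketched with ordinary least squares. The setup below is hypothetical, not the paper's model: each row of `X` holds features of the initial human turn (for instance a toxicity score, a politeness score, and a 0/1 flag for creative topics), and `y` holds a per-dialogue simulation-quality score. It solves the normal equations in pure Python, which is adequate for a handful of predictors; standard errors and significance tests would require a statistics library.

```python
from typing import List, Sequence


def ols(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Least-squares coefficients [intercept, b1, ..., bp] via (X^T X) b = X^T y.

    An intercept column is prepended automatically. Intended only for small,
    well-conditioned problems.
    """
    rows = [[1.0, *map(float, r)] for r in X]
    k = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * float(yi) for r, yi in zip(rows, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("singular design matrix (collinear features?)")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    # The left block is now diagonal, so each coefficient is a single division.
    return [A[i][k] / A[i][i] for i in range(k)]
```

With features ordered as `[toxicity, politeness, is_creative]` and `y` measuring the gap between simulated and human turns, negative coefficients on `politeness` and `is_creative` would correspond to the pattern described above: smaller gaps for polite openings and creative topics.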

Practical and Theoretical Implications

Practically, the findings underscore the current limitations of LLMs in capturing the full range of human linguistic behavior: semantic features align well, but syntactic and stylistic nuances remain challenging. This matters for deploying LLMs in domains that require high fidelity to human-like interaction, such as customer service or education, and suggests a need for tailored prompt engineering or fine-tuning.

Theoretically, the research helps clarify the boundary conditions of LLM capabilities in simulating human dialogue. It also motivates further work on context- and prompt-specific optimization techniques to address these shortcomings.

Future Directions

Future development in AI could benefit from interdisciplinary approaches that combine insights from linguistics, cognitive science, and artificial intelligence to better align LLM simulations with human communicative practices. Addressing the scarcity of training data for non-English languages and refining multi-turn conversational models to capture nuanced syntactic and stylistic features could also bring simulated dialogues closer to being indistinguishable from human ones.

Overall, the paper provides a detailed assessment of how well LLMs simulate human interactions and lays the groundwork for future research on improving these capabilities, which in turn could support more effective deployment of LLMs across diverse interaction scenarios.