Summary and Analysis of "RLPF: Reinforcement Learning from Prediction Feedback for User Summarization with LLMs"

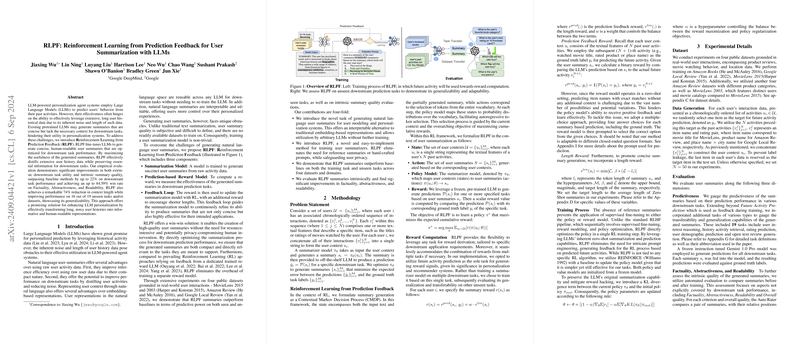

The paper "RLPF: Reinforcement Learning from Prediction Feedback for User Summarization with LLMs," by researchers at Google DeepMind and Google, introduces a method for improving LLM personalization agents by fine-tuning an LLM to summarize user histories. The method, Reinforcement Learning from Prediction Feedback (RLPF), aims to produce concise, human-readable user summaries that remain highly useful for downstream tasks.

Motivation and Method

Current LLM-powered personalization systems often struggle to make use of long user interaction histories, which are noisy and voluminous. Moreover, off-the-shelf pre-trained LLMs may produce summaries that are concise but omit context that downstream tasks need. RLPF addresses this by fine-tuning an LLM with reinforcement learning to maximize the downstream usefulness of its summaries, so that they retain the essential information in a user's history while staying concise and readable.

RLPF operates via three core components:

- Summarization Model: Fine-tuned to condense raw activity data into coherent summaries.

- Prediction-based Reward Model: Scores a summary by how well a downstream prediction task can be performed using only that summary.

- Feedback Loop: Uses these rewards to iteratively update the summarization model through reinforcement learning; the reward also includes a length-based term so that summaries stay concise.
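The reward described in the list above can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: `predictor` is a hypothetical stand-in for the frozen LLM that answers a downstream question from the summary alone, the prediction term is assumed to be binary (1 for a correct answer), and the linear word-budget penalty, `max_words`, and `length_weight` are hypothetical choices.

```python
from typing import Callable

# Hypothetical stand-in for the frozen LLM that answers a downstream
# question using only the summary (never the raw user history).
Predictor = Callable[[str, str], str]


def prediction_reward(summary: str, question: str, answer: str,
                      predictor: Predictor) -> float:
    """Assumed binary reward: 1.0 if the predictor answers correctly from the summary."""
    guess = predictor(summary, question)
    return 1.0 if guess.strip().lower() == answer.strip().lower() else 0.0


def length_reward(summary: str, max_words: int = 50) -> float:
    """Hypothetical length term: 0 within the word budget, linear penalty beyond it."""
    n_words = len(summary.split())
    if n_words <= max_words:
        return 0.0
    return -(n_words - max_words) / max_words


def rlpf_reward(summary: str, question: str, answer: str, predictor: Predictor,
                length_weight: float = 0.5, max_words: int = 50) -> float:
    """Combined reward: prediction feedback plus a weighted length term."""
    return (prediction_reward(summary, question, answer, predictor)
            + length_weight * length_reward(summary, max_words))


# Toy predictor for illustration only: guesses "sci-fi" if the summary mentions it.
def toy_predictor(summary: str, question: str) -> str:
    return "sci-fi" if "sci-fi" in summary.lower() else "drama"


short = "Mostly watches and highly rates sci-fi films."
long_summary = short + " " + " ".join(["filler"] * 60)
print(rlpf_reward(short, "Genre of next movie?", "sci-fi", toy_predictor))
print(rlpf_reward(long_summary, "Genre of next movie?", "sci-fi", toy_predictor))
```

Both summaries let the toy predictor answer correctly, but the padded one exceeds the word budget and earns a lower total reward. Keeping the two terms separate makes the trade-off explicit: raising `length_weight` pushes the policy toward shorter summaries at some risk to predictiveness.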

The method requires neither reference summaries nor elaborate hand-crafted prompts. Because it needs no human-written labels, no one has to read raw user histories to produce training data, which makes the approach more privacy-preserving.
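To see why no reference summary is needed, here is a toy policy-gradient loop whose only training signal is a scalar reward computed from each sampled summary. It is a deliberately simplified sketch, not the paper's training setup: the "policy" is a softmax over a fixed, hypothetical set of candidate summaries rather than an LLM, `toy_reward` is made up for illustration, and the optimizer is plain REINFORCE with a moving-average baseline, which may differ from what the authors use.

```python
import math
import random
from typing import Callable, List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(candidates: List[str], reward_fn: Callable[[str], float],
              steps: int = 2000, lr: float = 0.5, seed: int = 0) -> List[float]:
    """Toy REINFORCE: learns which candidate summary to emit from rewards alone."""
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)  # one logit per candidate "action"
    baseline = 0.0                    # running mean reward, reduces gradient variance
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(candidates)), weights=probs)[0]
        reward = reward_fn(candidates[i])
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # Gradient of log softmax(logits)[i] w.r.t. logit j is 1[j == i] - probs[j].
        for j in range(len(logits)):
            indicator = 1.0 if j == i else 0.0
            logits[j] += lr * advantage * (indicator - probs[j])
    return softmax(logits)


# Hypothetical candidates and reward: +1 if the summary carries the predictive
# signal ("sci-fi"), minus a small per-word cost. No reference summary is used.
candidates = [
    "Watches movies.",
    "Prefers sci-fi films.",
    "Prefers sci-fi films, " + " ".join(["detail"] * 40),
]


def toy_reward(summary: str) -> float:
    signal = 1.0 if "sci-fi" in summary else 0.0
    return signal - 0.01 * len(summary.split())


final_probs = reinforce(candidates, toy_reward)
best = max(range(len(candidates)), key=lambda k: final_probs[k])
print(candidates[best])
```

The loop never compares a summary against a gold answer; it only sees how useful each sampled summary turned out to be, which is the property that lets RLPF train without human-written summaries.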

Empirical Evaluation and Results

The paper evaluates RLPF on four datasets: MovieLens 2015, MovieLens 2003, Amazon Review, and Google Local Review. RLPF substantially outperforms the baseline methods:

- Up to a 22% improvement in downstream task performance.

- Up to an 84.59% win rate on intrinsic summary-quality metrics: Factuality, Abstractiveness, and Readability.

- A 74% reduction in context length, while improving performance on 16 out of 19 unseen tasks and/or datasets, which indicates that the learned summaries generalize well.
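The headline numbers are ratios, and it can help to see the arithmetic explicitly. The sketch below assumes that context-length reduction is measured relative to the original prompt length and that a pairwise win rate counts ties as half a win; the paper may define these differently. The token counts and judgments in the example are hypothetical, chosen only to illustrate what a 74% reduction means, and are not data from the paper.

```python
from collections import Counter
from typing import Iterable


def relative_reduction(before: float, after: float) -> float:
    """Percent reduction from `before` to `after` (e.g. prompt tokens)."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100.0 * (before - after) / before


def win_rate(judgments: Iterable[str]) -> float:
    """Pairwise win rate; assumes labels 'win', 'loss', or 'tie', with ties counted as half."""
    counts = Counter(judgments)
    unknown = set(counts) - {"win", "loss", "tie"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments")
    return (counts["win"] + 0.5 * counts["tie"]) / total


# Hypothetical example: a 1,000-token history replaced by a 260-token summary.
print(relative_reduction(1000, 260))
print(win_rate(["win", "win", "tie", "loss"]))
```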

Summaries from the RLPF-trained policy model are generally more predictive on unseen tasks while preserving readability and factuality. Unlike conventional RL pipelines such as RLHF, RLPF does not train a separate reward model; its reward comes directly from downstream prediction feedback, which simplifies the training pipeline and reduces computational overhead.

Implications and Future Work

RLPF is a notable advance in user-summary generation for LLM-powered systems. Its gains in both summary quality and downstream task performance suggest it could be useful across domains ranging from personalized recommendation to user modeling.

Conceptually, RLPF extends the family of RL-based LLM fine-tuning methods, such as RL from Human Feedback (RLHF) and RL from AI Feedback (RLAIF), by using downstream prediction performance as the feedback signal. Practically, it suits applications where user data must be condensed and interpreted quickly without losing actionable information.

Future Directions

Future research may explore several avenues:

- Extending RLPF to more nuanced user contexts, including multi-modal data sources such as visual interactions and audio cues.

- Incorporating additional feedback mechanisms, potentially integrating user-generated feedback to further refine the summarization model.

- Applying RLPF to other domains within AI, particularly where concise and contextually rich summarization proves beneficial, such as educational environments or content recommendation engines.

By further improving the interpretability and efficiency of user summaries, work building on RLPF could broaden the impact of LLMs in personalization and beyond.

This paper points toward more robust personalization systems in which even long user histories can be used effectively without overwhelming the underlying models or degrading the quality of interactions.