In Defense of RAG in the Era of Long-Context LLMs

The paper "In Defense of RAG in the Era of Long-Context LLMs" by Tan Yu, Anbang Xu, and Rama Akkiraju compares Retrieval-Augmented Generation (RAG) with contemporary long-context LLMs on long-context question-answering tasks. It argues that RAG remains relevant for these tasks and, with the right retrieval design, can outperform long-context models.

Introduction

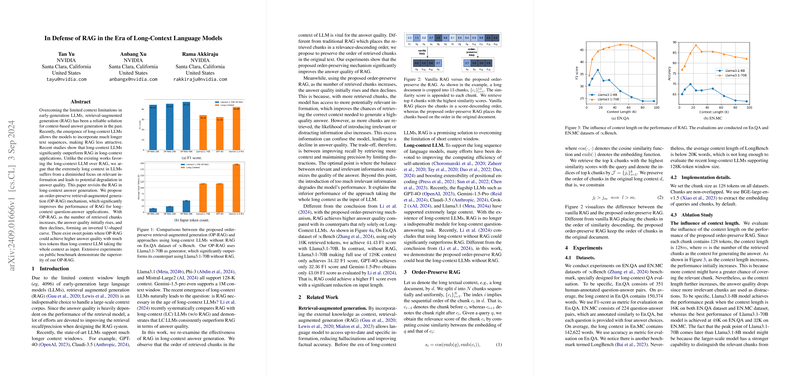

Retrieval-Augmented Generation (RAG) has long served as a solution to the context window limitations inherent in earlier generations of LLMs. By integrating specific, external knowledge into LLMs, RAG systems enhance factual accuracy and reduce hallucinations. Now that LLMs support much larger context windows (e.g., up to 1M tokens), recent literature suggests that these long-context LLMs generally outperform RAG in extended-context scenarios. The authors of this paper, however, contend that excessively long contexts can dilute the focus on pertinent information, thereby potentially degrading the quality of generated answers.

Proposed Method: Order-Preserve RAG (OP-RAG)

The authors introduce Order-Preserve Retrieval-Augmented Generation (OP-RAG), a novel mechanism to enhance the performance of traditional RAG systems for long-context applications. Traditional RAG sorts retrieved chunks purely based on relevance scores in descending order. In contrast, OP-RAG maintains the original order of these chunks as they appear in the source document. This order-preservation is critical because it aligns more naturally with the narrative flow and intrinsic structure of the document, which are often disrupted by pure relevance-based ordering.
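The difference between the two orderings can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the cosine-similarity scoring, and the toy two-dimensional embeddings are all assumptions made for illustration. Both variants select the same top-k chunks; they differ only in the order in which those chunks are placed in the prompt.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def select_chunks(
    chunk_vecs: Sequence[Sequence[float]],
    query_vec: Sequence[float],
    top_k: int,
) -> Tuple[List[int], List[int]]:
    """Return the top_k chunk indices in two orders.

    chunk_vecs[i] is the (hypothetical) embedding of the i-th chunk,
    where i is the chunk's position in the source document.
    """
    scores = [cosine(vec, query_vec) for vec in chunk_vecs]
    # Relevance ordering: highest score first, as described for traditional RAG.
    by_relevance = sorted(
        range(len(scores)), key=lambda i: scores[i], reverse=True
    )[:top_k]
    # Order-preserving variant: same chunks, re-sorted by document position.
    in_document_order = sorted(by_relevance)
    return by_relevance, in_document_order


# Toy embeddings (illustrative values only), listed in document order.
chunk_vecs = [
    [0.1, 0.9],  # chunk 0
    [0.6, 0.8],  # chunk 1
    [1.0, 0.0],  # chunk 2
    [0.0, 1.0],  # chunk 3
]
query_vec = [0.0, 1.0]

vanilla_order, op_order = select_chunks(chunk_vecs, query_vec, top_k=3)
print("relevance order:", vanilla_order)
print("document order: ", op_order)
```

In this toy case the relevance ordering places chunk 3 before chunks 0 and 1, whereas the order-preserving variant restores the sequence in which the chunks appear in the document.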

Experimental Results

The experimental results are compelling, showing that OP-RAG outperforms both vanilla RAG and long-context LLMs without RAG in terms of quality and efficiency. On the En.QA and En.MC datasets from the ∞Bench benchmark, OP-RAG demonstrated notable improvements:

- On the En.QA dataset, OP-RAG with 48K retrieved tokens achieves an F1 score of 47.25 using the Llama3.1-70B model, compared with an F1 score of 34.26 for the long-context Llama3.1-70B without RAG, which takes 117K tokens as input.

- Similarly, on the En.MC dataset, OP-RAG achieves an accuracy of 88.65% with 24K tokens, outperforming other evaluated long-context LLMs without RAG.

These results underscore the claim that efficient retrieval and focused context utilization can significantly elevate model performance without necessitating massive token inputs.
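This summary does not spell out how the F1 score for En.QA is computed. A common choice for free-form question answering is token-overlap F1 between the predicted and reference answers, and the sketch below assumes that definition. The function name, the whitespace tokenization, and the lowercasing are illustrative assumptions, and the result is on a 0 to 1 scale rather than the 0 to 100 scale used for the figures above.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a predicted and a reference answer.

    Assumes simple lowercasing and whitespace tokenization; real
    evaluation scripts often also strip punctuation and articles.
    """
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection counts each shared token at most as often
    # as it appears in both answers.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


# Precision is 3/3 and recall is 3/6, so F1 = 2 * 1.0 * 0.5 / 1.5.
score = token_f1("the cat sat", "the cat sat on the mat")
print(round(score, 4))
```

Under this definition, a short answer that is fully correct but incomplete is penalized through recall, while a long answer padded with irrelevant tokens is penalized through precision.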

Theoretical and Practical Implications

The OP-RAG mechanism challenges the current trend that favours long-context LLMs by illustrating that a well-designed retrieval step lets a model use its context more effectively. This research also identifies a trade-off between including relevant information and excluding distracting content: as the number of retrieved chunks increases, answer quality first rises and then falls, producing an inverted U-shaped performance curve.

Practically, this means that NLP systems can achieve better performance not merely by expanding the context window but by refining how context is retrieved and presented to the model. This finding is crucial for applications confined by computational resources or seeking to reduce inference costs without sacrificing answer quality.

Future Prospects

The paper opens up several avenues for further exploration. Future research could delve into refining OP-RAG methods to dynamically adapt to different types of queries and documents, developing more sophisticated chunking strategies, or integrating OP-RAG with other state-of-the-art LLMs to explore symbiotic enhancements.

In conclusion, this paper provides a robust argument and empirical evidence supporting the sustained relevance and potential superiority of RAG, particularly through the proposed OP-RAG mechanism. It asserts that efficient, context-aware retrieval of information can match, if not surpass, the performance of even the most advanced long-context LLMs. Research in this direction could catalyze more optimized approaches to context handling in NLP.