MaFeRw: Query Rewriting with Multi-Aspect Feedbacks for Retrieval-Augmented LLMs

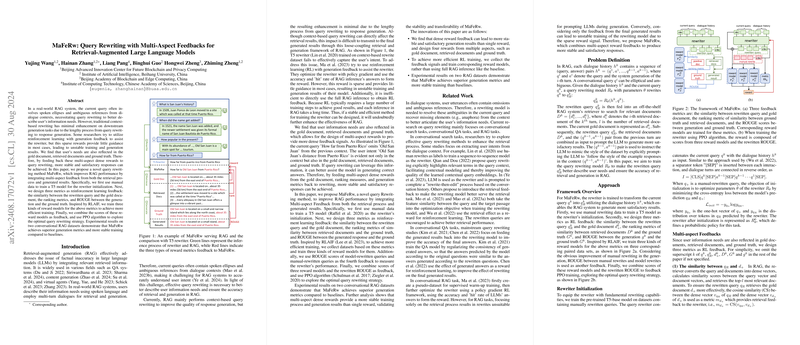

The paper "MaFeRw: Query Rewriting with Multi-Aspect Feedbacks for Retrieval-Augmented LLMs" proposes a query rewriting method for retrieval-augmented generation (RAG) systems. Its main contribution is a query rewriter trained with multi-aspect feedback, which targets common problems in real-world RAG applications such as ambiguous references and spoken ellipses in dialogue contexts.

In typical RAG systems, users' queries are often embedded within multi-turn dialogues, so the input is contextually rich but sometimes ambiguous. Traditional context-based rewriting techniques often fail to capture users' intents, in part because the pipeline from query rewriting to response generation is long. Some existing methods incorporate reinforcement learning (RL) with generation feedback, but they often suffer from sparse rewards, which leads to unstable training and suboptimal generation. MaFeRw instead uses dense rewards from multiple sources, including retrieved documents and ground truth data.

Methodology

Rewriter Initialization:

The query rewriter is initialized by training a T5 model using manually annotated data. The purpose is to equip the model with foundational query rewriting skills.

Multi-Aspect Feedbacks:

The core innovation of this work is the integration of multiple feedback mechanisms into the RL framework. Three primary metrics are designed:

- Similarity between rewritten query and gold document (m_{d+}): This metric uses cosine similarity to ensure that the rewritten query effectively retrieves relevant documents.

- Ranking metric based on retrieved documents and ground truth (m_{D}): This metric assesses how well the retrieval results are ordered in relevance to the ground truth.

- ROUGE score between generated responses and ground truth (m_{G}): This metric measures the alignment of the generated output with the expected answer.

Additionally, ROUGE between the model-rewritten query and the manually rewritten query (m_{q}) serves as another feedback signal.
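To make two of these signals concrete, here is a minimal sketch of a cosine similarity (as in m_{d+}) and a unigram-overlap ROUGE score (as in m_{G} and m_{q}). The function names, the toy vectors, and the choice of ROUGE-1 F1 with whitespace tokenization are assumptions for illustration; the summary above does not say which ROUGE variant or which embedding model the authors use.

```python
import math
from collections import Counter


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors (e.g. query and document embeddings)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # define similarity with a zero vector as 0
    return dot / (norm_u * norm_v)


def rouge1_f1(candidate, reference):
    """ROUGE-1 F1: harmonic mean of unigram precision and recall.

    Assumption: lowercased whitespace tokenization, no stemming.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped unigram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Toy usage: hypothetical 2-d "embeddings" and two short strings.
sim = cosine_similarity([1.0, 0.0], [1.0, 0.0])
score = rouge1_f1("the cat", "the cat sat")
```

In practice the vectors would come from whatever encoder the retriever uses, and a maintained ROUGE implementation would replace the hand-rolled one.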

Reward Models:

To mitigate the inefficiency of end-to-end RL training, the authors train three reward models corresponding to the primary metrics. This approach leverages a pre-trained T5-base model, augmented with a value head, to predict reward values based on the rewrite and historical context.

Reinforcement Learning:

The query rewriter is further refined using a PPO-based RL framework. The overall reward for training combines the scores from the reward models and the direct ROUGE metric, ensuring a balanced optimization objective.
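One simple way to combine these signals is a weighted sum, sketched below. This is an assumption: the summary only says the scores are combined, so the function name `combined_reward`, the linear form, and the default weights are all hypothetical rather than the authors' exact formulation.

```python
def combined_reward(r_gold_doc, r_ranking, r_generation, rouge_query,
                    weights=(1.0, 1.0, 1.0, 1.0)):
    """Combine three reward-model scores and the direct ROUGE score m_q.

    r_gold_doc, r_ranking, r_generation: outputs of the reward models
        trained on m_{d+}, m_{D}, and m_{G} respectively.
    rouge_query: ROUGE between the model rewrite and the manual rewrite (m_q).
    weights: hypothetical mixing coefficients, one per signal.
    """
    w_d, w_rank, w_gen, w_q = weights
    return (w_d * r_gold_doc
            + w_rank * r_ranking
            + w_gen * r_generation
            + w_q * rouge_query)


# Toy usage with made-up scores; this scalar would be the reward passed to PPO.
reward = combined_reward(0.5, 0.25, 0.25, 1.0)
```

A linear combination keeps each signal's contribution easy to inspect and tune, which is why it is used for this sketch.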

Experimental Evaluation

The proposed method is evaluated on two benchmark datasets, QReCC and TopiOCQA, with further transferability tests on the WSDM@24 Multi-Doc QA dataset. The evaluation metrics include ROUGE-1, ROUGE-L, BLEU, METEOR, and Mean Reciprocal Rank (MRR).
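For readers unfamiliar with MRR, the following sketch computes it from ranked retrieval results. It assumes one gold document per query; the function name and the document identifiers are illustrative and not taken from the paper.

```python
def mean_reciprocal_rank(ranked_lists, gold_ids):
    """Mean Reciprocal Rank over a set of queries.

    ranked_lists: one ranked list of document ids per query.
    gold_ids: the single relevant document id for each query (assumption).
    A query whose gold document is not retrieved contributes 0.
    """
    if not ranked_lists:
        return 0.0
    total = 0.0
    for ranked, gold in zip(ranked_lists, gold_ids):
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id == gold:
                total += 1.0 / rank  # reciprocal of the first matching rank
                break
    return total / len(ranked_lists)


# Toy usage: gold document at rank 1 for the first query, rank 2 for the second.
mrr = mean_reciprocal_rank([["a", "b"], ["c", "d", "e"]], ["a", "d"])
```

A better rewrite moves the gold document toward the top of the ranking, which raises the reciprocal rank for that query.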

Key Findings:

- Enhanced Generation Metrics: MaFeRw consistently outperforms baseline methods, including T5-based rewriters and RL-based rewriters, on both datasets.

- Stable and Efficient Training: Dense multi-aspect feedback leads to more stable training and better outcomes than RL methods that rely on a single reward.

- Transferability: The rewriter trained on QReCC generalizes well to the WSDM@24 Multi-Doc QA dataset.

- Retrieval Performance: The improvements in MRR indicate that MaFeRw enhances the quality of document retrieval, which is critical for subsequent generative tasks.

Implications and Future Directions

Theoretical Implications:

MaFeRw's multi-aspect feedback framework addresses the sparse reward problem in RL-based query rewriting for RAG systems. Dense reward signals drawn from several stages of the pipeline improve both retrieval and generation, resulting in more reliable and contextually appropriate responses.

Practical Implications:

Practically, MaFeRw can be integrated into various applications requiring complex information retrieval and generation, such as virtual assistants, QA systems, and content generation tools. The improvements in precision and stability are particularly beneficial for domains where accurate and contextually coherent information is crucial.

Future Developments:

Future work could explore document re-ranking methodologies to align retrieved documents more closely with user contexts. Additionally, extending the approach to handle more complex dialogue histories and incorporating dynamic prompt reconstruction could further enhance the model's capability to discern nuanced user intents.

In conclusion, the paper contributes a query rewriting method that improves both retrieval and generation quality in RAG systems. Using multi-aspect feedback signals as dense rewards makes RL training of the rewriter more stable and more effective than relying on sparse generation feedback alone.