MemLong: Memory-Augmented Retrieval for Long Text Modeling

The paper "MemLong: Memory-Augmented Retrieval for Long Text Modeling" introduces a method that extends the context LLMs can use by equipping them with an external retrieval mechanism. MemLong targets the central obstacle to long-context processing in LLMs. Attention has quadratic time and space complexity in sequence length, and the key-value (K-V) cache consumes more memory with every token generated.
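A quick calculation shows why the K-V cache becomes a bottleneck as context grows. The sketch below assumes a hypothetical 7B-class configuration: 32 layers, 32 attention heads of dimension 128, and fp16 storage. These numbers are illustrative and are not taken from the paper.

```python
def kv_cache_bytes(seq_len: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: one key tensor and one value tensor per layer.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem


def attention_score_entries(seq_len: int) -> int:
    """Query-key scores per head and layer for full attention (before causal masking)."""
    return seq_len * seq_len


# Hypothetical 7B-class configuration (assumption, not from the paper).
cfg = dict(n_layers=32, n_kv_heads=32, head_dim=128)

for n in (4_096, 80_000):
    gib = kv_cache_bytes(n, **cfg) / 2**30
    print(f"{n:>6} tokens: K-V cache {gib:5.1f} GiB, "
          f"{attention_score_entries(n):.2e} scores per head per layer")
```

Under these assumptions each token costs 0.5 MiB of cache. A 4,096-token context therefore needs 2 GiB, while 80,000 tokens need about 39 GiB before any weights or activations are counted. The score matrix grows quadratically as well.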

Core Contributions

MemLong makes three core contributions:

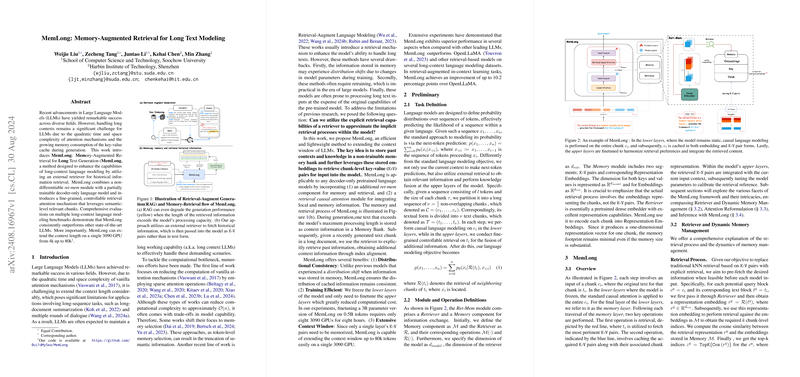

- Memory-Augmented Retrieval: MemLong adds a non-differentiable ret-mem module that stores past contexts in a memory bank and retrieves chunk-level K-V pairs when they are needed. Because storage and retrieval are decoupled, the model can draw on far more context than its native window holds.

- Fine-Grained Retrievable Attention: A retrieval causal attention module lets each token attend both to its local causal context and to the K-V pairs of retrieved memory chunks. This combines local information with semantically relevant history.

- Efficiency and Practicality: Only the upper layers of the model are fine-tuned while the lower layers stay frozen, which substantially reduces training cost.
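The storage-and-retrieval idea behind the ret-mem module can be illustrated with a toy memory bank. Each stored chunk keeps one retrieval embedding together with its cached keys and values. A query embedding selects the top-k chunks by cosine similarity, and their K-V pairs are concatenated for attention.

This is a minimal sketch under stated assumptions. The class and method names (`ChunkMemory`, `store`, `retrieve`, `gather_kv`) are hypothetical. The embeddings are random stand-ins for a real retriever, and the paper's actual retriever and similarity measure may differ.

```python
import numpy as np


class ChunkMemory:
    """Hypothetical toy memory bank: one retrieval embedding and cached K-V per chunk."""

    def __init__(self):
        self.embeddings = []  # unit-normalized retrieval embedding per chunk
        self.kv = []          # (keys, values) per chunk, each of shape (chunk_len, head_dim)

    def store(self, embedding, keys, values):
        # Normalize once so that a dot product equals cosine similarity.
        self.embeddings.append(embedding / np.linalg.norm(embedding))
        self.kv.append((keys, values))

    def retrieve(self, query_embedding, k):
        """Indices of the k most similar chunks, returned in storage (temporal) order."""
        if not self.embeddings:
            return []
        q = query_embedding / np.linalg.norm(query_embedding)
        sims = np.stack(self.embeddings) @ q
        top = np.argsort(-sims)[:k]
        return sorted(int(i) for i in top)

    def gather_kv(self, indices):
        """Concatenate the cached keys and values of the selected chunks along the token axis."""
        keys = np.concatenate([self.kv[i][0] for i in indices], axis=0)
        values = np.concatenate([self.kv[i][1] for i in indices], axis=0)
        return keys, values


# Usage: store five random chunks, then query with a vector close to chunk 3.
rng = np.random.default_rng(0)
mem = ChunkMemory()
for _ in range(5):
    mem.store(rng.normal(size=8), rng.normal(size=(4, 16)), rng.normal(size=(4, 16)))

query = mem.embeddings[3] + 0.01 * rng.normal(size=8)
idx = mem.retrieve(query, k=2)
keys, values = mem.gather_kv(idx)
print(idx, keys.shape)
```

Retrieved chunks are returned in storage order rather than score order. That choice is an assumption, made so that concatenated memory keeps the temporal order of the original text.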

Theoretical and Practical Implications

Theoretical Implications:

- Addressing Distributional Shift: In earlier memory-augmented models, the representations stored in memory went stale as training updated the weights that produced them, creating a distribution shift between stored and newly computed values. MemLong mitigates this by freezing the lower layers and fine-tuning only the upper layers, so stored information remains consistent with the retrieval embeddings.

- Scalability and Extensibility: Extending the context window to 80k tokens on a single GPU is a significant step for long-context language modeling. Past K-V pairs are cached in memory and only relevant chunks are retrieved, so each step attends to a bounded set of keys instead of recomputing attention over the entire history.
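The retrieval causal attention described among the contributions, and the bounded-cost argument above, can be sketched as single-head attention over two key blocks. One block holds the retrieved memory chunks, which all precede the current chunk and are therefore fully visible. The other holds the current chunk's own keys under an ordinary causal mask.

This is a simplified illustration. It omits multiple heads, positional encodings, and the question of which layers use memory, and the function name is hypothetical.

```python
import numpy as np


def retrieval_causal_attention(q, k_local, v_local, k_mem, v_mem):
    """Single-head attention over retrieved memory K-V plus local causal K-V.

    q, k_local, v_local: (L, d) arrays for the current chunk.
    k_mem, v_mem: (M, d) arrays holding keys/values of retrieved past chunks.
    Returns (output of shape (L, d), attention weights of shape (L, M + L)).
    """
    L, d = q.shape
    M = k_mem.shape[0]
    keys = np.concatenate([k_mem, k_local], axis=0)    # (M + L, d)
    values = np.concatenate([v_mem, v_local], axis=0)  # (M + L, d)
    scores = q @ keys.T / np.sqrt(d)                   # (L, M + L)

    # Memory block: fully visible. Local block: lower-triangular (causal).
    mask = np.concatenate([np.ones((L, M), dtype=bool),
                           np.tril(np.ones((L, L), dtype=bool))], axis=1)
    scores = np.where(mask, scores, -np.inf)

    # Numerically stable softmax over the key axis; masked entries become 0.
    scores -= scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ values, weights


rng = np.random.default_rng(0)
L, M, d = 4, 8, 16
q, k_loc, v_loc = (rng.normal(size=(L, d)) for _ in range(3))
k_mem, v_mem = rng.normal(size=(M, d)), rng.normal(size=(M, d))
out, w = retrieval_causal_attention(q, k_loc, v_loc, k_mem, v_mem)
print(out.shape, w.shape)
```

Each query scores at most M + L keys, where M is the number of retrieved tokens. This cost does not depend on how long the full history is, which is the property the scalability point relies on.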

Practical Implications:

- Enhanced Long Text Applications: MemLong's ability to manage extended contexts efficiently paves the way for improved performance in applications such as long-document summarization and multi-turn dialogues.

- Resource Efficiency: By removing the need to retrain the entire model, MemLong offers a practical way to deploy LLMs on GPUs with limited memory, broadening access to high-performance LLMs.
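The saving from fine-tuning only the upper layers can be estimated by counting parameters. The sketch below rests on several assumptions. It uses a hypothetical 3B-class decoder (26 layers, `d_model` 3200, gated MLP width 8640, vocabulary 32,000). It assumes the lower half of the layers, the embeddings, and an untied LM head are all frozen, and it counts only Adam's two fp32 moment buffers as optimizer state. The paper's exact split between frozen and trainable layers may differ.

```python
from dataclasses import dataclass


@dataclass
class DecoderConfig:
    n_layers: int
    d_model: int
    d_ff: int
    vocab: int


def layer_params(cfg: DecoderConfig) -> int:
    """Approximate weights in one decoder layer, ignoring norms and biases."""
    attention = 4 * cfg.d_model * cfg.d_model  # Q, K, V, O projections
    mlp = 3 * cfg.d_model * cfg.d_ff           # gated MLP: gate, up, down
    return attention + mlp


def param_counts(cfg: DecoderConfig, n_trainable_upper: int) -> tuple[int, int]:
    """(total, trainable) parameters when only the top n layers are fine-tuned.

    Embeddings and an untied LM head are counted in the total but assumed frozen.
    """
    per_layer = layer_params(cfg)
    total = cfg.n_layers * per_layer + 2 * cfg.vocab * cfg.d_model
    trainable = n_trainable_upper * per_layer
    return total, trainable


def adam_state_gib(n_trainable: int) -> float:
    """Memory for Adam's two fp32 moment estimates per trainable parameter."""
    return n_trainable * 2 * 4 / 2**30


cfg = DecoderConfig(n_layers=26, d_model=3200, d_ff=8640, vocab=32000)  # hypothetical
total, trainable = param_counts(cfg, n_trainable_upper=13)
print(f"trainable fraction: {trainable / total:.1%}")
print(f"Adam state: {adam_state_gib(total):.1f} GiB (full) "
      f"vs {adam_state_gib(trainable):.1f} GiB (upper half only)")
```

Under these assumptions, fewer than half of the parameters receive gradients, and optimizer state shrinks by roughly the same factor. Every frozen layer sits below every trainable one, so backpropagation can also stop at the lowest trainable layer.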

Experimental Results

The paper's results show MemLong performing strongly across multiple long-context language modeling benchmarks. It outperforms state-of-the-art LLMs such as OpenLLaMA as well as other retrieval-augmented models. The experiments also show that MemLong can extend the usable context length to 80k tokens on a single GPU.

Numerical Results:

- In retrieval-augmented in-context learning tasks, MemLong achieves up to a 10.2 percentage point improvement over OpenLLaMA.

- For long-context language modeling, MemLong consistently achieves lower perplexity on datasets such as PG19, Proof-pile, and BookCorpus, and its performance holds up as context length increases.
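Perplexity, the metric behind the language modeling results above, is the exponential of the mean negative log-likelihood the model assigns to each observed next token. Lower values mean the model is less "surprised" by the text. The helper below is a generic definition, not the paper's evaluation script, and its name is hypothetical.

```python
import math


def perplexity(token_log_probs):
    """exp(mean negative log-likelihood) over the predicted tokens.

    token_log_probs: natural-log probabilities the model assigned to each
    actual next token in the evaluation text.
    """
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model assigning probability 0.5 to one token and 0.125 to the next has a
# mean NLL of ln 4, i.e. it is as uncertain as a uniform choice among 4 tokens.
print(perplexity([math.log(0.5), math.log(0.125)]))
```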

Comparative Performance Analysis

MemLong exhibits distinct advantages when compared to other solutions:

- Sparse Attention Models: Methods like LongLoRA reduce computational complexity but often trade off model capability, particularly the integrity of local context. MemLong avoids this by retrieving whole chunks, which keeps semantic structures coherent.

- Retrieval-Augmented LLMs: Unlike methods such as MemTrm, which suffer from shifts in the distribution of stored memory, MemLong keeps memory stable through dynamic memory updates and frozen lower layers.
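The stability argument can be checked with a toy model. Keys are written to memory once from a "lower layer", training continues, and the stored keys are then compared against what the current weights would produce. Random updates stand in for real gradient steps, and the two-matrix model and function names are hypothetical simplifications. The sketch only illustrates why freezing the layers that produce memory entries prevents them from going stale.

```python
import numpy as np


def lower_layer(x, w_low):
    """Toy 'lower layers': the representation that gets cached in memory."""
    return np.tanh(x @ w_low)


def fake_update(w, rng, lr=0.1):
    """Stand-in for one optimizer step (random direction, not real backprop)."""
    return w - lr * rng.normal(size=w.shape)


def memory_staleness(freeze_lower: bool, steps: int = 10, seed: int = 0) -> float:
    """Max drift between keys stored at step 0 and keys recomputed after training."""
    rng = np.random.default_rng(seed)
    d = 8
    x = rng.normal(size=(4, d))                   # inputs of a past chunk
    w_low = rng.normal(size=(d, d)) / np.sqrt(d)
    w_up = rng.normal(size=(d, d)) / np.sqrt(d)
    stored = lower_layer(x, w_low)                # written to memory once
    for _ in range(steps):
        w_up = fake_update(w_up, rng)             # upper layers always train...
        if not freeze_lower:
            w_low = fake_update(w_low, rng)       # ...full fine-tuning also moves these
    return float(np.abs(stored - lower_layer(x, w_low)).max())


print("frozen lower layers:   ", memory_staleness(freeze_lower=True))
print("trainable lower layers:", memory_staleness(freeze_lower=False))
```

With frozen lower layers, the drift is exactly zero because the cached keys are recomputed from unchanged weights. When the lower layers train, the stored keys diverge from what the current model would produce, which is the distribution shift described for MemTrm.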

Future Directions

The promising results of MemLong open up several potential avenues for further research:

- Model Scaling: Future work could explore the application of MemLong to larger models and varied architectures to validate the scalability and generalizability of the proposed approach.

- Advanced Retrieval Algorithms: Enhancing retriever efficiency by experimenting with different embedding techniques and retrieval algorithms could further optimize memory usage and retrieval accuracy.

- Real-World Applications: Testing MemLong in real-world deployments, particularly in applications requiring extensive context utilization such as legal document analysis or scientific literature review, would provide valuable insights into its practical utility.

Conclusion

MemLong represents a significant advance in language modeling by addressing the longstanding challenge of long-context processing. By combining memory-augmented retrieval with fine-grained retrieval causal attention, it extends the context window dramatically without prohibitive computational cost. Its ability to store and reuse historical information makes it a useful tool for a wide range of natural language processing applications. The paper's findings and demonstrated capabilities provide a solid foundation for further research and development.