LRP4RAG: Detecting Hallucinations in Retrieval-Augmented Generation via Layer-wise Relevance Propagation

The paper "LRP4RAG: Detecting Hallucinations in Retrieval-Augmented Generation via Layer-wise Relevance Propagation," by Haichuan Hu, Yuhan Sun, and Quanjun Zhang, introduces an approach for detecting hallucinations in Retrieval-Augmented Generation (RAG) systems using Layer-wise Relevance Propagation (LRP). Hallucinations, in which a large language model (LLM) outputs plausible but incorrect information, pose significant risks when LLMs are deployed in real-world applications. RAG reduces the problem but does not eliminate it. The paper investigates whether LRP can be used to detect hallucinations in RAG systems and reports empirical evidence that it can.

Introduction

LLMs have significantly advanced natural language processing (NLP), but they are prone to hallucination, which undermines their trustworthiness. Retrieval-Augmented Generation (RAG) was proposed to mitigate hallucinations by supplying the LLM with relevant external knowledge at generation time. However, recent studies indicate that RAG systems still hallucinate. This motivates methods that can systematically analyze and detect hallucinations in RAG output.

Methodology

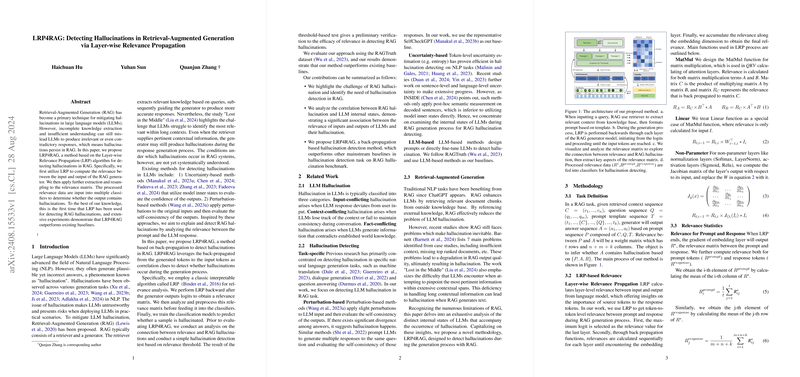

The authors propose LRP4RAG, which utilizes the LRP algorithm to detect hallucinations in RAG. LRP4RAG involves several key steps:

- Relevance Computation: LRP is employed to compute the relevance matrix between the input and output tokens of the RAG generator.

- Relevance Processing: Further extraction and resampling are applied to the relevance matrix to ensure it is suitable for classification tasks.

- Classification: The processed relevance data is fed into multiple classifiers to determine the presence of hallucinations in the generated output.
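The processing and classification steps can be sketched as follows. This is a minimal illustration, not the authors' implementation: the summary does not specify how relevance is extracted or resampled, nor which classifiers are used, so the averaging over output tokens, the linear interpolation to a fixed length, the logistic-regression stand-in, and the names `resample_relevance`, `fit_logistic`, `predict`, and `toy_matrix` are all assumptions. The relevance matrices are synthetic toy data, with label 1 standing for "hallucinated".

```python
import numpy as np

def resample_relevance(relevance, target_len=16):
    """Map an (n_output_tokens, n_input_tokens) relevance matrix to a fixed-length vector."""
    # Assumption: summarise each input token by its mean relevance over generated tokens.
    per_input = relevance.mean(axis=0)
    # Assumption: linear interpolation brings prompts of any length to target_len features.
    src = np.linspace(0.0, 1.0, num=per_input.shape[0])
    dst = np.linspace(0.0, 1.0, num=target_len)
    return np.interp(dst, src, per_input)

def fit_logistic(X, y, lr=0.1, steps=1000):
    """Plain gradient-descent logistic regression (a stand-in classifier)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(hallucinated)
        grad = p - y                            # gradient of the log-loss w.r.t. the logit
        w -= lr * (X.T @ grad) / len(y)
        b -= lr * grad.mean()
    return w, b

def predict(X, w, b):
    """Return 1 (hallucinated) where the logit is positive, else 0."""
    return (X @ w + b > 0).astype(int)

# Synthetic toy data: variable-sized matrices whose entries cluster around `level`.
rng = np.random.default_rng(0)

def toy_matrix(level):
    n_out, n_in = rng.integers(5, 20), rng.integers(30, 80)
    return rng.normal(loc=level, scale=0.05, size=(n_out, n_in))

matrices = [toy_matrix(1.0) for _ in range(20)] + [toy_matrix(0.2) for _ in range(20)]
labels = np.array([0] * 20 + [1] * 20)

X = np.stack([resample_relevance(m) for m in matrices])  # shape: (40, 16)
w, b = fit_logistic(X, labels)
preds = predict(X, w, b)
```

Resampling to a fixed length is what lets prompts and responses of different sizes share one feature space, so any standard classifier can be swapped in for the logistic regression used here.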

The method is distinctive as it provides an interpretable mechanism to back-propagate relevance scores from generated tokens to input tokens, effectively capturing the correlation between inputs and outputs.
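To make the back-propagation of relevance concrete, the sketch below applies the widely used LRP epsilon rule to a tiny bias-free two-layer ReLU network. It is only an illustration of the general technique under simplifying assumptions: the network, its random weights, and the helper `lrp_epsilon_linear` are hypothetical, and the sketch does not attempt to handle the attention and normalisation layers of an actual LLM generator.

```python
import numpy as np

def lrp_epsilon_linear(a, W, R_out, eps=1e-6):
    """Redistribute relevance from a linear layer's outputs to its inputs.

    a:     (n_in,) input activations of the layer
    W:     (n_in, n_out) weight matrix (no bias, to keep relevance conserved)
    R_out: (n_out,) relevance assigned to the layer's outputs
    """
    z = a @ W                                   # pre-activations of the layer
    z = z + eps * np.where(z >= 0, 1.0, -1.0)   # stabiliser avoids division by zero
    s = R_out / z                               # relevance per unit of pre-activation
    return a * (W @ s)                          # each input's share of every output

rng = np.random.default_rng(0)
x = rng.normal(size=4)           # toy "input token" features
W1 = rng.normal(size=(4, 5))
W2 = rng.normal(size=(5, 3))

# Forward pass
h = np.maximum(x @ W1, 0.0)      # hidden ReLU activations
logits = h @ W2

# Start relevance at the winning output and propagate it back layer by layer.
target = int(np.argmax(logits))
R_logits = np.zeros_like(logits)
R_logits[target] = logits[target]

R_hidden = lrp_epsilon_linear(h, W2, R_logits)
R_input = lrp_epsilon_linear(x, W1, R_hidden)   # one relevance score per input feature
```

In this bias-free setting the input relevances sum approximately to the chosen output score, which is the conservation property that makes the scores interpretable as a decomposition of the output. Repeating the backward pass once per generated token would yield one row of an input-output relevance matrix.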

Experimental Evaluation

The authors evaluate the proposed method on the RAGTruth dataset, which includes human-annotated samples of RAG-generated content. The evaluation uses responses generated by two models, Llama-2-7b-chat and Llama-2-13b-chat, and reports the classifiers' accuracy, precision, recall, and F1 score.
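For reference, the four reported metrics can be computed from binary predictions as in the sketch below. The function name `detection_metrics`, the convention that label 1 means "hallucinated", and the toy labels are assumptions made for illustration; they are not taken from the paper or from RAGTruth.

```python
def detection_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1, treating label 1 (hallucinated) as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # hallucinations caught
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # hallucinations missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # clean outputs passed

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Toy labels for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
metrics = detection_metrics(y_true, y_pred)
```

Precision measures how many flagged responses are truly hallucinated, while recall measures how many hallucinated responses are flagged; F1 is their harmonic mean.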

Key Findings:

- Relevance Distribution: The paper reveals significant differences in relevance distribution between hallucinated and non-hallucinated samples. The relevance scores for normal samples are generally higher, indicating a stronger correlation between the input prompts and outputs.

- Classifier Performance: The LRP-based methods demonstrate superior performance compared to baseline methods across all metrics. For Llama-2-7b-chat, the method achieves an accuracy of 69.16% and an F1 score of 70.64%, showing a substantial improvement over baseline approaches.

- Scalability: While larger models like Llama-2-13b-chat present greater challenges in hallucination detection, the LRP-based methods still maintain robust performance, with significant accuracy and precision advantages over baseline methods.

Implications

The findings of this paper have both practical and theoretical implications. Practically, LRP4RAG offers a reliable approach to detect hallucinations, enhancing the trustworthiness of RAG systems. Theoretically, it provides insights into the internal states of LLMs and their relevance distributions, contributing to the broader understanding of hallucination phenomena in LLMs.

Future Directions

Future research could extend the evaluation of LRP4RAG across a wider variety of models and datasets to further validate its generalizability. Additionally, exploring the integration of LRP with other interpretability tools could enhance the robustness of hallucination detection mechanisms.

In conclusion, LRP4RAG provides an interpretable and effective approach for detecting hallucinations in retrieval-augmented generation systems. The analysis and empirical results presented in the paper indicate its potential for practical use and mark a step forward in detecting hallucinations in RAG-based LLM applications.