FocusLLM: Scaling LLM's Context by Parallel Decoding - An Evaluation

The paper "FocusLLM: Scaling LLM's Context by Parallel Decoding" by Zhenyu Li et al. addresses a pressing challenge for LLMs: extending the context length. It proposes a framework, FocusLLM, that improves an LLM's ability to manage and use information spread across very long contexts efficiently.

The ability to handle long context sequences is crucial for numerous applications, including document summarization, question answering, and generating coherent long-form content. Traditional transformer architectures struggle here because their computational cost grows quadratically with sequence length and because they extrapolate poorly to sequences longer than those seen in training. The scarcity of high-quality long-text datasets compounds the difficulty.

Methodology

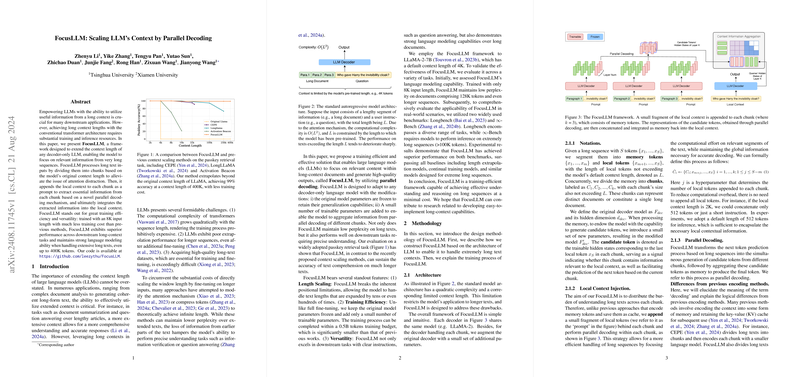

FocusLLM introduces an innovative approach to extend the context length of decoder-only LLMs. It processes long inputs by segmenting them into chunks based on the original model's context length. To mitigate attention distraction issues, local context is appended to each chunk as a prompt, facilitating the extraction of essential information using a parallel decoding mechanism. The information from these chunks is subsequently integrated into the local context.
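The chunking step described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function names, the choice to treat the final `local_size` tokens as the local context, and the use of plain integer token IDs are all assumptions made for the example.

```python
from typing import List, Tuple


def split_into_chunks(tokens: List[int], chunk_size: int) -> List[List[int]]:
    """Split a token sequence into consecutive chunks of at most chunk_size tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]


def build_chunk_inputs(
    tokens: List[int], chunk_size: int, local_size: int
) -> Tuple[List[List[int]], List[int]]:
    """Return (chunk_inputs, local_context).

    Assumption for this sketch: the last local_size tokens form the local
    context, and everything before them is the long prefix to be chunked.
    Each chunk input is the chunk followed by the local context, mirroring
    the "local context appended to each chunk as a prompt" idea.
    """
    if not 0 < local_size <= len(tokens):
        raise ValueError("local_size must be between 1 and len(tokens)")
    prefix, local = tokens[:-local_size], tokens[-local_size:]
    chunks = split_into_chunks(prefix, chunk_size)
    return [chunk + local for chunk in chunks], local


# Each element of chunk_inputs could be decoded independently (in parallel),
# since no chunk input depends on any other chunk.
chunk_inputs, local_context = build_chunk_inputs(list(range(10)), chunk_size=3, local_size=2)
```

Because the chunk inputs are independent of one another, they can be batched together, which is what makes a parallel decoding pass over all chunks possible. How the per-chunk outputs are merged back into the local context is specific to FocusLLM and is not modeled here.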

The architecture of FocusLLM is constructed to maximize training efficiency and versatility. Key features include:

- Length Scaling: By breaking positional limitations, FocusLLM allows models to handle texts many times longer than their original context window.

- Training Efficiency: The original model parameters are frozen to retain generalization capabilities, and only a small number of additional trainable parameters are introduced. Training requires significantly fewer computational resources than previous methods.

- Versatility: FocusLLM excels in diverse downstream tasks, including question answering and language modeling over long documents.

Experimental Evaluation

Language Modeling

The evaluation spans multiple datasets, including PG19, Proof-Pile, and CodeParrot, with text lengths ranging from 4K to 128K tokens. FocusLLM maintains low perplexity on much longer sequences as well, up to 400K tokens. It is compared against strong baselines such as Positional Interpolation (PI), NTK-aware Scaled RoPE, StreamingLLM, AutoCompressor-6K, YaRN-128K, LongChat-32K, LongAlpaca-16K, LongLlama, and Activation Beacon. The results indicate that FocusLLM matches and often surpasses these models, particularly in extremely long contexts, while maintaining a much lower computational and memory footprint.
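For readers unfamiliar with the metric, perplexity is the exponential of the average negative log-likelihood per token, so lower is better. The helper below is a generic sketch of that definition, assuming natural-log token probabilities are already available; the function name is hypothetical and the code is not taken from the paper's evaluation scripts.

```python
import math
from typing import Sequence


def perplexity(token_log_probs: Sequence[float]) -> float:
    """Compute perplexity from per-token natural-log probabilities.

    perplexity = exp(-(1/N) * sum(log p(token_i | context)))
    """
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(avg_nll)


# A model that assigns probability 0.25 to every token is as uncertain as a
# uniform choice among four options.
uniform_four = perplexity([math.log(0.25)] * 8)
```

A long-context method "maintains low perplexity" when this value stays roughly flat as the evaluated sequence length grows, rather than rising sharply past the model's original context window.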

Downstream Tasks

The practical efficacy of FocusLLM was further validated on two comprehensive benchmarks: LongBench and ∞-Bench. These benchmarks cover tasks such as question answering, summarization, few-shot learning, and code completion, with average sequence lengths reaching up to 214K tokens in ∞-Bench.

FocusLLM consistently outperformed previous context scaling methods across diverse metrics, in both language modeling and task-specific evaluations. This highlights its capability for precise understanding and reasoning over long text contexts.

Efficiency Considerations

A crucial aspect of FocusLLM's design is its efficiency in terms of memory usage and inference time:

- Memory Footprint: When chunks are processed sequentially rather than in parallel, FocusLLM's memory usage grows linearly with sequence length, significantly reducing the overhead compared to traditional methods.

- Inference Time: The parallel processing method in FocusLLM, although marginally slower than standard models, offers substantial improvements in inference time over other long-context methods.
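A back-of-envelope count helps show why chunking sidesteps the quadratic cost of full attention. The sketch below counts query-key pairs only; it ignores causal masking, the cost of merging chunk outputs, and everything outside attention, and the function names and sizes are illustrative assumptions rather than figures from the paper.

```python
import math


def full_attention_pairs(n: int) -> int:
    """Query-key pairs when every token attends over the whole sequence."""
    return n * n


def chunked_attention_pairs(n: int, chunk_size: int, local_size: int) -> int:
    """Upper bound on query-key pairs when the prefix is split into chunks.

    Assumption for this sketch: the last local_size tokens are the local
    context, the remaining n - local_size tokens are split into chunks of
    chunk_size, and each chunk attends only within (chunk + local context).
    """
    prefix = n - local_size
    num_chunks = math.ceil(prefix / chunk_size)
    return num_chunks * (chunk_size + local_size) ** 2


full = full_attention_pairs(10_000)                    # 100,000,000 pairs
chunked = chunked_attention_pairs(10_000, 1_000, 1_000)  # 9 chunks * 2,000^2
```

With a fixed chunk size, the chunked count grows with the number of chunks, which is linear in sequence length, whereas the full-attention count grows with the square of the length.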

Extensions and Future Work

FocusLLM sets a noteworthy benchmark for future research in long-context LLMs. Several avenues for further exploration include:

- Dynamic Chunk Sizing: Investigating adaptive chunk sizes based on the underlying data structure.

- Synthetic Data: Leveraging synthetic datasets to enhance the model's training efficiency and performance on specific tasks.

- Extended Context Applications: Applying FocusLLM to domains requiring even longer context handling, such as multi-document summarization and complex narrative generation.

Conclusion

FocusLLM presents a substantial advancement in extending the context length of LLMs at low computational cost. By introducing a parallel decoding mechanism, it addresses key limitations of traditional transformer architectures. Its ability to maintain performance across very long texts, coupled with efficient training and inference, makes FocusLLM a significant contribution to the study and application of long-context LLMs.

The insights and methodologies introduced here are likely to stimulate further work on the design and use of LLMs that can manage long and complex textual data.