A Survey on Benchmarks of Multimodal LLMs

Overview

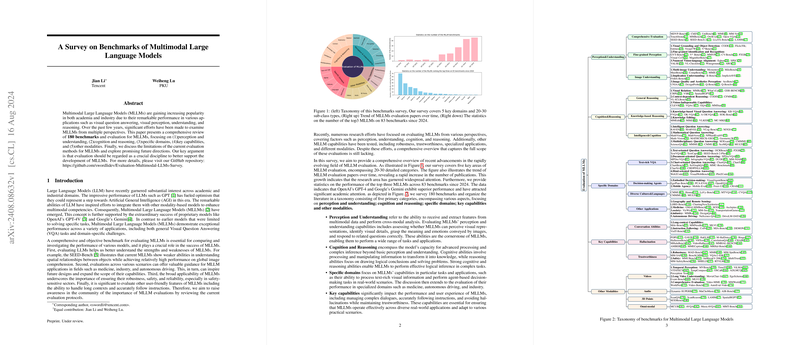

This paper surveys the benchmarks used to evaluate Multimodal LLMs (MLLMs). MLLMs integrate LLMs with other modalities such as images, videos, audio, and 3D point clouds, and their strong performance on tasks like Visual Question Answering (VQA), cognitive reasoning, and domain-specific applications makes robust evaluation necessary. The survey groups these benchmarks into five primary areas: Perception and Understanding, Cognition and Reasoning, Specific Domains, Key Capabilities, and Other Modalities.

Key Categories of Evaluation

Perception and Understanding

Evaluating MLLMs' ability to perceive and analyze multimodal data is foundational. This category includes benchmarks that test MLLMs on object detection, visual grounding, and fine-grained perception. For instance:

- Visual Grounding and Object Detection: Benchmarks such as Flickr30k Entities and Visual7W assess the model's capacity to localize and identify objects within scenes.

- Fine-Grained Perception: Benchmarks like GVT-Bench and CV-Bench evaluate detailed visual features including depth and spatial awareness.

- Nuanced Vision-Language Alignment: Benchmarks such as VALSE and ARO test the model's ability to align complex visual and linguistic information.

Cognition and Reasoning

These benchmarks focus on MLLMs' capacity for high-level processing and problem-solving beyond basic perception:

- General Reasoning: Benchmarks like VSR and What’s Up assess visual spatial reasoning and understanding of object relations.

- Knowledge-Based Reasoning: OK-VQA and A-OKVQA test the models’ ability to answer questions requiring external knowledge and commonsense reasoning.

- Intelligence and Cognition: Benchmarks such as M3Exam and MARVEL explore multidimensional abstraction and advanced cognitive tasks including mathematical reasoning.

Specific Domains

These benchmarks target specific application areas to evaluate how well MLLMs adapt to and perform in them:

- Text-Rich VQA: Benchmarks including TextVQA and DUDE measure models' performance in scenarios that heavily integrate text and visual information.

- Decision-Making Agents: Embodied decision-making benchmarks like OpenEQA and PCA-Eval test the MLLMs’ ability to interact with and make decisions in dynamic environments.

- Diverse Cultures and Languages: Henna and MTVQA assess models' performance across different languages and culturally diverse contexts.

Key Capabilities

This category evaluates critical operational capabilities of MLLMs:

- Conversation Abilities: Benchmarks such as MileBench and CoIN evaluate long-context handling and adherence to instructions across multi-turn dialogue.

- Hallucination: Benchmarks like POPE and M-HalDetect assess the models' propensity to hallucinate, i.e., generate information not grounded in the input data.

- Trustworthiness: Robustness and safety benchmarks, including BenchLMM and MM-SafetyBench, evaluate the resilience of MLLMs against misleading inputs and their ability to handle sensitive content safely.
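To make the hallucination bullet above concrete, here is a minimal sketch of how a yes/no object-probing evaluation could be scored. It assumes a POPE-style setup in which the model is asked whether an object is present in an image and its answer is compared with the ground truth. The `ProbeResult` type, the `score_probes` function, and the choice of reported metrics are illustrative assumptions, not the official implementation of any benchmark named here.

```python
from dataclasses import dataclass


@dataclass
class ProbeResult:
    """One yes/no probe (hypothetical record layout)."""
    object_present: bool   # ground truth: is the object really in the image?
    model_says_yes: bool   # did the model answer "yes"?


def score_probes(results):
    """Compute binary-classification metrics over yes/no probes.

    A false positive (model says "yes" for an absent object) is the
    hallucination case this kind of evaluation is meant to expose.
    """
    if not results:
        raise ValueError("need at least one probe result")

    tp = sum(r.object_present and r.model_says_yes for r in results)
    fp = sum((not r.object_present) and r.model_says_yes for r in results)
    fn = sum(r.object_present and (not r.model_says_yes) for r in results)
    tn = sum((not r.object_present) and (not r.model_says_yes) for r in results)

    total = len(results)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)

    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        # A "yes" ratio far above the true positive rate suggests a bias
        # toward affirming objects that are not there.
        "yes_ratio": (tp + fp) / total,
    }


if __name__ == "__main__":
    demo = [
        ProbeResult(object_present=True, model_says_yes=True),
        ProbeResult(object_present=True, model_says_yes=True),
        ProbeResult(object_present=False, model_says_yes=True),   # hallucination
        ProbeResult(object_present=False, model_says_yes=False),
    ]
    print(score_probes(demo))
```

Reporting the "yes" ratio alongside accuracy is a design choice in this sketch: a model that answers "yes" to everything can still reach high recall, so the ratio helps separate real grounding from an affirmative bias.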

Other Modalities

Evaluations in this section focus on models' proficiency with diverse types of data beyond simple text and images:

- Video: MVBench and Video-MME test temporal dynamics understanding and coherent video content interpretation.

- Audio: AIR-Bench and Dynamic-SUPERB evaluate the models' capability to process and understand audio signals.

- 3D Scenes: ScanQA and M3DBench test the models' understanding of spatial relationships and depth in 3D environments.

- Omnimodal: MMT-Bench assesses models' performance on tasks involving multiple data modalities simultaneously.
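The five-area taxonomy described in the sections above can be captured as a nested mapping, which is handy when tagging evaluation results by category. The sketch below lists only the example benchmarks named in this summary, not the survey's full catalogue. The `TAXONOMY` name and the `find_benchmark` helper are hypothetical.

```python
# Category -> subcategory -> example benchmarks, as named in this summary.
TAXONOMY = {
    "Perception and Understanding": {
        "Visual Grounding and Object Detection": ["Flickr30k Entities", "Visual7W"],
        "Fine-Grained Perception": ["GVT-Bench", "CV-Bench"],
        "Nuanced Vision-Language Alignment": ["VALSE", "ARO"],
    },
    "Cognition and Reasoning": {
        "General Reasoning": ["VSR", "What's Up"],
        "Knowledge-Based Reasoning": ["OK-VQA", "A-OKVQA"],
        "Intelligence and Cognition": ["M3Exam", "MARVEL"],
    },
    "Specific Domains": {
        "Text-Rich VQA": ["TextVQA", "DUDE"],
        "Decision-Making Agents": ["OpenEQA", "PCA-Eval"],
        "Diverse Cultures and Languages": ["Henna", "MTVQA"],
    },
    "Key Capabilities": {
        "Conversation Abilities": ["MileBench", "CoIN"],
        "Hallucination": ["POPE", "M-HalDetect"],
        "Trustworthiness": ["BenchLMM", "MM-SafetyBench"],
    },
    "Other Modalities": {
        "Video": ["MVBench", "Video-MME"],
        "Audio": ["AIR-Bench", "Dynamic-SUPERB"],
        "3D Scenes": ["ScanQA", "M3DBench"],
        "Omnimodal": ["MMT-Bench"],
    },
}


def find_benchmark(name):
    """Return (category, subcategory) for a benchmark name, or None.

    Matching is case-insensitive; the lookup covers only the examples above.
    """
    target = name.casefold()
    for category, subcategories in TAXONOMY.items():
        for subcategory, benchmarks in subcategories.items():
            if any(b.casefold() == target for b in benchmarks):
                return category, subcategory
    return None


if __name__ == "__main__":
    print(find_benchmark("POPE"))
    print(find_benchmark("scanqa"))
```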

Implications and Future Work

The structured evaluation framework outlined in this paper highlights the strengths and limitations of contemporary MLLMs. The range and rigor of the benchmarks discussed should help future research produce more robust and nuanced models, and in turn support progress toward capable, safe, and trustworthy AI systems that adapt to a variety of real-world applications.

Future research avenues may include the creation of more comprehensive, large-scale benchmarks that capture real-world complexities and cross-modal interactions more effectively. Addressing gaps in current evaluations, such as the handling of highly dynamic and long-context data, will also be critical.

Conclusion

This survey underscores the importance of comprehensive and systematic evaluation to the progress of Multimodal LLMs. By grouping existing benchmarks into clear functional categories, it gives an overview of the current landscape and a basis for future work. Continued development and refinement of these benchmarks will be important for ensuring the robustness, reliability, and applicability of MLLMs in varied real-world contexts.