Fine-tuning LLMs with Human-inspired Learning Strategies in Medical Question Answering

Large language models (LLMs) play a pivotal role in natural language processing tasks but demand substantial data and computational resources for training. This paper explores how effective human-inspired learning strategies, such as curriculum learning, are when fine-tuning LLMs for medical question answering. Human-inspired learning strategies mimic common human learning practices and aim to improve data efficiency by optimizing the order in which training data is presented. The researchers evaluated these strategies across multiple LLMs and datasets, analyzing their impact under several data labeling scenarios.

Methodology

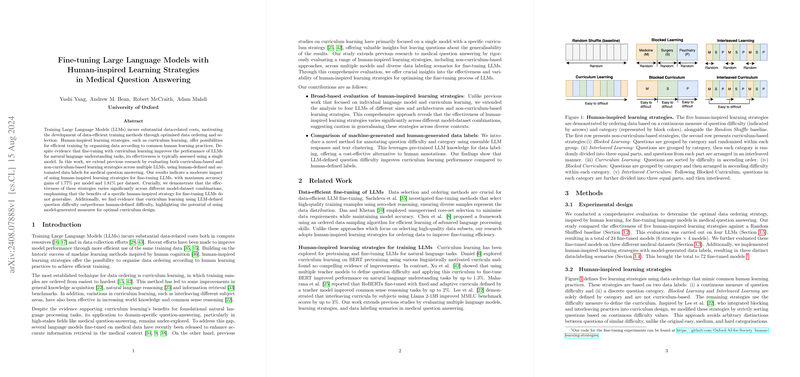

The experimental design compared five human-inspired learning strategies (Blocked Learning, Interleaved Learning, Curriculum Learning, Blocked Curriculum, and Interleaved Curriculum) against a Random Shuffled baseline. The researchers evaluated these strategies on four LLMs (TinyLlama 1.1B, Llama 2 7B, Llama 2 13B, and Mistral 7B) and three medical question answering datasets (LEK, MedMCQA, and MedQA). Each model was fine-tuned on the LEK dataset under three data labeling scenarios: human-defined difficulty, LLM-defined difficulty, and LLM-defined clustered categories.
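Because all of these strategies differ only in how the fine-tuning examples are ordered, they can be illustrated with a small ordering function. The following is a minimal sketch, not the authors' implementation. It assumes each example is a dict with hypothetical `category` and `difficulty` fields, that blocked strategies present one category at a time, that interleaved strategies cycle through categories in round-robin fashion, and that curriculum variants sort from easy to hard. The function and field names are illustrative only.

```python
import random
from collections import defaultdict
from itertools import zip_longest


def group_by_category(items):
    """Split examples into per-category lists, in sorted category order."""
    groups = defaultdict(list)
    for item in items:
        groups[item["category"]].append(item)
    return [groups[c] for c in sorted(groups)]


def round_robin(groups):
    """Take one example from each category in turn until all are used."""
    ordered = []
    for row in zip_longest(*groups):
        ordered.extend(x for x in row if x is not None)
    return ordered


def order_examples(items, strategy, seed=0):
    """Return the examples in the order implied by the chosen strategy."""
    rng = random.Random(seed)
    items = list(items)
    rng.shuffle(items)  # random order within groups and among difficulty ties
    if strategy == "random":
        return items
    if strategy == "curriculum":
        # Easy to hard, ignoring categories (sorted() is stable).
        return sorted(items, key=lambda x: x["difficulty"])
    groups = group_by_category(items)
    if strategy in ("blocked_curriculum", "interleaved_curriculum"):
        # Assumed definition: sort easy to hard inside each category.
        groups = [sorted(g, key=lambda x: x["difficulty"]) for g in groups]
    if strategy in ("blocked", "blocked_curriculum"):
        return [x for g in groups for x in g]
    if strategy in ("interleaved", "interleaved_curriculum"):
        return round_robin(groups)
    raise ValueError(f"unknown strategy: {strategy}")
```

Calling `order_examples(train_items, "interleaved_curriculum")` would then yield the sequence that is fed to the fine-tuning loop without further shuffling; the model, optimizer, and data stay the same across strategies, so any accuracy difference is attributable to ordering alone.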

Findings

Impact of Human-inspired Learning Strategies

Human-inspired learning strategies demonstrated modest improvements over the Random Shuffled baseline, with maximum accuracy gains of 1.77% per model and 1.81% per dataset. These gains, although moderate, are statistically significant at the 5% significance level. Notably, Interleaved Learning outperformed the other strategies for several models and datasets and achieved the highest average accuracy gain. However, the optimal learning strategy was not the same across all models and datasets, which underlines how variable the effectiveness of these strategies is.

Generalization Across Models and Datasets

The paper highlights that the benefits of a specific learning strategy for fine-tuning one LLM do not generalize to other models or datasets. For instance, Curriculum Learning was the best-performing strategy for the Llama 2 models but did not outperform the Random Shuffled baseline for TinyLlama 1.1B and Mistral 7B. Likewise, no single learning strategy consistently achieved the highest accuracy across all evaluated datasets. This variability calls for caution when generalizing results for human-inspired learning strategies to new models or datasets.
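The comparison described here amounts to computing, for each model, every strategy's accuracy gain over the baseline and checking whether the same strategy wins everywhere. A minimal sketch of that bookkeeping follows. It assumes accuracies are stored as a nested dict of percentages keyed by model and strategy, with the baseline stored under the hypothetical key `"random"`; the function names are illustrative and not taken from the paper.

```python
def gains_over_baseline(accuracy, baseline="random"):
    """accuracy: {model: {strategy: accuracy in percent}}.

    Returns {model: {strategy: gain in percentage points}} for every
    strategy other than the baseline.
    """
    gains = {}
    for model, scores in accuracy.items():
        base = scores[baseline]
        gains[model] = {
            strategy: round(acc - base, 2)
            for strategy, acc in scores.items()
            if strategy != baseline
        }
    return gains


def best_strategy_per_model(accuracy, baseline="random"):
    """Pick the strategy with the largest gain for each model.

    If no strategy beats the baseline, the baseline itself is reported.
    """
    best = {}
    for model, model_gains in gains_over_baseline(accuracy, baseline).items():
        strategy, gain = max(model_gains.items(), key=lambda kv: kv[1])
        best[model] = (strategy, gain) if gain > 0 else (baseline, 0.0)
    return best
```

If the values returned by `best_strategy_per_model` name different strategies for different models, the result does not generalize in the sense described above. The same functions apply to the per-dataset comparison by keying the outer dict on dataset names instead of model names.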

LLM-defined Difficulty in Curriculum Design

Switching from human-defined difficulty to LLM-defined difficulty improved the curriculum-based learning strategies. Curriculum Learning, Blocked Curriculum, and Interleaved Curriculum all showed significant accuracy gains with LLM-defined difficulty measures. These findings support the use of model-generated difficulty metrics as a cost-effective and viable alternative to human annotations for curriculum design when fine-tuning LLMs.
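This summary does not state how the LLM-defined difficulty score is computed. One plausible formulation, shown here purely as an assumption, scores each question by the fraction of a pool of LLMs that answer it incorrectly, so that 0.0 means every model got it right and 1.0 means every model got it wrong. The data layout and the name `llm_defined_difficulty` are hypothetical.

```python
def llm_defined_difficulty(model_answers, answer_key):
    """Score each question by the share of models that answer it wrongly.

    model_answers: {model_name: {question_id: predicted_option}}
    answer_key:    {question_id: correct_option}
    A missing prediction is counted as an incorrect answer (an assumption).
    """
    n_models = len(model_answers)
    if n_models == 0:
        raise ValueError("at least one model is required")
    difficulty = {}
    for qid, correct in answer_key.items():
        wrong = sum(
            1 for answers in model_answers.values()
            if answers.get(qid) != correct
        )
        difficulty[qid] = wrong / n_models
    return difficulty
```

The resulting scores can serve directly as the sort key for a curriculum ordering, which is what makes this kind of measure cheap compared with collecting human difficulty annotations: it only requires running inference with existing models on the training questions.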

Implications and Future Directions

The research presents valuable insights into optimizing the fine-tuning process of LLMs through human-inspired learning strategies. Practically, leveraging model-generated difficulty measures can reduce the dependency on expensive human annotations, making the fine-tuning process more efficient and scalable. Theoretically, this paper reaffirms the variability in the effectiveness of learning strategies, advocating for context-specific evaluation and application.

Future work could expand the medical curriculum to cover a broader spectrum of questions, assess alternative model-generated difficulty measures, and fine-tune larger or more specialized LLMs. Additionally, investigating how the effects of these strategies evolve across different stages of training could offer a deeper understanding of the mechanisms underlying human-inspired learning.

Conclusion

This paper emphasizes the nuanced impacts of human-inspired learning strategies on fine-tuning LLMs, particularly in medical question answering. While these strategies can yield accuracy improvements, their effectiveness is context-dependent, necessitating a tailored approach for different models and datasets. The potential of LLM-defined difficulty measures to enhance curriculum design is noteworthy, indicating a promising direction for future research and practical applications in AI.
