Affective Computing in the Era of LLMs: A Survey from the NLP Perspective

Introduction

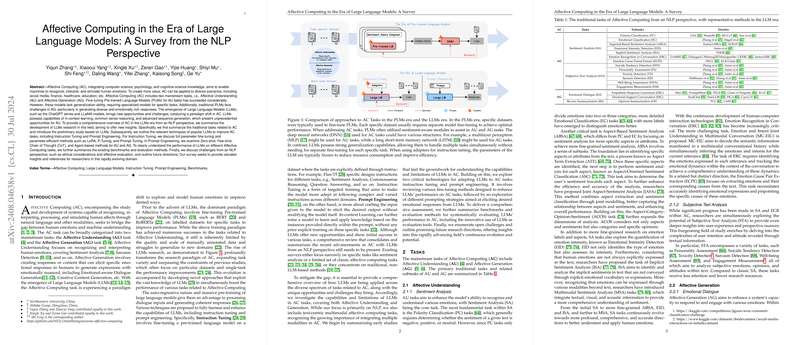

The integration of computer science with psychology has long been the cornerstone of Affective Computing (AC), a field that aims to bridge the gap between human emotions and machine understanding. Traditionally, AC comprises two families of tasks: Affective Understanding (AU) and Affective Generation (AG). While Pre-trained Language Models (PLMs) such as BERT and RoBERTa have driven significant progress, these models generalize poorly across tasks and struggle to generate emotionally rich content. The advent of Large Language Models (LLMs) such as the ChatGPT series and the LLaMA models has substantially shifted paradigms in AC, introducing both new opportunities and new challenges. This paper surveys this evolution, focusing on two key technologies, Instruction Tuning and Prompt Engineering, and discusses their implications and potential directions for future research.

Tasks in Affective Computing

AC tasks are broadly categorized into AU and AG, each consisting of various sub-tasks:

- Affective Understanding (AU)

  - Sentiment Analysis (SA): Tasks such as Polarity Classification (PC), Emotional Classification (EC), Aspect-Based Sentiment Analysis (ABSA), and Emotion Recognition in Conversation (ERC) evaluate a model's ability to comprehend nuanced human emotions from textual data.

  - Subjective Text Analysis (STA): This includes tasks like Suicide Tendency Detection, Personality Assessment, Toxicity Detection, and Sarcasm Detection, which focus on analyzing subjective emotions and opinions in text.

- Affective Generation (AG)

  - Emotional Dialogue (ED): Tasks like Empathetic Response Generation (ERG) and Emotional Support Conversation (ESC) involve creating responses that reflect empathy and provide emotional support.

  - Review Summarization: Particularly Opinion Summarization (OS), which involves condensing multiple opinions on a topic into a coherent summary.
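
The taxonomy above can be written down as a nested mapping, which is sometimes convenient when routing datasets or prompts by task. This is only a sketch: the task names come from the list above, while the dictionary layout and the helper `find_category` are illustrative assumptions.

```python
# Task names follow the list above; the nesting layout is an illustrative choice.
AC_TASKS = {
    "Affective Understanding (AU)": {
        "Sentiment Analysis (SA)": [
            "Polarity Classification (PC)",
            "Emotional Classification (EC)",
            "Aspect-Based Sentiment Analysis (ABSA)",
            "Emotion Recognition in Conversation (ERC)",
        ],
        "Subjective Text Analysis (STA)": [
            "Suicide Tendency Detection",
            "Personality Assessment",
            "Toxicity Detection",
            "Sarcasm Detection",
        ],
    },
    "Affective Generation (AG)": {
        "Emotional Dialogue (ED)": [
            "Empathetic Response Generation (ERG)",
            "Emotional Support Conversation (ESC)",
        ],
        "Review Summarization": ["Opinion Summarization (OS)"],
    },
}


def find_category(task_name):
    """Return (top-level category, sub-category) for a task, or None if absent."""
    for category, groups in AC_TASKS.items():
        for group, tasks in groups.items():
            if task_name in tasks:
                return category, group
    return None
```

For example, `find_category("Sarcasm Detection")` resolves to the AU category and the STA group.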

Preliminary Studies with LLMs

LLMs such as GPT-3.5-turbo and the LLaMA models have shown notable promise across various AU and AG tasks in zero-shot and few-shot settings. Although LLMs generalize well, initial studies indicate that on complex tasks they still lag behind PLMs fine-tuned on task-specific data. Their effectiveness varies widely across AU tasks, with the main difficulties arising in implicit sentiment analysis and in generating contextually appropriate responses. Moreover, LLM performance is highly sensitive to prompt design and to the fine-tuning approach applied.

Instruction Tuning

Instruction Tuning, often carried out with parameter-efficient fine-tuning techniques such as LoRA, Prefix Tuning, and P-Tuning, has shown potential for improving LLM performance on specific tasks while training only a small number of parameters. Key points include:

- Polarity and Emotional Classification: Instruction Tuning has been used to improve the accuracy and contextual understanding of models like T5 and ChatGLM.

- Aspect-Based Sentiment Analysis (ABSA): Approaches like InstructABSA and SCRAP show that multi-task instruction tuning improves performance on fine-grained sentiment tasks.

- Emotion Recognition in Conversation (ERC): CKERC and DialogueLLM show improved contextual understanding through methods that inject common-sense reasoning into model training.
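
To make the idea concrete, the sketch below shows how a single polarity-classification sample might be rendered as an instruction-tuning example. The `### Instruction / ### Input / ### Response` layout, the function name `format_instruction_example`, and the instruction wording are all assumptions chosen for illustration; they are not the templates used by InstructABSA, SCRAP, CKERC, or DialogueLLM.

```python
def format_instruction_example(instruction, text, label=None):
    """Render one sample in a simple instruction/input/response layout.

    With a label, the result is a training example (prompt plus target).
    Without one, it is the prompt a tuned model would be asked to complete.
    The section markers are a hypothetical template, not a standard.
    """
    prompt = (
        f"### Instruction:\n{instruction}\n\n"
        f"### Input:\n{text}\n\n"
        f"### Response:\n"
    )
    return prompt if label is None else prompt + label


# Hypothetical instruction for Polarity Classification (PC).
PC_INSTRUCTION = (
    "Classify the sentiment polarity of the input as positive, negative, or neutral."
)

train_example = format_instruction_example(
    PC_INSTRUCTION, "The battery dies within an hour.", "negative"
)
inference_prompt = format_instruction_example(
    PC_INSTRUCTION, "The screen is gorgeous."
)
```

Keeping the training and inference prompts identical up to the response marker is the design point here: the model is tuned to continue exactly the text it will later be given.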

Despite these advances, both full and parameter-efficient fine-tuning still require significant computational resources, and balancing model efficiency against performance remains an open research challenge.
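
A rough sense of the efficiency trade-off can be had from LoRA's parameter arithmetic. LoRA keeps a pretrained weight matrix W frozen and learns a low-rank update, so the effective weight is W + (alpha / r) * B A, with B of shape d_out x r and A of shape r x d_in. The sketch below is plain Python on nested lists for illustration only; the function names and the example dimensions (4096, rank 8) are assumptions, not figures from the survey.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Trainable parameters in one LoRA adapter: B (d_out x r) plus A (r x d_in)."""
    return rank * (d_out + d_in)


def full_params(d_out, d_in):
    """Parameters updated when the whole weight matrix is fine-tuned."""
    return d_out * d_in


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_weight(w, b, a, alpha):
    """Effective weight W + (alpha / r) * B @ A, where r is the number of rows of A."""
    scale = alpha / len(a)
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Illustrative sizes: one 4096 x 4096 projection adapted with rank 8.
full = full_params(4096, 4096)               # 16,777,216
lora = lora_trainable_params(4096, 4096, 8)  # 65,536
fraction = lora / full                       # 1/256, about 0.39%
```

Under these assumed sizes the adapter trains about 0.39% of the parameters of the matrix it modifies. The frozen weights must still be held in memory and run in the forward pass, which is one reason parameter-efficient methods remain resource-intensive.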

Prompt Engineering

Prompt Engineering techniques, such as Zero-shot Prompts, Few-shot Prompts, Chain-of-Thought (CoT) Prompts, and Agent-based methods, are critical for optimizing LLM performance without extensive model retraining. Highlights include:

- Zero-shot and Few-shot: Used to generate high-quality responses in emotionally nuanced tasks with no labeled examples, or only a handful, supplied in the prompt.

- Chain-of-Thought (CoT): Enhances reasoning by breaking a complex task into intermediate steps, as THOR does for Implicit Sentiment Analysis.

- Agent-based Methods: Leverage interaction among multiple LLMs, exemplified by the Cue-CoT and PANAS frameworks, to tackle intricate tasks collaboratively and improve outputs through a dialogue-based approach.
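
The first two prompting styles above can be sketched in a few lines. `build_prompt` produces a zero-shot prompt when no demonstrations are given and a few-shot prompt otherwise. `stepwise_sentiment` illustrates the chain-of-thought idea by decomposing implicit sentiment analysis into three chained questions (aspect, then opinion, then polarity). The prompt wording, the function names, and the `llm` callable are all assumptions made for illustration; they are not the templates of THOR or any other system named above.

```python
def build_prompt(text, labels, examples=()):
    """Zero-shot prompt when `examples` is empty, few-shot prompt otherwise.

    `examples` is a sequence of (text, label) demonstration pairs.
    """
    parts = [f"Classify the emotion of the text as one of: {', '.join(labels)}."]
    for ex_text, ex_label in examples:
        parts.append(f"Text: {ex_text}\nEmotion: {ex_label}")
    parts.append(f"Text: {text}\nEmotion:")
    return "\n\n".join(parts)


def stepwise_sentiment(text, target, llm):
    """Chain three prompts so each step conditions on the previous answer.

    `llm` is any callable mapping a prompt string to a reply string,
    for example a thin wrapper around a chat-completion client.
    """
    aspect = llm(
        f'Given the sentence "{text}", which aspect of {target} is being discussed?'
    )
    opinion = llm(
        f'Given the sentence "{text}" and the aspect "{aspect}", '
        f"what opinion about {target} is implied?"
    )
    polarity = llm(
        f'Given the sentence "{text}" and the opinion "{opinion}", '
        f"is the sentiment toward {target} positive, negative, or neutral?"
    )
    return {"aspect": aspect, "opinion": opinion, "polarity": polarity}
```

Passing the model as a plain callable keeps the sketch independent of any particular API and makes the chain easy to test with a stub that returns canned replies.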

Benchmarks and Evaluation

Comprehensive benchmarks and evaluation metrics are essential for assessing LLM performance in AC tasks. Benchmarks like SOUL, MERBench, and EIBENCH, alongside emerging evaluation techniques like Emotional Generation Score (EGS) and SECEU scores, provide a foundation for systematically comparing model performance across diverse datasets and tasks.
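
The specialized scores named above are not reproduced here. As a point of reference for how classification-style AU tasks are commonly scored, the sketch below computes macro-averaged F1, a generic metric that weights every label equally regardless of frequency. The function name and the toy labels are illustrative assumptions, and nothing here is specific to SOUL, MERBench, or EIBENCH.

```python
def macro_f1(gold, pred):
    """Macro-averaged F1 over every label that appears in gold or pred."""
    if not gold or len(gold) != len(pred):
        raise ValueError("gold and pred must be non-empty and of equal length")
    pairs = list(zip(gold, pred))
    scores = []
    for label in sorted(set(gold) | set(pred)):
        tp = sum(g == label and p == label for g, p in pairs)
        fp = sum(g != label and p == label for g, p in pairs)
        fn = sum(g == label and p != label for g, p in pairs)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); denom is positive for any label in the union.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels for illustration only.
gold = ["pos", "neg", "pos", "neu"]
pred = ["pos", "neg", "neg", "neu"]
score = macro_f1(gold, pred)  # (2/3 + 2/3 + 1) / 3 = 7/9
```

Macro averaging matters for affective datasets because label distributions are often skewed, and plain accuracy can hide poor performance on rare emotions.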

Discussion and Future Directions

Despite significant advancements, several challenges remain:

- Ethics: Ensuring user privacy and addressing potential biases in sentiment data remain critical.

- Effective Evaluation: Developing comprehensive evaluation standards that accurately reflect LLM capabilities remains a pressing need.

- Multilingual and Multicultural AC: AC research needs to expand to diverse linguistic and cultural backgrounds to improve its global applicability.

- Multimodal AC: Data from modalities beyond text should be leveraged to achieve holistic sentiment analysis.

- Vertical Domain LLMs: Developing specialized LLMs for domains requiring high emotional intelligence, like mental health counseling, remains underexplored.

Conclusion

LLMs have ushered in a new era for Affective Computing, overcoming many limitations of traditional PLMs through advanced instruction tuning and prompt engineering techniques. However, addressing the outlined challenges remains essential for further advancements. This paper serves as a roadmap for researchers, offering insights into leveraging LLM capabilities to achieve real-time, accurate, and contextually appropriate emotional understanding and generation.