Overview of Instruction Back-and-Forth Translation for Better Alignment

The paper "Better Alignment with Instruction Back-and-Forth Translation," authored by Thao Nguyen et al. from the University of Washington and Meta FAIR, proposes a method for generating high-quality synthetic data to improve the alignment of large language models (LLMs). The key contribution is a process termed "instruction back-and-forth translation," which draws on the diversity of web-sourced documents while keeping response quality high through a rewriting step.

Methodology

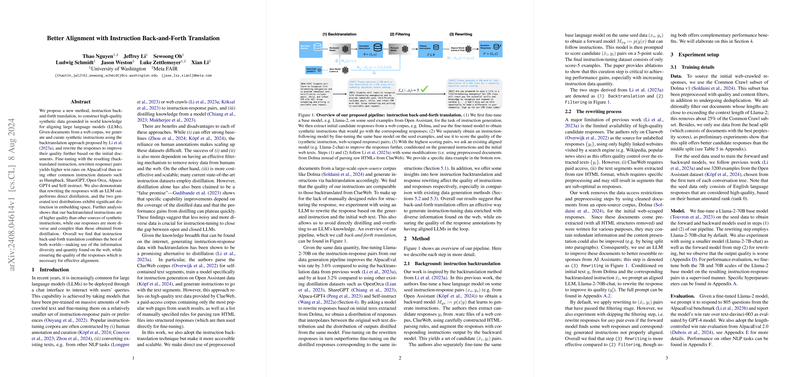

The proposed method involves three major steps to generate instruction-response pairs from web-based documents:

- Backtranslation: A backward model, fine-tuned on seed instruction-response pairs to predict an instruction from its response, generates synthetic instructions. Each document from a large-scale, open-source corpus (e.g., Dolma) is treated as a response, and the backward model produces a corresponding instruction for it.

- Filtering: A fine-tuned forward model scores each generated (instruction, response) pair on a 5-point scale. Only pairs that receive the top score of 5 are retained, so the surviving pairs are those the model judges to be well aligned.

- Rewriting: An aligned LLM rewrites each retained response to improve its textual quality, conditioned on both the generated instruction and the original web text. This step interpolates between the web-sourced content and the LLM's own knowledge. It preserves the diversity of the former while improving the relevance and quality of the final response.
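The three steps above can be sketched as a small Python pipeline. This is a minimal, hypothetical illustration, not the authors' code. Each model is abstracted as a text-in, text-out function. The prompt wording, the `Pair` type, and the helpers `backtranslate`, `parse_score`, `filter_pairs`, `rewrite`, and `back_and_forth` are all assumptions made for this example. In practice, the backward and forward models would be fine-tuned LLMs and the rewriter would be an aligned chat model.

```python
import re
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional

# Hypothetical interface: every model is a text-in, text-out function.
Generate = Callable[[str], str]


@dataclass(frozen=True)
class Pair:
    instruction: str
    response: str


def backtranslate(docs: Iterable[str], backward_model: Generate) -> List[Pair]:
    """Step 1: treat each web document as a response and predict its instruction."""
    pairs = []
    for doc in docs:
        # Hypothetical prompt wording; the paper's actual prompts may differ.
        prompt = f"Write an instruction that the following text answers.\n\nText: {doc}\n\nInstruction:"
        pairs.append(Pair(instruction=backward_model(prompt).strip(), response=doc))
    return pairs


def parse_score(text: str) -> Optional[int]:
    """Return the first standalone digit 1-5 in the scorer's output, or None if absent."""
    match = re.search(r"\b[1-5]\b", text)
    return int(match.group()) if match else None


def filter_pairs(pairs: Iterable[Pair], forward_model: Generate, keep: int = 5) -> List[Pair]:
    """Step 2: rate each pair on a 1-5 scale and keep only pairs rated exactly `keep`."""
    kept = []
    for p in pairs:
        prompt = (
            "Rate from 1 to 5 how well the response answers the instruction.\n\n"
            f"Instruction: {p.instruction}\nResponse: {p.response}\nScore:"
        )
        # Unparseable ratings yield None and are dropped.
        if parse_score(forward_model(prompt)) == keep:
            kept.append(p)
    return kept


def rewrite(pairs: Iterable[Pair], aligned_model: Generate) -> List[Pair]:
    """Step 3: rewrite each response, conditioned on the instruction and the original web text."""
    rewritten = []
    for p in pairs:
        prompt = (
            "Rewrite the web text into a high-quality answer to the instruction, "
            "keeping relevant information and dropping unrelated content.\n\n"
            f"Instruction: {p.instruction}\n\nWeb text: {p.response}\n\nAnswer:"
        )
        rewritten.append(Pair(instruction=p.instruction, response=aligned_model(prompt).strip()))
    return rewritten


def back_and_forth(docs: Iterable[str], backward_model: Generate,
                   forward_model: Generate, aligned_model: Generate) -> List[Pair]:
    """Run backtranslation, then filtering, then rewriting."""
    candidates = backtranslate(docs, backward_model)
    return rewrite(filter_pairs(candidates, forward_model), aligned_model)
```

Stub functions for the three models, such as lambdas that return fixed strings, are enough to exercise the control flow. Because filtering runs before rewriting, the comparatively expensive rewriting model is only called on pairs that survive the top-score cut.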

Experimental Results

The paper evaluates the proposed method using AlpacaEval and other NLP benchmarks, focusing on both the effectiveness of backtranslation and the quality improvements achieved through rewriting.

Performance Metrics:

- Win Rate: On AlpacaEval, LLMs fine-tuned with the proposed method achieve higher win rates than those fine-tuned on data generated purely by backtranslation or on other common instruction datasets such as OpenOrca and ShareGPT.

- Response Quality: Rewritten responses are better aligned and easier to follow than directly distilled responses. MAUVE scores further indicate that rewritten responses are distinct from both purely distilled and purely web-sourced responses.
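Win rate here is the share of pairwise comparisons in which an automatic judge prefers the fine-tuned model's output over a reference output. The minimal sketch below assumes each comparison has already been reduced to a "win", "tie", or "loss" label. It also assumes that ties count as half a win. That tie convention is an assumption, so check the evaluator's own definition before comparing numbers. The function name `win_rate` is hypothetical.

```python
from typing import Iterable, Literal

Judgment = Literal["win", "tie", "loss"]

# Assumed scoring convention: a tie is worth half a win.
POINTS = {"win": 1.0, "tie": 0.5, "loss": 0.0}


def win_rate(judgments: Iterable[Judgment]) -> float:
    """Percentage of pairwise comparisons won against the reference outputs."""
    labels = list(judgments)
    if not labels:
        raise ValueError("need at least one judgment")
    return 100.0 * sum(POINTS[label] for label in labels) / len(labels)
```

For example, `win_rate(["win", "win", "tie", "loss"])` returns `62.5`.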

Ablation Studies:

- Impact of Rewriting vs. Filtering: Rewriting responses significantly improves win rates, highlighting the importance of this step over merely filtering the backtranslated pairs.

- Model Scale: Tests at both 7B and 70B parameter scales demonstrate consistent performance gains, suggesting scalability and general applicability.

Qualitative Analysis:

- Instruction Quality: The instructions generated by backtranslation exhibit higher diversity and complexity compared to those generated by traditional synthetic methods. However, they do not entirely match the depth of manually crafted instructions.

- Diversity and Complexity: Drawing on web-sourced documents yields a broader variety of topics and a richer set of responses, which helps cover edge cases and long-tail information.
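One simple way to make "diversity" concrete is distinct-n: the fraction of n-grams across a set of instructions that are unique. This is a swapped-in illustrative metric, not necessarily the measure used in the paper. The whitespace tokenization and the function names `ngrams` and `distinct_n` are assumptions of this sketch.

```python
from typing import Iterable, List, Tuple


def ngrams(tokens: List[str], n: int) -> List[Tuple[str, ...]]:
    """All contiguous n-token windows in a token list."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def distinct_n(texts: Iterable[str], n: int = 2) -> float:
    """Unique n-grams divided by total n-grams across all texts (lowercased, whitespace-split)."""
    total = 0
    unique = set()
    for text in texts:
        grams = ngrams(text.lower().split(), n)
        total += len(grams)
        unique.update(grams)
    # Texts shorter than n contribute no n-grams; avoid dividing by zero.
    return len(unique) / total if total else 0.0
```

Two identical instructions such as "write a poem" give a distinct-2 of 0.5. Two instructions that share no bigrams give 1.0. A higher value for a set of backtranslated instructions would be consistent with broader coverage, though the metric says nothing about complexity.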

Implications and Future Developments

This research has several implications:

- Enhanced Fine-Tuning Data: The back-and-forth translation method provides a scalable way to create high-quality instruction-tuning datasets, combining the diversity of web data with the precision of model-generated content.

- Improved LLM Alignment: Combining backtranslation with rewriting yields instruction data grounded in real-world documents. This improves LLM alignment and could make the resulting models more useful across a range of AI systems.

- Scalability and Accessibility: Earlier methods depend on restricted-access or hard-to-process corpora. The proposed method instead uses open-source web documents such as Dolma, which makes it easier for others to reproduce and build on.

Future Work

Future research can explore:

- Enhanced Curation Techniques: Further improvements in data quality can be achieved by integrating additional curation techniques and quality filters.

- Domain-Specific Fine-Tuning: Extending the method to specialized domains, such as legal or medical texts, could benefit from adapting the rewriting step to use models fine-tuned on domain-specific datasets.

- Pre-Training Implications: Investigating the impact of instruction back-and-forth translated data on the pre-training phase could provide insights into its broader applicability in LLM development pipelines.

Conclusion

Overall, the paper by Nguyen et al. presents a robust and scalable method for generating high-quality instructional data for LLM fine-tuning. The instruction back-and-forth translation method stands out by effectively combining the breadth of web-sourced information with the controlled quality enhancements of model-based rewriting, paving the way for better aligned and more capable LLMs.