Scaling LLM Test-Time Compute Optimally can be More Effective than Scaling Model Parameters

Snell et al. ask how effectively LLMs can use additional computation at inference time to improve performance on challenging prompts. The work examines the trade-off between scaling test-time compute and scaling pretraining compute, a question that matters both for understanding LLMs and for deploying them in practice.

Summary of Methods and Key Findings

Key Issues Addressed

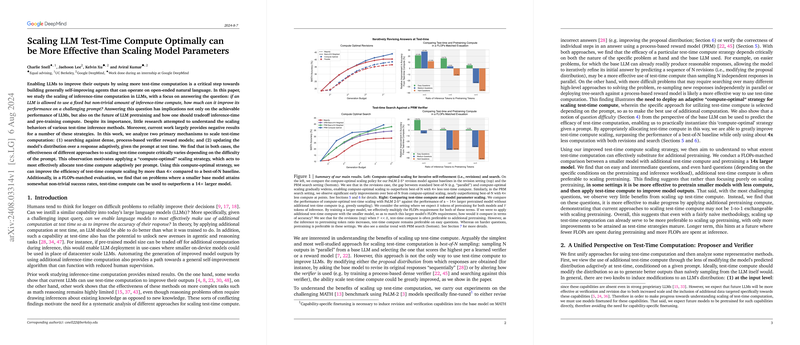

- Mechanisms for Scaling Test-Time Compute: The authors analyze two primary mechanisms: (1) searching against dense, process-based verifier reward models and (2) adaptively updating the model's distribution over responses at test time, given the prompt.

- Evaluation on Problem Difficulty: The paper finds that the effectiveness of these methods varies depending on prompt difficulty, necessitating an adaptive "compute-optimal" strategy.
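The adaptive idea above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the fixed bin edges, the strategy names, and the accuracy table are hypothetical placeholders, and the sketch assumes difficulty is estimated from the mean verifier score of a handful of samples.

```python
from statistics import mean

def difficulty_bin(verifier_scores, edges=(0.2, 0.4, 0.6, 0.8)):
    """Map the mean verifier score of a few samples to a bin (0 = easiest, 4 = hardest).

    The fixed edges are an assumption made for illustration only.
    """
    avg = mean(verifier_scores)
    # A higher average score means the model already does well, so the prompt is easier.
    return sum(avg < edge for edge in edges)

def pick_strategy(bin_index, accuracy_table):
    """Return the strategy with the best held-out accuracy for this difficulty bin."""
    per_strategy = accuracy_table[bin_index]
    return max(per_strategy, key=per_strategy.get)

# Hypothetical validation accuracies per bin and strategy (made-up numbers).
ACCURACY_TABLE = {
    0: {"sequential_revisions": 0.90, "beam_search": 0.85},
    4: {"sequential_revisions": 0.10, "beam_search": 0.15},
}

if __name__ == "__main__":
    b = difficulty_bin([0.92, 0.88, 0.95])
    print(b, pick_strategy(b, ACCURACY_TABLE))
```

The design choice here is that strategy selection is a lookup fitted on held-out data, so the only per-prompt cost at test time is the difficulty estimate itself.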

Main Contributions

- Compute-Optimal Scaling Strategy: Introducing a strategy that adaptively allocates test-time compute based on prompt difficulty to maximize performance.

- Empirical Findings:

  - Significant Efficacy of Test-Time Computation: Across problem difficulty levels, compute-optimal scaling can outperform a best-of-N baseline while using about 4x less test-time computation.

  - Trade-off Between Pretraining and Inference: In a FLOPs-matched comparison, on problems where the smaller base model already attains a non-trivial success rate, scaling test-time compute can outperform a 14x larger model. On the hardest problems, additional pretraining compute remains the more effective investment.
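To make the pretraining-versus-inference comparison concrete, the sketch below works through the FLOPs bookkeeping under two common approximations, which are assumptions here: pretraining costs about 6 FLOPs per parameter per token, and inference costs about 2. Under those assumptions, it computes how much a small model can multiply its inference tokens before its total compute equals that of a model `m` times larger. The function names and example values are illustrative.

```python
def pretrain_flops(n_params, pretrain_tokens):
    """Approximate pretraining cost: 6 FLOPs per parameter per token (assumption)."""
    return 6 * n_params * pretrain_tokens

def inference_flops(n_params, inference_tokens):
    """Approximate inference cost: 2 FLOPs per parameter per token (assumption)."""
    return 2 * n_params * inference_tokens

def matched_inference_multiplier(m, pretrain_tokens, inference_tokens):
    """Factor by which the small model may scale its inference tokens so that its
    total FLOPs equal those of a model with m times as many parameters.

    Derived by equating
        6*N*Dp + 2*N*Di*k  ==  6*m*N*Dp + 2*m*N*Di
    and solving for k.
    """
    return m + 3 * (pretrain_tokens / inference_tokens) * (m - 1)

if __name__ == "__main__":
    # Illustrative values only: 1B parameters, 1T pretraining tokens, 100B inference tokens.
    n, d_pre, d_inf, m = 1e9, 1e12, 1e11, 14
    k = matched_inference_multiplier(m, d_pre, d_inf)
    small_total = pretrain_flops(n, d_pre) + inference_flops(n, d_inf * k)
    large_total = pretrain_flops(m * n, d_pre) + inference_flops(m * n, d_inf)
    print(k, small_total, large_total)
```

The multiplier grows with the ratio of pretraining tokens to inference tokens, so the smaller the expected inference load, the more test-time compute the small model can spend per query within the same total budget.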

Detailed Analysis

Proposer and Verifier Framework (Section 2)

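The paper adopts a unified perspective in which test-time methods modify the model's response distribution at one of two levels: the input level, where the proposal distribution itself is refined, and the output level, where a verifier scores or searches over sampled candidates. The authors study one instance of each: revision models, which are trained to revise their own answers iteratively, and process-based reward models (PRMs), which score the intermediate steps of a solution.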
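As a rough illustration of the proposer and verifier roles, the sketch below implements verifier-weighted best-of-N selection: the proposer samples answers, the verifier scores each one, and scores are summed across samples that reach the same final answer. The `propose` and `verify` callables are hypothetical stand-ins for a language model and a learned verifier.

```python
from collections import defaultdict

def best_of_n_weighted(prompt, propose, verify, n):
    """Sample n answers and return the one with the largest total verifier score.

    propose(prompt) -> final answer (hashable); verify(prompt, answer) -> float.
    Both callables are assumptions made for this sketch.
    """
    totals = defaultdict(float)
    for _ in range(n):
        answer = propose(prompt)
        # Identical final answers pool their verifier scores.
        totals[answer] += verify(prompt, answer)
    return max(totals, key=totals.get)

if __name__ == "__main__":
    samples = iter(["4", "5", "4"])
    scores = {"4": 0.4, "5": 0.7}
    print(best_of_n_weighted("2 + 2 = ?", lambda p: next(samples),
                             lambda p, a: scores[a], n=3))
```

Pooling scores means a frequently sampled answer with moderate scores can beat a single high-scoring outlier, which combines majority voting with verifier confidence.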

Search and Beam Search (Section 3)

A comparison of search algorithms run against PRMs shows that beam search outperforms best-of-N at lower generation budgets, but its advantage shrinks at higher budgets, which the authors attribute to over-optimization of the PRM's predictions. Lookahead search, despite being the most sophisticated of the methods, generally underperforms at a matched generation budget because of the extra computation spent simulating lookahead rollouts.
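A step-level beam search against a PRM might look like the following sketch. It is a simplified illustration under stated assumptions, not the paper's code: `propose_step`, `prm_score`, and `is_done` are hypothetical callables, and `n` and `m` stand for the number of beams and the number of expansions per surviving beam.

```python
def prm_beam_search(prompt, propose_step, prm_score, is_done, n=4, m=2, max_steps=8):
    """Beam search over solution steps, guided by a process reward model (PRM).

    propose_step(prompt, steps) -> next step
    prm_score(prompt, steps)    -> float score for the partial solution
    is_done(steps)              -> True once the solution is complete
    All three callables are assumptions made for this sketch.
    """
    # Start from n sampled first steps.
    beams = [[propose_step(prompt, [])] for _ in range(n)]
    for _ in range(max_steps):
        # Rank partial solutions by PRM score and keep the top n // m.
        beams.sort(key=lambda steps: prm_score(prompt, steps), reverse=True)
        survivors = beams[: max(1, n // m)]
        if all(is_done(steps) for steps in survivors):
            return survivors[0]
        # Expand each unfinished survivor with m new steps; carry finished ones over.
        beams = []
        for steps in survivors:
            if is_done(steps):
                beams.append(steps)
            else:
                beams.extend(steps + [propose_step(prompt, steps)] for _ in range(m))
    return max(beams, key=lambda steps: prm_score(prompt, steps))
```

Because every pruning decision trusts the PRM, errors in its scores compound across steps, which is one way to read the over-optimization effect described above.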

Revisions Over Parallel Sampling (Section 4)

The paper finds that the best ratio of sequential revisions (iterative self-improvement) to parallel sampling for a fixed test-time budget depends on problem difficulty. Easier problems benefit most from purely sequential revisions, while harder problems do best with a balance of sequential revisions and parallel sampling.
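The sequential-to-parallel trade-off can be sketched as a single knob on a fixed generation budget. In this minimal illustration, `chain_length` controls how many sequential revisions each independent chain receives. The `propose`, `revise`, and `verify` callables are hypothetical, and picking the single highest-scoring candidate is a simplification of the selection step.

```python
def revise_and_select(prompt, propose, revise, verify, budget, chain_length):
    """Spend `budget` generations as parallel chains of `chain_length` sequential revisions.

    chain_length == 1       -> purely parallel sampling
    chain_length == budget  -> one fully sequential revision chain
    propose, revise, and verify are assumptions made for this sketch.
    """
    num_chains = max(1, budget // chain_length)
    candidates = []
    for _ in range(num_chains):
        answer = propose(prompt)
        candidates.append(answer)
        # Each later answer in the chain is conditioned on the previous attempt.
        for _ in range(chain_length - 1):
            answer = revise(prompt, answer)
            candidates.append(answer)
    # Select across all chains and all revisions with the verifier.
    return max(candidates, key=lambda a: verify(prompt, a))

if __name__ == "__main__":
    # Toy stand-ins: answers are integers and each revision improves by one.
    best = revise_and_select("q", lambda p: 0, lambda p, a: a + 1,
                             lambda p, a: a, budget=8, chain_length=4)
    print(best)
```

Sweeping `chain_length` per difficulty bin on held-out prompts is one way to look for the ratio the paragraph above describes.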

Implications

Practical Implications

- Deployment in Resource-Constrained Environments: Smaller models augmented with test-time compute could be employed in scenarios where large-scale models are infeasible, such as on-device applications.

- Adaptive Compute Allocation: The notion of compute-optimal scaling provides a framework for dynamically adjusting test-time computation based on real-time evaluation of problem difficulty, relevant to both cost and performance optimization.

Theoretical Implications

- Improving Self-Improvement Algorithms: Automated iterative self-improvement using test-time compute hints at the development of more autonomous agents capable of operating with reduced human intervention.

- Trade-off Understanding: The analysis challenges the conventional wisdom of purely relying on scaling pretraining and opens avenues for more nuanced strategies that balance pretraining and inference compute.

Future Work

The paper underscores several avenues for future exploration. Key areas include further optimizing the trade-offs between pretraining and inference compute, finding efficient methods to predict prompt difficulty dynamically, and integrating test-time compute strategies more deeply with training regimes.

Conclusion

Snell et al. provide a rigorous analysis showing that careful allocation of test-time compute can substantially improve LLM performance, offering an alternative to simply scaling model parameters. The findings bear on how to deploy LLMs efficiently and on the development of self-improving AI systems. Adaptive compute allocation and tighter integration of training and inference strategies are promising directions for extending this research.