Effective Multi-task Parameter Efficient Fine-Tuning with a Mixture of Dyadic Experts

The paper "MoDE: Effective Multi-task Parameter Efficient Fine-Tuning with a Mixture of Dyadic Experts" introduces a parameter-efficient fine-tuning approach for adapting large language models (LLMs) to a diverse array of tasks. Existing methods built on Low-Rank Adaptation (LoRA) exhibit redundancy in their down-projection matrices in multi-task settings. The proposed Mixture of Dyadic Experts (MoDE) addresses this with a single down-projection matrix shared across tasks and atomic rank-one adapters. This design supports fine-grained task specialization while keeping the number of trainable parameters low.

Background and Motivation

Parameter-efficient fine-tuning (PEFT) methods like LoRA have significantly reduced the computational overhead of adapting LLMs to downstream tasks by adding low-rank projection matrices to the model's architecture. LoRA works well on individual tasks but falls short in multi-task scenarios, where the down-projection matrices learned for different tasks turn out to be largely redundant. Mixture-of-Experts (MoE) architectures attempt to address these shortcomings by combining multiple specialized sub-models. However, MoE-based adapters often still suffer from parameter redundancy and inefficient use of resources.

Key Innovations

Shared Down-Projection Matrix

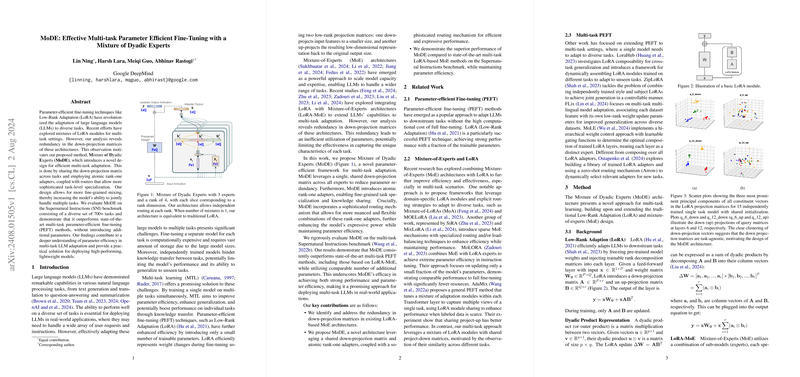

The principal motivation for the MoDE architecture is the observed redundancy in the down-projection matrices of LoRA modules across tasks. An empirical analysis using Principal Component Analysis (PCA) showed that the vectors of the down-projection matrices cluster closely together, indicating task-agnostic behavior. By sharing a single down-projection matrix across all tasks, MoDE reduces the number of trainable parameters and promotes knowledge transfer among tasks.
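To make the parameter saving concrete, the following is a minimal sketch that counts adapter parameters for one adapted weight matrix, with and without a shared down-projection. The function names, the layer sizes, and the choice to ignore router parameters are assumptions made for illustration; they are not taken from the paper.

```python
def independent_adapter_params(d_in, d_out, rank, n_tasks):
    """Each task keeps its own down-projection (rank x d_in)
    and its own up-projection (d_out x rank)."""
    return n_tasks * rank * (d_in + d_out)


def shared_down_adapter_params(d_in, d_out, rank, n_tasks):
    """One down-projection is shared by all tasks;
    each task keeps only its own up-projection."""
    return rank * d_in + n_tasks * rank * d_out


# Hypothetical sizes, chosen only for illustration.
d_in, d_out, rank, n_tasks = 1024, 1024, 4, 16

independent = independent_adapter_params(d_in, d_out, rank, n_tasks)
shared = shared_down_adapter_params(d_in, d_out, rank, n_tasks)
print(f"independent: {independent}, shared: {shared}, "
      f"ratio: {shared / independent:.2f}")
```

Because the down-projection cost is paid once, the shared variant approaches half the parameters of the independent one as the number of tasks grows, assuming equal input and output dimensions.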

Fine-Grained Routing with Dyadic Experts

A significant innovation of MoDE is the introduction of atomic rank-one adapters, called dyadic experts, that allow fine-grained control when adapting to multiple tasks. Instead of a few experts that each span several ranks, MoDE employs many rank-one experts that are selected at the level of individual ranks. A routing mechanism then mixes and matches these rank-one adapters to specialize for each task.
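The idea can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' implementation: the class name `MoDESketch`, the dense softmax router that conditions on the input vector, the initialization, and the omission of any LoRA-style scaling factor are all hypothetical choices. Each rank `j` has one shared down-projection row `A[j]` and `n_experts` candidate up-projection vectors `B[j, i]`; the router mixes the candidates separately for every rank.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


class MoDESketch:
    """Hypothetical sketch of a mixture of rank-one (dyadic) experts."""

    def __init__(self, d_in, d_out, rank, n_experts, seed=0):
        rng = np.random.default_rng(seed)
        # Shared down-projection: one row per rank, used by every expert.
        self.A = rng.normal(0.0, 0.02, size=(rank, d_in))
        # Up-projection vectors: n_experts candidates per rank.
        # Zero init (as LoRA does for its up-projection) so the update starts at 0.
        self.B = np.zeros((rank, n_experts, d_out))
        # Assumed router: per-rank logits over experts, computed from the input.
        self.W_router = rng.normal(0.0, 0.02, size=(d_in, rank, n_experts))

    def delta(self, x):
        """Adapter output for x of shape (batch, d_in); returns (batch, d_out)."""
        z = x @ self.A.T                                      # (batch, rank)
        logits = np.einsum("bd,dre->bre", x, self.W_router)   # (batch, rank, experts)
        gates = softmax(logits, axis=-1)                      # mix experts per rank
        mixed_up = np.einsum("bre,reo->bro", gates, self.B)   # (batch, rank, d_out)
        # Sum of rank-one contributions: sum_j z_j * (mixed up-vector for rank j).
        return np.einsum("br,bro->bo", z, mixed_up)


layer = MoDESketch(d_in=8, d_out=6, rank=4, n_experts=16)
x = np.random.default_rng(1).normal(size=(3, 8))
print(layer.delta(x).shape)
```

With a single expert per rank the gates are all 1 and the sketch reduces to an ordinary LoRA update, which is a useful sanity check on the formulation.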

Experimental Validation

MoDE was evaluated on the Supernatural Instructions (SNI) benchmark, which consists of over 700 diverse tasks. The results show that MoDE consistently outperforms state-of-the-art multi-task PEFT methods without introducing additional parameters. Key experimental results include:

- Performance Metrics: MoDE achieves a ROUGE-L score of 60.00 on the multi-task SNI dataset when configured with 16 experts and rank-4 adapters, outperforming traditional LoRA (56.11), MoLORA (57.77), and MoLORA-SD (58.28).

- Parameter Efficiency: MoDE improves performance while remaining parameter-efficient. For instance, the MoDE 16×4 configuration adds roughly 6.64% more parameters relative to the base model while still achieving notable performance gains.

- Task-level Win Rates: MoDE exhibited a win rate of 78% against traditional LoRA, 73% against MoLORA, and 68% against MoLORA-SD across individual tasks within the benchmark, confirming its robustness.
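A task-level win rate of this kind can be computed as the fraction of tasks on which one method scores higher than another. The sketch below is one plausible reading: the function name, the task names, the scores, and the treatment of ties as non-wins are all assumptions, since this summary does not state how ties were handled.

```python
def win_rate(scores_a, scores_b):
    """Fraction of shared tasks where method A strictly beats method B.

    scores_a, scores_b: dicts mapping task name -> per-task score
    (for example ROUGE-L). Ties count as non-wins (an assumption).
    """
    tasks = scores_a.keys() & scores_b.keys()
    if not tasks:
        raise ValueError("no tasks in common")
    wins = sum(scores_a[t] > scores_b[t] for t in tasks)
    return wins / len(tasks)


# Made-up per-task scores, for illustration only.
method_a = {"task_1": 60.0, "task_2": 50.0, "task_3": 40.0, "task_4": 55.0}
method_b = {"task_1": 55.0, "task_2": 52.0, "task_3": 40.0, "task_4": 50.0}
print(win_rate(method_a, method_b))
```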

Generalization and Additional Insights

MoDE's architecture also generalizes across different choices of rank and number of experts. The paper explored multiple configurations, varying the number of rank-one experts and how they are combined, and found that MoDE adapts effectively to different parameter budgets and maintains robust performance across hyperparameter settings.

Practical and Theoretical Implications

- Practical Implications: MoDE's architecture paves the way for deploying LLMs in real-world applications that require handling numerous tasks efficiently. Reduced parameter redundancy translates into lower memory footprint and computational costs, making this approach highly suitable for resource-constrained environments.

- Theoretical Implications: The introduction of shared down-projection matrices and fine-grained routing mechanisms offers new avenues for research into parameter-efficient architectures. Such innovations could further enhance our understanding of multi-task adaptation, leading to the development of even more sophisticated and efficient model designs.

Future Prospects

Future research directions include optimizing MoDE's router to further improve efficiency, exploring task-specific patterns in the routing decisions, and investigating generalization to unseen tasks. Additionally, evaluating MoDE on larger models and combining it with other PEFT techniques could reveal deeper insights and broader applications.

Conclusion

MoDE represents a significant advance in parameter-efficient fine-tuning for multi-task LLM adaptation. By reducing parameter redundancy through a shared down-projection matrix and enabling fine-grained task specialization with rank-one adapters, MoDE balances performance, efficiency, and adaptability. The architecture holds promise for advancing multi-task learning, with implications for both practical deployment and theoretical research.