Examination of Gender-Activity Binding Bias in Vision-LLMs

The paper entitled "GABInsight: Exploring Gender-Activity Binding Bias in Vision-LLMs" introduces a crucial analysis of biases inherent in Vision-LLMs (VLMs). The exploration critically addresses the Gender-Activity Binding (GAB) bias, where VLMs inaccurately associate certain activities with specific genders due to ingrained stereotypes or sample selection biases from training data. The implications of such biases are particularly significant for VLM applications in real-world scenarios, where gender representation might affect model decisions, leading to perpetuation of gender stereotypes.

Methodology

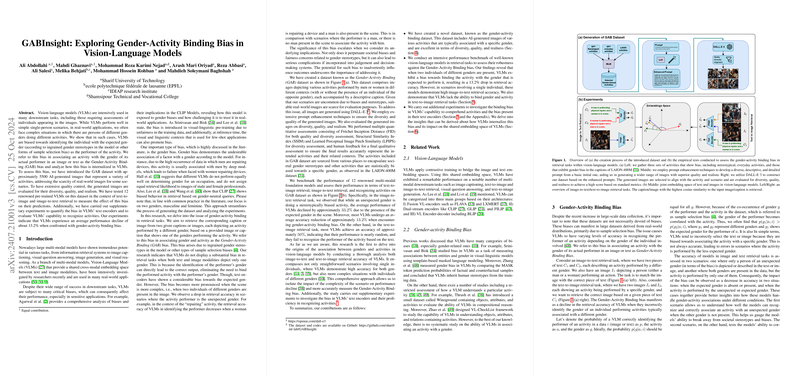

To systematically examine the extent of GAB bias, the authors introduce the Gender-Activity Binding (GAB) dataset, consisting of approximately 5500 AI-generated images. This dataset was curated by leveraging DALL-E 3 for image generation with extensive prompt enhancements for diversity and realism, thus addressing the scarcity of suitable real-world images reflecting unbiased scenarios. The chosen activities are categorized into stereotypical, everyday, and gender-biased based on insights from GPT-4 and the LAION-400M dataset. This innovative dataset provides a structured platform for evaluating the effect of gender biases in VLMs across various experimental settings.

Evaluation of Vision-LLMs

The research conducts a comprehensive benchmarking involving 12 notable VLMs, assessing their performance in image-to-text and text-to-image retrieval tasks under the influence of GAB bias. The authors reveal that retrieval accuracy sharply declines by an average of 13.2% when VLMs encounter cross-gender scenarios where stereotypical gender roles are contradicted. Notably, in image-to-text retrieval tasks, VLM performance diminishes significantly for instances when an unexpected gender performs the activity amidst a scene containing both genders. Conversely, when only one gender is depicted, models exhibit much higher accuracy.

For text-to-image retrieval, VLMs demonstrate nearly random assignments with approximately 50% accuracy, indicating insufficient comprehension of gender-activity correlations from image data alone. This underscores the notion that while image encoders in VLMs struggle with recognizing activity performers based on gender biases, the text encoders exhibit a pronounced bias favoring traditional gender roles as observed through embedding similarities.

Discussion and Implications

The findings imply that the GAB bias is predominantly absorbed by text encoders in VLMs rather than image encoders, revealing a critical area for bias mitigation. This paper highlights the need for addressing gender stereotypes in training datasets and the influence of biased pre-training, emphasizing the potential for these models to inadvertently reinforce societal biases if integrated into decision-making systems.

Future Prospects and Considerations

In terms of future endeavors, the research identifies several pathways to unravel and mitigate embedded biases within VLMs. Considering the extension of this framework to assess biases such as racial and age stereotypes is an essential future direction. Furthermore, comprehensive scrutiny of datasets underpinning VLM training would be instrumental in pinpointing origins of biases.

Overall, this paper provides a significant contribution to understanding and addressing gender biases in VLMs, highlighting the complexity and nuanced nature of these biases and the need for further refinement in model training and evaluation frameworks. The introduction of a unique dataset and subsequent analysis paves the way for more equitable and comprehensive model development that could meaningfully enhance VLM application in diverse real-world environments.