Adaptive Prompting Strategies in AI-Generated Image Recreation

The paper "As Generative Models Improve, People Adapt Their Prompts" examines how the capabilities of generative AI models shape user behavior, focusing on text-to-image generation with OpenAI's DALL-E models. Drawing on an online experiment in which 1,891 participants collectively wrote more than 18,000 prompts, the authors study how model capabilities and user prompting strategies interact.

Experimental Design and Model Comparison

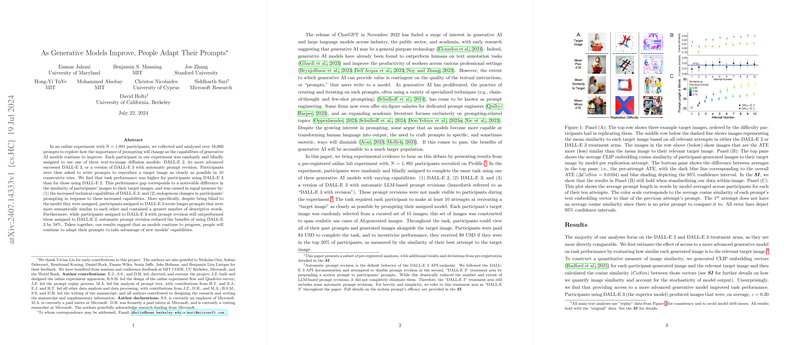

The authors conducted a randomized controlled trial comparing three text-to-image models: DALL-E 2, DALL-E 3, and DALL-E 3 with automatic prompt revision. Each participant was asked to reproduce a target image as closely as possible over ten attempts within a 25-minute window. Participants did not know which model they had been assigned to, so differences in their behavior can be attributed to the model itself rather than to their expectations about it.
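As a rough illustration of random assignment to the three arms, here is a minimal sketch. The function name `assign_arms` and the shuffle-then-round-robin (balanced) scheme are assumptions for illustration, not the authors' documented procedure. Blinding corresponds to the participant-facing interface never showing the arm label.

```python
import random
from collections import Counter

# The three arms named in the study; the assignment scheme below is a hypothetical sketch.
ARMS = ("DALL-E 2", "DALL-E 3", "DALL-E 3 + prompt revision")


def assign_arms(participant_ids, seed=0):
    """Hypothetical balanced randomization: shuffle participants, then deal arms round-robin."""
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    # Dealing arms in turn keeps arm sizes within one participant of each other.
    return {pid: ARMS[i % len(ARMS)] for i, pid in enumerate(ids)}


assignment = assign_arms(range(1891), seed=42)
print(Counter(assignment.values()))
```

Simple per-participant coin flips would also be a valid randomization; the round-robin variant is shown only because it keeps the arms equally sized.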

Analytical Framework

Performance was assessed with two metrics: cosine similarity between CLIP embeddings, which captures semantic similarity, and DreamSim, which captures perceptual similarity between the generated and target images. The paper reports a significant improvement in performance for users of DALL-E 3 compared with users of DALL-E 2. The authors attribute this gain to two sources: the model's superior technical capabilities and changes in how users prompted it. Specifically, users assigned to DALL-E 3 wrote longer, more descriptive prompts, and successive prompts from the same user were more similar to one another.
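The CLIP-based score reduces to a cosine similarity between two embedding vectors. The minimal sketch below uses toy three-dimensional vectors in place of real CLIP image embeddings, which would come from a CLIP image encoder. The helper names are hypothetical, and `successive_similarity` is only one assumed way to quantify how similar consecutive prompts are, given embeddings of those prompts; the paper's exact prompt-similarity measure may differ. DreamSim, a learned perceptual metric, is not reproduced here.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length embedding vectors (range -1 to 1)."""
    if len(u) != len(v):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_u * norm_v)


def score_attempts(target_emb, attempt_embs):
    """Score each generated image by its similarity to the target image embedding."""
    return [cosine_similarity(target_emb, e) for e in attempt_embs]


def successive_similarity(prompt_embs):
    """Hypothetical measure: similarity of each prompt embedding to the previous one."""
    return [cosine_similarity(a, b) for a, b in zip(prompt_embs, prompt_embs[1:])]


# Toy vectors standing in for real CLIP embeddings.
target = [1.0, 0.0, 0.0]
attempts = [[0.0, 1.0, 0.0], [1.0, 1.0, 0.0], [1.0, 0.1, 0.0]]
print(score_attempts(target, attempts))
```

Because cosine similarity ignores vector length, only the direction of each embedding matters, which is why scaled copies of a vector score as identical.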

Decomposition of Improvement into Model and Prompting Effects

By replaying participants' prompts on a model other than the one they were written for, the paper decomposes the observed performance gain into a direct model effect and an indirect prompting effect. The decomposition shows that roughly half of the gain is attributable to DALL-E 3's improved technical capabilities, while the other half comes from users adapting their prompts to those capabilities. This finding underscores that model progress and user behavior are intertwined: as models improve, users refine their prompts to exploit the new capabilities.
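The replay decomposition can be written as a telescoping difference of mean scores. The sketch below assumes three hypothetical quantities (DALL-E 2 users' prompts on DALL-E 2, the same prompts replayed on DALL-E 3, and DALL-E 3 users' prompts on DALL-E 3); the function name and the input numbers are invented for illustration and are not the paper's results or its exact estimator.

```python
def decompose_gain(old_prompts_old_model, old_prompts_new_model, new_prompts_new_model):
    """Split the total gain into a model effect and a prompting effect (hypothetical helper).

    old_prompts_old_model: mean score of DALL-E 2 users' prompts run on DALL-E 2
    old_prompts_new_model: mean score of the same prompts replayed on DALL-E 3
    new_prompts_new_model: mean score of DALL-E 3 users' prompts run on DALL-E 3
    """
    total = new_prompts_new_model - old_prompts_old_model
    # Same prompts, better model: isolates the model's contribution.
    model_effect = old_prompts_new_model - old_prompts_old_model
    # Same model, adapted prompts: isolates the contribution of prompt adaptation.
    prompting_effect = new_prompts_new_model - old_prompts_new_model
    return total, model_effect, prompting_effect


# Invented illustrative scores, NOT figures from the paper.
total, model_effect, prompting_effect = decompose_gain(0.70, 0.74, 0.78)
print(f"total={total:.3f} model={model_effect:.3f} prompting={prompting_effect:.3f}")
print(f"model share={model_effect / total:.0%}")
```

By construction the two effects sum to the total gain. Replaying in the other direction (DALL-E 3 users' prompts on DALL-E 2) could yield a somewhat different split, so the choice of replay path is part of the analysis.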

Implications and Future Directions

The implications of this paper are twofold. Practically, it highlights the need for ongoing user training and interface improvements to realize the full benefit of advanced generative models. Theoretically, it suggests that the interaction between users and AI models is dynamic and evolving, with users playing a crucial role in realizing the potential of AI advancements.

Future research could extend this work by exploring how different user demographics adapt their prompting strategies, examining the extent to which these strategies can be generalized across different generative models, or evaluating the impact of additional training or automated prompt optimization features on user performance. Such studies could further elucidate the interplay between human ingenuity and machine intelligence, guiding the development of more intuitive and effective AI tools.

In conclusion, this paper provides valuable insights into how improvements in generative AI models influence user behavior, demonstrating that advancements in AI capabilities and human adaptations are mutually reinforcing. This dynamic interaction has significant implications for the design and deployment of future AI systems.