RAG-QA Arena: Evaluating Domain Robustness for Long-form Retrieval Augmented Question Answering

Introduction

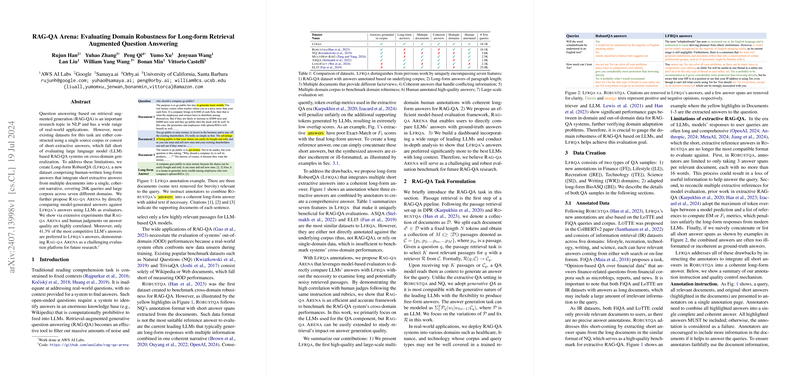

The paper introduces Long-form RobustQA (LFRQA), a dataset designed to close a gap in how LLM-based retrieval-augmented generation question answering (RAG-QA) systems are evaluated. Existing datasets often rely on single-source corpora and short extractive answers; LFRQA instead provides human-written long-form answers synthesized from multiple documents. It covers 26,000 queries across seven domains, which makes it suitable for assessing the cross-domain robustness of RAG-QA systems.

Dataset Characteristics

LFRQA is constructed to address specific shortcomings of previous datasets:

- Grounding in Corpus: Answers in LFRQA are explicitly annotated against the underlying corpus, so each answer is tied to source material that a retriever can find.

- Long-form Answers: The dataset includes paragraph-length answers, making it more suitable for evaluating the generative capabilities of modern LLMs.

- Multi-document Integration: Answers are derived from multiple documents, requiring models to synthesize information from several sources.

- Coherence and Completeness: Annotators integrate conflicting information into coherent narratives, enhancing the quality and applicability of responses.

- Diverse Domains: The dataset spans multiple domains including biomedical, finance, lifestyle, recreation, technology, science, and writing, providing a robust benchmark for cross-domain performance.

- Human Quality Control: Answers in LFRQA are human-annotated and quality-controlled, which supports the reliability of the benchmark.

- Large-scale Evaluation: A large evaluation set (26,000 queries) enables extensive experimentation and benchmarking.
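
To make the properties above concrete, the following is a minimal sketch of how a single benchmark entry could be represented and sanity-checked. The class name `LfrqaRecord`, its field names, and the `validate` helper are all hypothetical and chosen for illustration; they are not the released file format.

```python
from dataclasses import dataclass, field
from typing import List

# The seven domains named in the list above.
DOMAINS = {"biomedical", "finance", "lifestyle", "recreation",
           "technology", "science", "writing"}


@dataclass
class LfrqaRecord:
    """Hypothetical schema for one query/answer pair (an assumption, not the real format)."""
    query: str
    domain: str
    answer: str  # human-written long-form answer
    source_doc_ids: List[str] = field(default_factory=list)  # documents the answer is grounded in

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the record looks well-formed."""
        problems = []
        if self.domain not in DOMAINS:
            problems.append(f"unknown domain: {self.domain}")
        if not self.source_doc_ids:
            problems.append("answer is not grounded in any document")
        if not self.answer.strip():
            problems.append("empty answer")
        return problems
```

A record with a known domain, a non-empty answer, and at least one source document passes `validate()` with an empty list; each missing property adds one message.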

RAG-QA Task Formulation

In the RAG-QA pipeline, two primary components are considered:

- Passage Retrieval: A retriever such as ColBERTv2 selects the most relevant passages from a large document collection.

- Answer Generation: A leading LLM generates a coherent and accurate answer based on the retrieved passages.

The paper focuses on the performance of various LLMs, evaluating their robustness and effectiveness in generating long-form answers across diverse domains.
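
The two-stage pipeline can be sketched as follows. This is a toy illustration under stated assumptions: `overlap_score` is a simple token-overlap stand-in rather than ColBERTv2, the prompt wording in `build_prompt` is invented, and `generate` is a placeholder for whatever LLM call is used. None of these names come from the paper.

```python
from collections import Counter
from typing import Callable, List


def overlap_score(query: str, passage: str) -> int:
    """Toy relevance score: count of shared lowercase tokens (a stand-in, not ColBERTv2)."""
    q = Counter(query.lower().split())
    p = Counter(passage.lower().split())
    return sum((q & p).values())


def retrieve(query: str, passages: List[str], k: int = 5) -> List[str]:
    """Return the k highest-scoring passages; ties keep corpus order (sorted is stable)."""
    ranked = sorted(passages, key=lambda p: overlap_score(query, p), reverse=True)
    return ranked[:k]


def build_prompt(query: str, retrieved: List[str]) -> str:
    """Number the retrieved passages and append the question (hypothetical prompt wording)."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(retrieved))
    return (
        "Answer the question using only the passages below.\n\n"
        f"{context}\n\nQuestion: {query}\nAnswer:"
    )


def rag_answer(query: str, passages: List[str],
               generate: Callable[[str], str], k: int = 5) -> str:
    """Retrieve top-k passages, then hand the assembled prompt to the generator."""
    return generate(build_prompt(query, retrieve(query, passages, k)))
```

The parameter `k` controls how many passages reach the generator, so the same sketch covers runs with different retrieval depths by changing a single argument.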

Evaluation Framework

The paper introduces a novel evaluation framework termed RAG-QA Arena, which implements a pairwise comparison approach:

- Human and Model-based Evaluations: Both human judges and LLM-based evaluators compare each model-generated answer against the corresponding LFRQA answer and indicate which one they prefer. Using LLM-based evaluators makes the comparison scalable.

- Evaluation Metrics: Answer quality is measured with pairwise preference metrics: win-rate (the share of comparisons in which the model's answer is preferred over LFRQA's) and win+tie rate (the share in which it is preferred or judged equally good).
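
As a minimal sketch of the two metrics, assuming each comparison has already been reduced to one of the labels `"win"`, `"tie"`, or `"loss"` from the model's point of view (the label strings and the function name `pairwise_rates` are assumptions made for this example):

```python
from typing import Iterable, Tuple


def pairwise_rates(judgments: Iterable[str]) -> Tuple[float, float]:
    """Return (win_rate, win_plus_tie_rate) for a model compared against reference answers.

    Each judgment is 'win', 'tie', or 'loss' from the model's point of view.
    """
    labels = list(judgments)
    if not labels:
        raise ValueError("no judgments to aggregate")
    unknown = set(labels) - {"win", "tie", "loss"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    wins = labels.count("win")
    ties = labels.count("tie")
    n = len(labels)
    return wins / n, (wins + ties) / n


# One win, one tie, and two losses out of four comparisons.
win_rate, win_tie_rate = pairwise_rates(["win", "tie", "loss", "loss"])
print(win_rate, win_tie_rate)  # 0.25 0.5
```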

The results show a strong correlation between human judgments and model-based evaluations, which supports using LLMs as evaluators in this benchmark.
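
One common way to quantify such agreement is Pearson's correlation coefficient over paired scores, for example per-model win rates as judged by humans and by an LLM. The sketch below assumes that choice; this summary does not state which statistic the paper uses, and the function name `pearson` is illustrative.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length sequences of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (spread_x * spread_y)
```

Values near 1 would indicate that the LLM-based evaluator ranks systems much as human judges do; values near 0 would indicate little linear relationship.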

Experimental Results

The paper conducts extensive experiments using multiple leading LLMs:

- GPT-4-turbo

- GPT-4-0125-preview

- Mixtral-8x22B-Instruct

- Llama-3-70b-Instruct

- Command R+

- Qwen1.5-110b-chat

- GPT-4o

Increasing the number of retrieved passages from 5 to 10 significantly improves performance, particularly for the GPT-4 models. The best win rate against LFRQA was 41.3%, achieved by GPT-4o. In other words, even the strongest model's answers were preferred over LFRQA's in fewer than half of the comparisons, which underscores the quality of LFRQA's long-form answers.

Implications and Future Directions

LFRQA and the RAG-QA Arena framework have several implications for the evaluation of RAG-QA systems:

- Benchmarking Robustness: LFRQA provides a benchmark for evaluating the cross-domain performance of LLM-based RAG-QA systems.

- Improving QA Models: High-quality annotations and a comprehensive evaluation framework can guide the fine-tuning and improvement of generative models.

- Future Research: The dataset and evaluation framework open avenues for future research in prompt engineering, model training, and further exploration of retrieval mechanisms to enhance answer generation quality.

Conclusion

LFRQA and the RAG-QA Arena framework offer a new standard for evaluating RAG-QA systems, emphasizing coherence, completeness, and domain robustness in long-form answer generation. The human-written annotations and pairwise evaluation metrics make this work a useful resource for improving generative QA models.