An Examination of "Multimodal Self-Instruct: Synthetic Abstract Image and Visual Reasoning Instruction Using LLM"

The paper "Multimodal Self-Instruct: Synthetic Abstract Image and Visual Reasoning Instruction Using LLM" addresses a weakness of current large multimodal models (LMMs): they struggle to comprehend abstract images such as charts, maps, and flowcharts, and to reason over them. To close this gap, the authors introduce a multimodal self-instruct pipeline that synthesizes abstract images together with visual reasoning instructions.

Summary

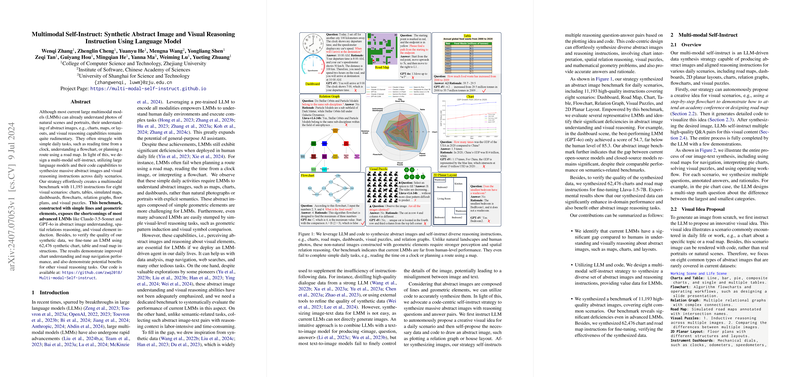

The paper builds a multimodal benchmark of 11,193 instructions across eight visual scenarios: charts, tables, simulated maps, dashboards, flowcharts, relation graphs, floor plans, and visual puzzles. The researchers use LLMs and their code capabilities to generate the synthetic abstract images and the corresponding instructions. Although the images are composed of simple lines and geometric elements, the benchmark exposes deficiencies in abstract image understanding and complex visual reasoning even in advanced models such as Claude-3.5-Sonnet and GPT-4o.
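The code-centric idea behind this pipeline can be illustrated with a minimal sketch. It is not the authors' implementation: in the paper an LLM proposes the data and writes the rendering code, whereas here the data is hardcoded and the image is emitted as SVG so the example runs with the Python standard library alone. The function names (`render_bar_chart_svg`, `build_instructions`, `synthesize_sample`) and the question templates are hypothetical. The point it shows is that, because the image is drawn from known data, the answers to the generated questions can be computed exactly rather than annotated by hand.

```python
from xml.sax.saxutils import escape


def render_bar_chart_svg(title, data, width=400, height=300):
    """Render {label: value} data as a simple SVG bar chart (returned as a string)."""
    margin = 40
    plot_height = height - 2 * margin
    slot_width = (width - 2 * margin) / len(data)
    max_value = max(data.values())  # assumes at least one positive value
    parts = [
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">',
        f'<text x="{width / 2}" y="20" text-anchor="middle">{escape(title)}</text>',
    ]
    for i, (label, value) in enumerate(data.items()):
        bar_height = plot_height * value / max_value
        x = margin + i * slot_width
        y = height - margin - bar_height
        parts.append(
            f'<rect x="{x:.1f}" y="{y:.1f}" width="{slot_width * 0.8:.1f}" '
            f'height="{bar_height:.1f}" fill="steelblue"/>'
        )
        parts.append(
            f'<text x="{x + slot_width * 0.4:.1f}" y="{height - margin + 15}" '
            f'text-anchor="middle">{escape(label)}</text>'
        )
    parts.append("</svg>")
    return "\n".join(parts)


def build_instructions(title, data):
    """Derive question-answer pairs directly from the data behind the chart."""
    highest = max(data, key=data.get)
    lowest = min(data, key=data.get)
    return [
        {
            "question": f"In the chart '{title}', which category has the highest value?",
            "answer": highest,
        },
        {
            "question": f"In the chart '{title}', what is the difference between "
                        f"the highest and lowest values?",
            "answer": str(data[highest] - data[lowest]),
        },
    ]


def synthesize_sample(title, data):
    """Pair a rendered image with instructions grounded in the same data."""
    return {
        "image_svg": render_bar_chart_svg(title, data),
        "instructions": build_instructions(title, data),
    }


# Hypothetical data; in the paper's pipeline an LLM would propose it.
sample = synthesize_sample(
    "Units sold per quarter", {"Q1": 120, "Q2": 95, "Q3": 150, "Q4": 130}
)
for item in sample["instructions"]:
    print(item["question"], "->", item["answer"])
```

The same pattern would extend to the other scenarios by swapping the renderer and the question templates, for example drawing a road map from a graph and deriving route-planning questions from its edges.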

To validate the pipeline, the authors fine-tune an LMM on 62,476 synthetic instructions covering charts, tables, and road maps. The fine-tuned model shows notable improvements in chart understanding and map navigation, which suggests the approach may also benefit other visual reasoning tasks.

Implications and Future Directions

This work has both practical and theoretical implications. On the practical side, a stronger ability to understand and reason about abstract images could enable more effective AI-driven decision support in domains where visual data is central, such as data analysis, geographical navigation, and the interpretation of visualizations.

Theoretically, this research paves the way for further exploration into the integration of code-based image synthesis with LLMs, potentially offering a route to developing more sophisticated multimodal AI systems. It also raises interesting questions about the optimal balance between data-driven learning and rule-based logic in AI training frameworks.

Looking ahead, the research community might expand on this work by exploring the relationships between different types of abstract visual reasoning tasks, investigating how improvements in one domain transfer to others. Additionally, further advancements in visual encoders could lead to more nuanced and powerful LMMs capable of achieving human-like performance in tasks involving abstract reasoning.

In conclusion, the paper by Zhang et al. represents an important step in addressing the limitations of current multimodal models in processing abstract images. By combining code-centric data synthesis with LLM capabilities, the authors provide a framework for improving the abstract image understanding and visual reasoning capacity of AI systems. This research opens new pathways for designing systems that can handle the complex visual data found in many real-world applications.