Fine-Tuning with Divergent Chains of Thought Boosts Reasoning Through Self-Correction in LLMs

This paper introduces Divergent Chain of Thought (DCoT), a method for improving the reasoning capabilities of LLMs. It builds on Chain of Thought (CoT) prompting, which improves performance by having the model generate intermediate reasoning steps. DCoT extends this idea by having the model generate and compare multiple reasoning chains in a single inference step, which can increase the accuracy of the final answer.

Methodology

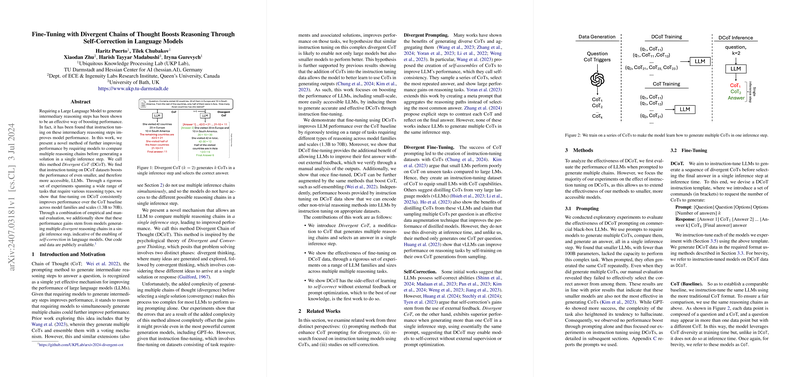

The key innovation of DCoT is instructing LLMs to produce several divergent reasoning paths before committing to a final answer. This is inspired by the cognitive theories of divergent and convergent thinking, which describe problem-solving in two phases: generating numerous ideas (the divergent phase) and then synthesizing them into a single solution (the convergent phase).

For implementation, DCoT fine-tunes models on datasets that contain multiple reasoning paths per question, so the model learns to generate several candidate solutions and select among them. This addresses a limitation of prior models, which could not generate multiple reasoning chains in a single inference step due to the complexity of the task.
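To make the data format concrete, here is a minimal sketch of how one fine-tuning example with several reasoning chains might be assembled. The bracketed tags (`[Question]`, `[Number of answers]`, `[Answer i]`, `[Final answer]`), the `DCoTExample` class, and the `format_dcot_example` helper are illustrative assumptions, not the paper's confirmed template.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DCoTExample:
    prompt: str  # what the model is conditioned on
    target: str  # what the model is trained to generate


def format_dcot_example(question: str, chains: List[str], final_answer: str) -> DCoTExample:
    """Pack k reasoning chains and one final answer into a single training pair.

    The tag names are hypothetical; any consistent template would serve.
    """
    if not chains:
        raise ValueError("at least one reasoning chain is required")
    k = len(chains)
    # The prompt states how many chains are requested (divergent phase).
    prompt = f"[Question] {question}\n[Number of answers] {k}\n"
    body = "\n".join(
        f"[Answer {i}] {chain.strip()}" for i, chain in enumerate(chains, start=1)
    )
    # The target ends with a single committed answer (convergent phase).
    target = f"{body}\n[Final answer] {final_answer}"
    return DCoTExample(prompt=prompt, target=target)


example = format_dcot_example(
    "What is 2 + 3?",
    ["2 plus 3 equals 5.", "Start at 2 and count up 3: 3, 4, 5."],
    "5",
)
```

Because all chains and the final answer sit in one target sequence, the model is trained to produce them in a single generation pass rather than through repeated sampling.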

Results

The experiments covered models ranging from 1.3B to 70B parameters and showed consistent improvement over CoT baselines. Notably, the results show that even smaller, more accessible LLMs benefit from this fine-tuning approach. The performance boost was significant across a variety of tasks, which indicates the method's broad applicability.

Quantitatively, the work showed improvements across datasets covering mathematics, logic, and multi-hop reasoning. Furthermore, DCoT allowed some models to improve their accuracy without external feedback, which indicates a self-correcting capability, a novel advancement in the field.
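One simple way to quantify self-correction is to compare the answer from a model's first reasoning chain with the final answer it commits to after generating further chains. The sketch below counts how often the final answer fixes or breaks the first one. The function name `self_correction_stats` and the exact-match comparison are assumptions for illustration, not the paper's evaluation protocol.

```python
from typing import Dict, Sequence


def self_correction_stats(
    first: Sequence[str], final: Sequence[str], gold: Sequence[str]
) -> Dict[str, int]:
    """Count cases where the final answer fixes or breaks the first-chain answer.

    Answers are compared by exact string match, which is a simplification.
    """
    if not (len(first) == len(final) == len(gold)):
        raise ValueError("all answer lists must have the same length")
    stats = {"corrected": 0, "regressed": 0, "unchanged": 0}
    for first_ans, final_ans, gold_ans in zip(first, final, gold):
        first_ok = first_ans == gold_ans
        final_ok = final_ans == gold_ans
        if not first_ok and final_ok:
            stats["corrected"] += 1  # wrong first chain, right final answer
        elif first_ok and not final_ok:
            stats["regressed"] += 1  # right first chain, wrong final answer
        else:
            stats["unchanged"] += 1  # correctness did not change
    return stats


stats = self_correction_stats(
    first=["4", "7", "9"], final=["5", "7", "8"], gold=["5", "7", "9"]
)
```

A net gain from additional chains would show up as `corrected` exceeding `regressed`.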

Implications and Future Directions

The implications of this research are both practical and theoretical. Practically, DCoT enables smaller models to achieve better performance, making high-quality reasoning accessible without extensive computational resources. This democratizes access to powerful AI and broadens the range of applications for which these LLMs can be used effectively.

Theoretically, the success of this method suggests that further exploration into divergent thinking strategies might unlock additional reasoning capabilities in LLMs. The framework presents a new paradigm where multi-step reasoning does not rely solely on external oversight or feedback loops.

Future research may explore integrating DCoT into larger, more context-rich models or combining it with alternative reasoning paradigms such as code prompting or graph-based reasoning. Additionally, investigating how the scale of divergent reasoning (i.e., the number of reasoning chains generated) affects performance could offer deeper insights into optimizing training and inference strategies.

This research underscores the value of fine-tuning with complex reasoning data and sets the stage for subsequent advancements in enhancing AI reasoning through refined model training techniques.