Deciphering the Factors Influencing the Efficacy of Chain-of-Thought: Probability, Memorization, and Noisy Reasoning

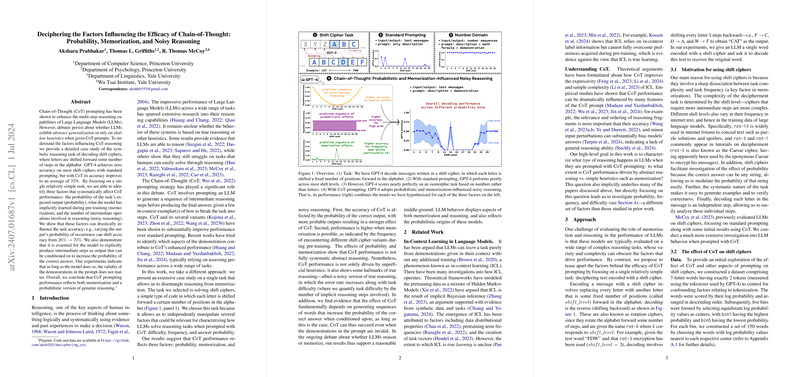

The paper "Deciphering the Factors Influencing the Efficacy of Chain-of-Thought: Probability, Memorization, and Noisy Reasoning" examines what drives the performance of Chain-of-Thought (CoT) prompting in large language models (LLMs). Its central question is whether these models reason genuinely or fall back on memorization and heuristics. Through a controlled case study of decoding shift ciphers, the authors isolate three factors that shape CoT performance: probability, memorization, and noisy reasoning.
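For readers unfamiliar with the task: a shift cipher replaces each letter with the letter k positions later in the alphabet, wrapping from z back to a, and decoding shifts back by k. The sketch below is a minimal illustration, assuming only the 26 Latin letters are shifted and all other characters pass through unchanged; the function names are hypothetical, not taken from the paper.

```python
import codecs
import string

ALPHABET = string.ascii_lowercase  # the 26 letters the cipher rotates over


def shift_char(ch: str, k: int) -> str:
    """Shift one letter k positions forward (mod 26), preserving case."""
    if ch.lower() not in ALPHABET:
        return ch  # leave digits, spaces and punctuation unchanged
    idx = (ALPHABET.index(ch.lower()) + k) % 26
    out = ALPHABET[idx]
    return out.upper() if ch.isupper() else out


def encode(text: str, k: int) -> str:
    return "".join(shift_char(c, k) for c in text)


def decode(text: str, k: int) -> str:
    # Decoding is simply encoding with the opposite shift.
    return encode(text, -k)


if __name__ == "__main__":
    cipher = encode("stay", 3)
    print(cipher)             # vwdb
    print(decode(cipher, 3))  # stay
    # rot-13 is the special case k = 13; Python even ships it as a text codec.
    print(encode("Hello", 13), codecs.encode("Hello", "rot_13"))  # Uryyb Uryyb
```

The fact that rot-13 is common enough to appear as a built-in codec hints at why it is over-represented in text that LLMs are trained on.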

Key Findings

- Probability Influence: The probability of the expected output strongly affects CoT performance. With GPT-4, for instance, accuracy ranges from 26% to 70% depending on output probability. The effect also surfaces as unfaithfulness: the model sometimes overrides its own intermediate CoT steps with a high-probability final answer, even when that answer is incorrect.

- Memorization Role: Memorization shows up as performance spikes on variants that occur frequently in pre-training data, most notably rot-13 (a shift of 13). Although a shift of 13 is among the most demanding in terms of reasoning steps, its frequency in training corpora lets LLMs handle it far better than neighboring shift levels.

- Noisy Reasoning: CoT reasoning behaves like noisy symbolic reasoning: accuracy falls as task complexity, measured by the number of reasoning steps required, increases. In the shift-cipher task, performance drops as the required number of steps grows, with intermediate shift levels hit hardest.
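Because a shift of k can be undone either by moving back k letters or forward 26 - k letters, one natural count of implicit steps is min(k, 26 - k), which peaks at k = 13. The sketch below computes this and, purely as a toy illustration (not the paper's fitted model), assumes each step fails independently with a hypothetical per-step error rate `eps`, so expected per-letter accuracy decays as (1 - eps) ** steps.

```python
def implicit_steps(k: int) -> int:
    """Fewest single-letter moves needed to undo a shift of k (either direction)."""
    k %= 26
    return min(k, 26 - k)


def toy_letter_accuracy(k: int, eps: float = 0.05) -> float:
    """Toy noisy-reasoning model: each step succeeds independently with prob 1 - eps."""
    return (1 - eps) ** implicit_steps(k)


if __name__ == "__main__":
    for k in (1, 3, 7, 12, 13, 14, 20, 25):
        print(f"shift={k:2d} steps={implicit_steps(k):2d} "
              f"toy_acc={toy_letter_accuracy(k):.3f}")
```

In this toy model shift 13 is the hardest case and shift 25 is as easy as shift 1, giving the U-shaped difficulty profile; a rot-13 spike in real results is exactly the kind of deviation from this profile that the memorization factor accounts for.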

Methodology

The authors focus on a single task so that reasoning can be separated from memorization and so that task frequency, difficulty, and output probability can be varied independently. Shift-cipher decoding is simple, yet it reveals how these three factors interact to determine CoT efficacy across three LLMs: GPT-4, Claude 3, and Llama 3.1.
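One way to picture this design is as a factorial grid: the shift level sets difficulty (and, for rot-13, frequency in pre-training text), while the choice of plaintext word sets output probability. A minimal sketch follows; the word lists and their `prob_bin` labels are hypothetical placeholders, since the paper's actual word sets and probability estimates are not reproduced here.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Example:
    plaintext: str
    ciphertext: str
    shift: int
    steps: int      # min(shift, 26 - shift): the difficulty knob
    prob_bin: str   # hypothetical output-probability label: the probability knob


def shift_word(word: str, k: int) -> str:
    """Encode a lowercase a-z word with a shift cipher of k."""
    return "".join(chr((ord(c) - ord("a") + k) % 26 + ord("a")) for c in word)


def build_grid(words_by_bin: dict[str, list[str]], shifts: range) -> list[Example]:
    """Cross every plaintext word with every shift level."""
    grid = []
    for (prob_bin, words), k in product(words_by_bin.items(), shifts):
        for w in words:
            grid.append(Example(w, shift_word(w, k), k, min(k, 26 - k), prob_bin))
    return grid


if __name__ == "__main__":
    # Hypothetical words; a real study would bin words by a language model's probability.
    words = {"high": ["there", "about"], "low": ["zvexq", "jomph"]}
    grid = build_grid(words, range(1, 26))
    print(len(grid))  # 4 words x 25 shifts = 100
    print(grid[0])
```

Keeping the word set fixed while sweeping the shift (or vice versa) is what lets each factor's effect be read off separately.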

Experimentation

Several prompting strategies were tested:

- Standard Prompting: Largely ineffective, especially at the harder shift levels.

- Text-CoT Prompting: Instructs the model to decode one letter at a time; this improves performance but remains error-prone.

- Math-CoT and Number-CoT Prompting: Framing the steps as mathematical operations gave the best results, approaching perfect accuracy by abstracting away from linguistic noise.
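One way to picture a math-style chain of thought for this task: map each letter to its alphabet position, subtract the shift modulo 26, and map the result back to a letter. The sketch below generates such a trace; the trace format and the function name are hypothetical illustrations, not the paper's actual prompt.

```python
def math_cot_trace(ciphertext: str, k: int) -> tuple[list[str], str]:
    """Decode a lowercase ciphertext, recording one arithmetic step per letter."""
    lines, decoded = [], []
    for c in ciphertext:
        i = ord(c) - ord("a")   # letter -> position 0..25
        j = (i - k) % 26        # undo the shift modulo 26
        p = chr(j + ord("a"))   # position -> letter
        lines.append(f"{c} = {i}; ({i} - {k}) mod 26 = {j}; {j} = {p}")
        decoded.append(p)
    return lines, "".join(decoded)


if __name__ == "__main__":
    steps, answer = math_cot_trace("vwdb", 3)
    print("\n".join(steps))
    print("Answer:", answer)  # Answer: stay
```

Each line is a fixed-size computation regardless of k, in contrast to counting k letters through the alphabet one at a time, which is one plausible intuition for why arithmetic framings are less sensitive to the shift level.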

Logistic regression analyses further confirm that output probability, shift-level frequency, and the number of reasoning steps each significantly affect accuracy, showing that CoT performance depends on several factors at once.
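Such a regression treats each decoded example as a binary outcome (correct or not) and estimates how much each factor shifts the log-odds of success. The sketch below fits one by maximum likelihood on *synthetic* data; the feature names (`log_prob`, `is_rot13`, `steps`) and the generating coefficients are assumptions chosen for illustration, not the paper's variables or estimates.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def make_synthetic(n: int, true_w: list[float], seed: int = 0):
    """Synthetic rows [1, log_prob, is_rot13, steps] with Bernoulli 'correct' outcomes."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        k = rng.randint(1, 25)  # shift level
        row = [1.0, rng.uniform(-3.0, 0.0), float(k == 13), float(min(k, 26 - k))]
        X.append(row)
        y.append(1 if rng.random() < sigmoid(dot(true_w, row)) else 0)
    return X, y


def solve(A: list[list[float]], b: list[float]) -> list[float]:
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_logistic(X: list[list[float]], y: list[int], iters: int = 12) -> list[float]:
    """Maximum-likelihood logistic regression via Newton's method (IRLS)."""
    d = len(X[0])
    w = [0.0] * d
    for _ in range(iters):
        grad = [0.0] * d                   # X^T (y - p)
        H = [[0.0] * d for _ in range(d)]  # X^T diag(p(1 - p)) X
        for row, t in zip(X, y):
            p = sigmoid(dot(w, row))
            for i in range(d):
                grad[i] += (t - p) * row[i]
                for j in range(d):
                    H[i][j] += p * (1 - p) * row[i] * row[j]
        w = [wi + si for wi, si in zip(w, solve(H, grad))]
    return w


if __name__ == "__main__":
    true_w = [1.0, 1.0, 2.5, -0.3]  # intercept, log_prob, is_rot13, steps
    X, y = make_synthetic(3000, true_w)
    names = ["intercept", "log_prob", "is_rot13", "steps"]
    for name, coef in zip(names, fit_logistic(X, y)):
        print(f"{name:>9}: {coef:+.2f}")
```

On this synthetic data the fitted signs should match the generating weights (positive for `log_prob` and `is_rot13`, negative for `steps`); nothing here reproduces the paper's numbers. Newton's method is used instead of plain gradient descent because it converges in a few iterations even though `steps` and the rare `is_rot13` flag sit on very different scales.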

Implications and Future Perspectives

The findings advocate for a nuanced understanding of LLM reasoning capabilities:

- CoT performance reflects memorization together with a probabilistic, noisy version of genuine reasoning.

- The tendency to favor high-probability answers over the outcome of the reasoning steps points to concrete areas where LLM reasoning could be improved.

- Encouraging internal reasoning that does not depend heavily on textual self-conditioning remains a frontier for future research.

The paper highlights the probabilistic foundations of these models and shows that their behavior reflects a balance between memorization and reasoning. These insights point toward refinements of CoT methods and, more broadly, toward research on AI systems capable of more genuine reasoning across diverse tasks.