The paper "LLM Uncertainty Quantification through Directional Entailment Graph and Claim Level Response Augmentation" addresses the challenge of quantifying uncertainty in responses generated by large language models (LLMs), which are prone to errors caused by data sparsity and hallucination. The authors introduce an uncertainty quantification (UQ) method that combines directional entailment logic with claim-level response augmentation.

Overview

- Directional Entailment Graph:

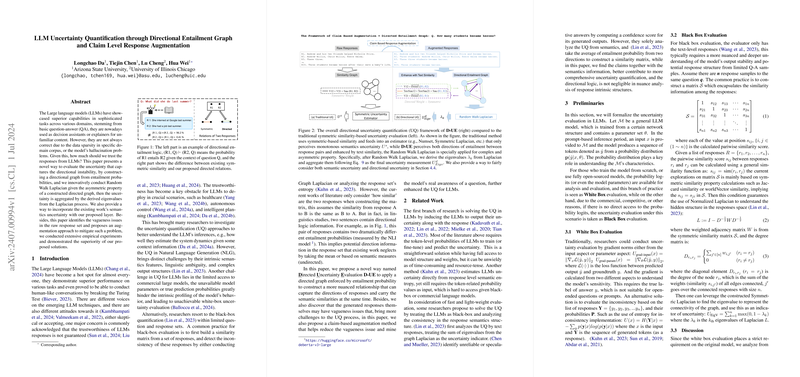

- The paper proposes constructing a directed graph that encodes the entailment probabilities between responses. Because entailment is asymmetric, traditional symmetric similarity metrics miss direction-specific information: a specific response such as "The capital of France is Paris" entails the vaguer response "Paris", but not the reverse.

- The random walk Laplacian of this directed graph is then computed, and its eigenvalues serve as indicators of uncertainty. They reflect how dispersed the responses are and how well connected the graph is, which gives insight into model uncertainty.
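To make the asymmetry concrete, here is a minimal Python sketch of the graph's weight matrix, where `W[i][j]` is the probability that response i entails response j. The paper obtains these probabilities from an entailment model; `toy_entailment` below is a hypothetical token-overlap stand-in chosen only because it is asymmetric, and all function names are illustrative assumptions.

```python
from typing import Callable, List

# Scorer type: returns P(premise entails hypothesis) in [0, 1].
EntailFn = Callable[[str, str], float]


def toy_entailment(premise: str, hypothesis: str) -> float:
    """Hypothetical, asymmetric stand-in for an NLI model (not the paper's scorer).

    Returns the fraction of hypothesis tokens that also occur in the premise, so a
    specific answer "entails" a vaguer one more strongly than the reverse.
    """
    premise_tokens = set(premise.lower().split())
    hypothesis_tokens = hypothesis.lower().split()
    if not hypothesis_tokens:
        return 0.0
    return sum(t in premise_tokens for t in hypothesis_tokens) / len(hypothesis_tokens)


def entailment_matrix(responses: List[str], entail: EntailFn) -> List[List[float]]:
    """Directed edge weights: W[i][j] = P(response i entails response j).

    Self-loops are set to 1.0; W is generally not symmetric.
    """
    n = len(responses)
    return [
        [1.0 if i == j else entail(responses[i], responses[j]) for j in range(n)]
        for i in range(n)
    ]


def symmetrize(w: List[List[float]]) -> List[List[float]]:
    """What a symmetric similarity keeps: the mean of the two directions."""
    n = len(w)
    return [[(w[i][j] + w[j][i]) / 2 for j in range(n)] for i in range(n)]


responses = ["Paris", "The capital of France is Paris"]
w = entailment_matrix(responses, toy_entailment)
print(w[1][0], w[0][1])  # 1.0 vs. about 0.167: specific -> vague is stronger
s = symmetrize(w)
print(s[0][1] == s[1][0])  # True: the direction of entailment is lost
```

Symmetrizing collapses the two directional scores into a single value, which is exactly the information a directed graph is meant to retain.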
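The spectral step can be sketched with NumPy as follows, assuming a weight matrix with self-loops on the diagonal and the random walk Laplacian `L_rw = I - D^{-1} W`, where `D` holds the out-degrees (row sums). This summary does not spell out how the eigenvalues become a single score, so `eigen_uncertainty` uses an EigV-style sum, `sum_k max(0, 1 - Re(lambda_k))`, which roughly counts the effective number of response clusters; taking real parts of possibly complex eigenvalues is also an assumption of this sketch.

```python
import numpy as np


def random_walk_laplacian(w: np.ndarray) -> np.ndarray:
    """Return L_rw = I - D^{-1} W, where D is the diagonal matrix of row sums (out-degrees)."""
    w = np.asarray(w, dtype=float)
    out_degree = w.sum(axis=1)
    if np.any(out_degree <= 0):
        raise ValueError("every node needs a positive out-degree, e.g. a self-loop")
    # Dividing each row by its out-degree yields the row-stochastic D^{-1} W.
    return np.eye(len(w)) - w / out_degree[:, None]


def eigen_uncertainty(w: np.ndarray) -> float:
    """EigV-style score: sum over k of max(0, 1 - Re(lambda_k)) for eigenvalues of L_rw.

    Larger values mean more loosely connected responses, i.e. higher uncertainty.
    Using the real part of (possibly complex) eigenvalues is an assumption.
    """
    eigenvalues = np.linalg.eigvals(random_walk_laplacian(w))
    return float(np.sum(np.maximum(0.0, 1.0 - eigenvalues.real)))


agree = np.ones((4, 4))  # every response entails every other: one cluster
disagree = np.eye(4)     # self-loops only: four unrelated responses
print(eigen_uncertainty(agree))     # approximately 1.0
print(eigen_uncertainty(disagree))  # 4.0
```

The random walk normalization (rather than a symmetric one) is used here because it stays well defined when `W` is not symmetric.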

- Response Augmentation:

- A major contribution is a claim-based response augmentation technique. It identifies vague claims in the response set and supplements incomplete or unclear ones with context derived from the question, so that potentially correct claims are not overlooked; this makes the UQ more robust and reliable.

- The augmentation extracts claims from the responses and reconciles them with the question context to produce complete claims that better reflect the correct underlying assertions.
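The augmentation step can be outlined as a small pipeline. This is a hypothetical sketch, not the paper's prompts: `split_claims` is a naive sentence splitter standing in for LLM-based claim extraction, `complete_claim` is a caller-supplied hook (in practice presumably an LLM call) that rewrites a claim using the question as context, and `toy_complete` is a toy substitute for that hook. The augmented responses would then, presumably, be scored alongside the original samples.

```python
import re
from typing import Callable, List

# Hypothetical hook: (question, claim) -> self-contained claim. In practice this
# would presumably be an LLM prompt; it is an assumption of this sketch.
CompleteClaimFn = Callable[[str, str], str]


def split_claims(response: str) -> List[str]:
    """Naive claim extraction: one claim per sentence (stand-in for an LLM extractor)."""
    parts = re.split(r"(?<=[.!?])\s+", response.strip())
    return [p.strip() for p in parts if p.strip()]


def augment_responses(
    question: str, responses: List[str], complete_claim: CompleteClaimFn
) -> List[str]:
    """Return the original responses plus one context-completed claim per new claim."""
    augmented = list(responses)
    seen = set(responses)
    for response in responses:
        for claim in split_claims(response):
            completed = complete_claim(question, claim)
            if completed not in seen:  # de-duplicate (a choice of this sketch)
                seen.add(completed)
                augmented.append(completed)
    return augmented


def toy_complete(question: str, claim: str) -> str:
    """Toy stand-in for an LLM: restate the claim with the question as context."""
    return f"{question} {claim}"


question = "Which city is the capital of France?"
responses = ["Paris.", "It is Paris. It is in Europe."]
for r in augment_responses(question, responses, toy_complete):
    print(r)
```

Keeping extraction and completion as separate, swappable functions makes it easy to replace the toy pieces with real models without touching the pipeline.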

- Comprehensive Integration:

- The paper combines the directional entailment-based uncertainty measure with existing semantic uncertainty techniques. Because the measures have different scales, they are normalized before being aggregated into a single, holistic uncertainty score.
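A minimal sketch of the normalize-then-aggregate step, assuming min-max normalization over a batch of questions followed by a weighted average. The exact normalization and weights are not given in this summary, so both are assumptions, as are the measure names; every measure is assumed to be oriented so that higher means more uncertain.

```python
from typing import Dict, List


def min_max(scores: List[float]) -> List[float]:
    """Rescale scores to [0, 1]; a constant list maps to all zeros."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def combine_uncertainties(
    measures: Dict[str, List[float]], weights: Dict[str, float]
) -> List[float]:
    """Weighted average of min-max-normalized measures, one combined score per question."""
    normalized = {name: min_max(vals) for name, vals in measures.items()}
    n = len(next(iter(measures.values())))
    total = sum(weights[name] for name in measures)
    return [
        sum(weights[name] * normalized[name][i] for name in measures) / total
        for i in range(n)
    ]


measures = {
    "directional": [1.2, 3.0, 2.1],  # e.g. eigenvalue-based scores (illustrative)
    "semantic": [0.05, 0.40, 0.90],  # e.g. a semantic-entropy-style baseline (illustrative)
}
print(combine_uncertainties(measures, {"directional": 0.5, "semantic": 0.5}))
```

Normalizing first prevents the measure with the larger numeric range (here the eigenvalue-based one) from dominating the combined score.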

Experimental Framework

- Datasets and Evaluation: The framework is evaluated on widely used datasets, including CoQA, TriviaQA, and NLQuAD, with Llama3 and GPT-3.5-turbo as the underlying LLMs.

- Metrics: The evaluation uses AUROC (area under the receiver operating characteristic curve) and AUARC (area under the accuracy-rejection curve); the latter addresses shortcomings of AUROC when correct and incorrect answers are imbalanced. Using both metrics gives a more complete view of UQ effectiveness.

- Comparative Analysis: The approach is compared against 12 baseline methods built on different semantic similarity measures and shows consistent improvement across tasks and datasets.
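Both metrics can be computed from per-question uncertainty scores and correctness labels. The sketch below uses the Mann-Whitney form of AUROC (low uncertainty as the signal for a correct answer) and a common discrete approximation of AUARC; the function names are illustrative, and the paper's exact AUARC discretization may differ.

```python
from typing import List


def auroc(uncertainty: List[float], correct: List[bool]) -> float:
    """AUROC of using low uncertainty to detect correct answers (Mann-Whitney form).

    Over all (correct, incorrect) pairs, counts how often the correct answer has
    lower uncertainty; ties count one half.
    """
    pos = [u for u, c in zip(uncertainty, correct) if c]
    neg = [u for u, c in zip(uncertainty, correct) if not c]
    if not pos or not neg:
        raise ValueError("need at least one correct and one incorrect answer")
    wins = sum(1.0 if p < q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def auarc(uncertainty: List[float], correct: List[bool]) -> float:
    """Area under the accuracy-rejection curve (discrete approximation).

    Answers are sorted from least to most uncertain; for each k, the accuracy of
    the k most confident answers is computed, and these accuracies are averaged.
    """
    order = sorted(range(len(uncertainty)), key=lambda i: uncertainty[i])
    hits, area = 0, 0.0
    for k, i in enumerate(order, start=1):
        hits += int(correct[i])
        area += hits / k
    return area / len(order)


u = [0.1, 0.4, 0.2, 0.3]
c = [True, False, False, True]
print(auroc(u, c), auarc(u, c))
```

If every answer is correct, `auroc` is undefined and raises `ValueError`, while `auarc` still returns 1.0; this is one way AUARC remains usable under heavy class imbalance.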

Findings

- The integrated approach (D-UE) significantly improves uncertainty estimation over traditional semantics-only UQ methods by capturing directional entailment and resolving vagueness in responses.

- The claim augmentation process is particularly effective when responses use vague language: it makes correct responses easier to identify and thereby improves downstream UQ accuracy.

Conclusion

The proposed method, which combines directional entailment graph construction with claim-level response augmentation, addresses key limitations of existing approaches to evaluating LLM responses. Together, the two components capture directional semantic relations that symmetric similarity measures discard, which improves the trustworthiness of LLM-generated responses in real-world applications. The work lays groundwork for future research on UQ and, more broadly, on LLM reliability and trustworthiness.