OMG-LLaVA: Bridging Image-level, Object-level, Pixel-level Reasoning and Understanding

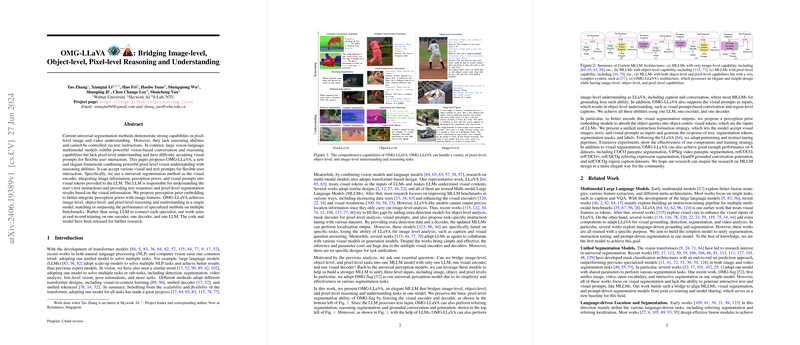

The paper presents OMG-LLaVA, a multimodal framework that unifies image-level, object-level, and pixel-level reasoning and understanding in a single model, where earlier systems typically cover only one or two of these levels. Developed by researchers from Wuhan University, Skywork AI, and S-Lab, NTU, OMG-LLaVA combines the strengths of pixel-level segmentation methods with those of large vision-language multimodal models.

Summary and Methodology

OMG-LLaVA employs a simple architecture that pairs a universal segmentation method, OMG-Seg, serving as the visual encoder, with an LLM. This design lets the model accept a variety of visual and text prompts, enabling flexible user interaction. Its main contributions are as follows:

- Perception Prior Embedding: This strategy integrates object information into the image features. Object queries are embedded into pixel-centric visual tokens based on segmentation masks, thereby preserving detailed object-level information.

- Tokenization Strategy: OMG-LLaVA unifies image-level, object-level, and pixel-level tasks into token generation tasks. It uses pixel-centric and object-centric visual tokens, which are processed by an LLM to output detailed text responses and segmentation results.

- Unified Instruction Formulation: This allows the model to handle diverse tasks such as image captioning, referring segmentation, and grounded conversations, with a single architecture.
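The perception prior embedding described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes that per-pixel mask scores are normalized with a softmax over the object queries and that the resulting weighted query is added to the pixel feature. The function name, argument layout, and toy values are hypothetical, and plain Python lists stand in for tensors.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def perception_prior_embedding(pixel_features, object_queries, mask_logits):
    """Fuse object queries into pixel features (illustrative assumption).

    pixel_features: P pixel feature vectors, each of length C
    object_queries: Q object query vectors, each of length C
    mask_logits:    mask_logits[q][p] scores pixel p belonging to object q
    """
    num_queries = len(object_queries)
    channels = len(pixel_features[0])
    fused = []
    for p, feature in enumerate(pixel_features):
        # Assumption: normalize this pixel's mask scores across all queries.
        weights = softmax([mask_logits[q][p] for q in range(num_queries)])
        # Weighted average of the object queries for this pixel.
        weighted_query = [
            sum(weights[q] * object_queries[q][c] for q in range(num_queries))
            for c in range(channels)
        ]
        # Assumption: additive fusion with the original pixel feature.
        fused.append([f + w for f, w in zip(feature, weighted_query)])
    return fused


# Toy example: two pixels, two objects, two channels.
pixel_features = [[0.0, 0.0], [1.0, 1.0]]
object_queries = [[1.0, 0.0], [0.0, 1.0]]
mask_logits = [[10.0, -10.0],   # object 0 strongly covers pixel 0
               [-10.0, 10.0]]   # object 1 strongly covers pixel 1
fused = perception_prior_embedding(pixel_features, object_queries, mask_logits)
```

In this toy case each pixel is dominated by one object, so its fused token is close to the pixel feature plus that object's query. With uniform mask scores, every pixel would instead receive the average of all queries.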

The framework builds on the universal segmentation capabilities of OMG-Seg and integrates them with LLMs such as InternLM2-7B. The model is trained end to end in two stages, pretraining followed by instruction tuning, so that it can respond flexibly and accurately to both visual and text prompts.

Results and Implications

The model's efficacy is demonstrated across numerous benchmarks:

- Referring Expression Segmentation (RES): On refCOCO, refCOCO+, and refCOCOg, OMG-LLaVA outperforms contemporary models such as LISA and PixeLLM, with improvements of 1.5-5.0 cIoU points over its competitors.

- Grounded Conversation Generation (GCG): OMG-LLaVA excels in generating accurate and detailed descriptions interleaved with segmentation masks. It surpasses other models in both text description metrics (METEOR, CIDEr) and segmentation quality (AP50, mIoU).

- General Pixel-level Tasks: Beyond niche benchmarks, OMG-LLaVA retains robust capabilities in more general segmentation tasks like panoptic segmentation on COCO and video segmentation on VIPSeg.
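The two IoU-style metrics named above aggregate differently, which affects how the reported numbers should be read. The sketch below uses the common definitions: cIoU accumulates intersections and unions over the whole dataset before dividing, while mIoU averages per-sample IoU. It is not taken from the paper's evaluation code. The function names, the flat 0/1 mask representation, and the convention of scoring an empty prediction against an empty target as 1.0 are assumptions made for illustration.

```python
def intersection_and_union(pred, target):
    """Count intersecting and united pixels of two flat binary masks."""
    inter = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    return inter, union


def ciou(preds, targets):
    """Cumulative IoU: total intersection divided by total union."""
    total_inter = 0
    total_union = 0
    for pred, target in zip(preds, targets):
        inter, union = intersection_and_union(pred, target)
        total_inter += inter
        total_union += union
    return total_inter / total_union if total_union else 0.0


def miou(preds, targets):
    """Mean IoU: average of the per-sample IoU values."""
    ious = []
    for pred, target in zip(preds, targets):
        inter, union = intersection_and_union(pred, target)
        # Assumption: two empty masks count as a perfect match.
        ious.append(inter / union if union else 1.0)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example: one large, mostly correct mask and one small, wrong mask.
preds = [[1, 1, 1, 1], [1, 0, 0, 0]]
targets = [[1, 1, 1, 0], [0, 1, 0, 0]]
print(ciou(preds, targets))  # 3 / 6 = 0.5
print(miou(preds, targets))  # (0.75 + 0.0) / 2 = 0.375
```

Because cIoU pools pixels across samples, large objects weigh more heavily in it than small ones. That is why the same predictions score 0.5 under cIoU and 0.375 under mIoU here.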

The robustness and generalizability of OMG-LLaVA stem from a design that balances simplicity and effectiveness. During instruction tuning, the visual encoder and decoder are frozen and only the projection and LLM components are tuned, which keeps training efficient.

Future Directions

While OMG-LLaVA offers notable advancements, several areas could benefit from further research:

- Enrichment with Diverse Data: Extending the instruction-tuning datasets to include more complex and varied visual prompts and segmentation scenarios could bolster the model's applicability and accuracy in real-world tasks.

- Integration with Video Segmentation: Enhancing OMG-LLaVA to handle temporal dynamics for video segmentation could expand its utility in applications requiring spatial-temporal reasoning.

- Part-level Understanding: Current capabilities focus on pixel-level segmentation of whole objects. Incorporating part-level segmentation would provide more granular outputs, which matter for applications that require fine-detail analysis.

Conclusion

OMG-LLaVA represents a significant step towards comprehensive multimodal perception systems by bridging image-level, object-level, and pixel-level tasks within a unified architecture. Its contributions in perception prior embedding, tokenization strategy, and unified instruction formulation offer a path towards more flexible and capable models. Future work can address the limitations noted above, but OMG-LLaVA already provides a strong reference point for more integrated multimodal systems.