Efficacy of LLM Self-Play in Non-Zero-Sum Games

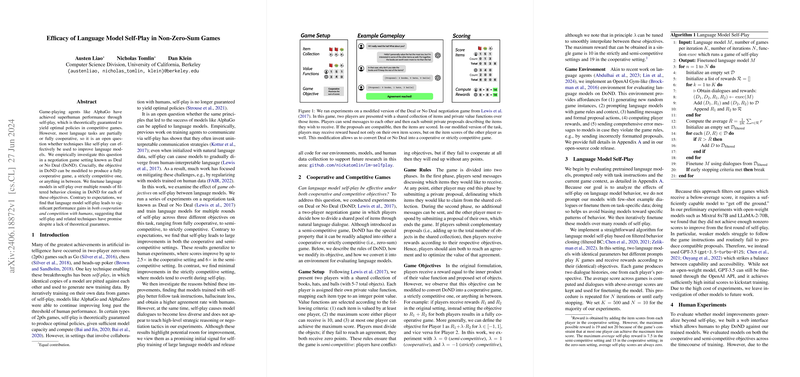

The paper "Efficacy of LLM Self-Play in Non-Zero-Sum Games" is an empirical study of whether self-play can improve the performance of LLMs outside strictly competitive environments. The investigation focuses on the "Deal or No Deal" (DoND) negotiation game, a setting that can be configured to be fully cooperative, semi-competitive, or strictly competitive.
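To make the three settings concrete, here is a minimal sketch of how one objective switch could turn the same division of items into a cooperative, semi-competitive, or strictly competitive reward. The function names, the item values, and the reward formulas (sum of both scores, own score only, score difference) are illustrative assumptions and are not taken from the paper.

```python
from typing import Dict

Items = Dict[str, int]  # item name -> count or per-item value


def points(values: Items, allocation: Items) -> int:
    """Total value a player receives from the items allocated to them."""
    return sum(values[item] * count for item, count in allocation.items())


def reward(own: int, other: int, objective: str) -> int:
    """Map both players' points to one player's reward (assumed formulas)."""
    if objective == "cooperative":
        return own + other   # both players share the joint total
    if objective == "semi-competitive":
        return own           # each player only cares about their own points
    if objective == "strictly-competitive":
        return own - other   # zero-sum: one player's gain is the other's loss
    raise ValueError(f"unknown objective: {objective}")


# Hypothetical private valuations and an agreed split of 3 books, 2 hats, 1 ball.
values_a = {"book": 1, "hat": 3, "ball": 1}
values_b = {"book": 2, "hat": 1, "ball": 2}
alloc_a = {"book": 1, "hat": 2, "ball": 0}
alloc_b = {"book": 2, "hat": 0, "ball": 1}

points_a = points(values_a, alloc_a)
points_b = points(values_b, alloc_b)

rewards_for_a = {
    obj: reward(points_a, points_b, obj)
    for obj in ("cooperative", "semi-competitive", "strictly-competitive")
}
```

Under these assumed formulas, the strictly competitive rewards of the two players always sum to zero, which is what makes that setting zero-sum.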

Key Contributions and Findings

- Configurable Game Environment: The authors adapted the DoND game so that it can be set up as a cooperative, semi-competitive, or strictly competitive task. This adaptation permits the evaluation of LLMs under varying degrees of competition and cooperation.

- Self-Play Algorithm Implementation: The authors used iterative fine-tuning based on filtered behavior cloning. In each round, the LLM played many games against itself, successful dialogues were retained, and the model was fine-tuned on that selection. The process was repeated for ten rounds, using a pretrained model (GPT-3.5 in particular) as the base.

- Performance Metrics and Evaluation: Contrary to conventional wisdom suggesting that self-play has limited use in non-zero-sum games, significant improvements were observed:

  - Scores: Self-play led to large gains in the semi-competitive and cooperative settings, with scores improving by up to 14 times (semi-competitive) and 17 times (cooperative). Notably, models trained via self-play scored higher than baseline GPT-4 models.

  - Generalization: The improvements were not confined to self-play but also carried over to games played with human participants. Scores in games with humans increased by factors of up to 6 in the semi-competitive setting and 2 in the cooperative setting.

- Human Evaluation: Human participants were recruited through crowdsourcing and offered bonus incentives tied to achieving high game scores. This design was intended to yield high-quality data for evaluating how well the models generalize.

- Divergence in Strictly Competitive Games: Notably, the benefits of self-play were not replicated in strictly competitive settings (zero-sum scenarios). Models tended to overfit during self-play, failing to generalize when paired against other agents like GPT-4 or human participants.
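The iterative procedure described above (play, filter, fine-tune, repeat) can be sketched as a short loop. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Dialogue` type, the above-the-mean filter, and the toy `play_games` and `finetune` stand-ins are all hypothetical, and a real setup would call an LLM and a fine-tuning service in their place.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Any, Callable, List


@dataclass
class Dialogue:
    transcript: str
    score: float  # game score earned in this dialogue


def keep_above_mean(dialogues: List[Dialogue]) -> List[Dialogue]:
    """Assumed filter: keep dialogues scoring above the round's mean."""
    threshold = mean(d.score for d in dialogues)
    return [d for d in dialogues if d.score > threshold]


def filtered_behavior_cloning(
    model: Any,
    play_games: Callable[[Any], List[Dialogue]],
    finetune: Callable[[Any, List[Dialogue]], Any],
    rounds: int = 10,
) -> Any:
    """Repeat: self-play, filter the dialogues, fine-tune on what survives."""
    for _ in range(rounds):
        dialogues = play_games(model)      # the model plays against itself
        kept = keep_above_mean(dialogues)  # retain only successful dialogues
        if kept:                           # skip the update if nothing passed
            model = finetune(model, kept)
    return model


# Toy stand-ins so the loop runs end to end: the "model" is one skill number.
def toy_play_games(skill: float) -> List[Dialogue]:
    return [Dialogue(f"offset {o}", skill + o) for o in (-1.0, 0.0, 1.0)]


def toy_finetune(skill: float, kept: List[Dialogue]) -> float:
    return mean(d.score for d in kept)  # imitate the retained dialogues


final_skill = filtered_behavior_cloning(0.0, toy_play_games, toy_finetune)
```

In the toy version, each round keeps only the best of three games and moves the skill value up to it, which mirrors how filtering shifts the training distribution toward higher-scoring behavior.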

Analytical Insights and Implications

- Dialogue Characteristics: The analysis revealed several patterns:

  - Dialogue lengths increased significantly in cooperative settings, suggesting more elaborate communication strategies.

  - Conversely, semi-competitive dialogues became more concise over iterations, potentially as a strategy to reach agreements faster.

  - Vocabulary usage also varied, contracting in semi-competitive games but expanding in cooperative interactions.

- Strategic Complexity: Despite performance enhancements, the increase in scores was largely attributed to an improved ability to reach valid agreements, rather than the adoption of advanced negotiation strategies. This suggests a strong potential for further performance boosts if self-play were combined with additional training methodologies.

- Filtered Behavior Cloning: The observed improvements underscore the efficacy of filtered behavior cloning in steering models toward desirable behaviors through self-play, particularly in environments where LLMs can leverage their inherent generalization capabilities.
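The dialogue statistics discussed above (length and vocabulary size) are straightforward to compute. The sketch below shows one possible way, assuming that length is counted in messages per dialogue and that vocabulary is the set of lowercased, punctuation-stripped words. Both choices, along with the sample dialogues, are assumptions made for illustration; the paper may measure these quantities differently.

```python
import string
from typing import List

Dialogue = List[str]  # one dialogue = an ordered list of messages


def tokens(message: str) -> List[str]:
    """Lowercase whitespace tokens with surrounding punctuation removed."""
    words = (raw.strip(string.punctuation).lower() for raw in message.split())
    return [w for w in words if w]


def mean_dialogue_length(dialogues: List[Dialogue]) -> float:
    """Average number of messages per dialogue."""
    return sum(len(d) for d in dialogues) / len(dialogues)


def vocabulary_size(dialogues: List[Dialogue]) -> int:
    """Number of distinct tokens used across all dialogues."""
    return len({t for d in dialogues for m in d for t in tokens(m)})


# Hypothetical dialogues sampled from one self-play round.
round_0 = [
    ["I want the hat.", "Take the hat, I keep the books.", "Deal."],
    ["Books for me?", "Deal."],
]

length_0 = mean_dialogue_length(round_0)
vocab_0 = vocabulary_size(round_0)
```

Computing the same two numbers for each self-play round would show whether dialogues grow or shrink and whether the vocabulary expands or contracts over iterations.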

Implications and Future Directions

The promising outcomes from cooperative and semi-competitive settings suggest self-play as a viable technique for enhancing LLMs in broader real-world scenarios, beyond traditional game environments. Future research trajectories could explore:

- Integration with Advanced Techniques: Combining self-play with methods such as reinforcement learning from human feedback (RLHF) or natural language reflections could potentially address current limitations in strategic depth.

- Population Play and Diverse Objectives: Investigating more sophisticated self-play configurations, such as diverse agent populations, could further mitigate overfitting and foster better generalization.

- Real-World Applications: Extending the application of self-play beyond synthetic or game-based contexts to real-world tasks could validate its utility in practical AI scenarios.

Conclusion

This paper offers compelling evidence that LLM self-play improves performance in cooperative and semi-competitive tasks, providing a foundation for future work toward more capable and versatile AI systems. While challenges remain, especially in strictly competitive contexts where the gains did not generalize, the evidence points to significant prospects for self-play in refining and advancing the capabilities of LLMs.